We wrote this beginner’s guide to help you understand some of the basics and where your time is best spent to maximize impact.

The Beginner’s Guide to Technical SEO

What is technical SEO?

Technical SEO is the practice of optimizing your website to help search engines find, crawl, understand, and index your pages. It helps increase visibility and rankings in search engines.

How complicated is technical SEO?

It depends. The fundamentals aren’t really difficult to master, but technical SEO can be complex and hard to understand. I’ll keep things as simple as I can with this guide.

In this chapter we’ll cover how to make sure search engines can efficiently crawl your content.

How crawling works

Crawling is where search engines grab content from pages and use the links on them to find even more pages. There are a few ways you can control what gets crawled on your website. Here are a few options.

Robots.txt

A robots.txt file tells search engines where they can and can’t go on your site.

Google may index pages that it can’t crawl if links are pointing to those pages. This can be confusing: blocking a page in robots.txt stops crawling, not indexing. If you want to keep pages from being indexed, check out this guide and flowchart, which walk you through the process.
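To make this concrete, here’s a minimal Python sketch that checks URLs against a robots.txt file using the standard library’s `urllib.robotparser`. The file contents, the `/admin/` path, and the example.com URLs are made up for illustration. Keep in mind that Python’s parser applies the first matching rule, so its results can differ from how a given search engine evaluates more complex files.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the paths are illustrative only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawl_allowed(url: str, user_agent: str = "*") -> bool:
    """Return True if the parsed rules allow user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


print(is_crawl_allowed("https://example.com/blog/post"))
print(is_crawl_allowed("https://example.com/admin/settings"))
```

The first URL is allowed and the second is blocked, because `/admin/settings` falls under the `Disallow: /admin/` rule.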

Crawl rate

There’s a crawl-delay directive you can use in robots.txt that many crawlers support. It lets you set how often they can crawl pages. Unfortunately, Google doesn’t respect this.[1] For Google, you’ll need to change the crawl rate in Google Search Console.[2]
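Here’s a small sketch of how a crawler that supports this directive might read it, again with Python’s `urllib.robotparser`. The robots.txt content and the `ExampleBot` name are hypothetical, and crawlers don’t all interpret the value the same way (seconds between requests is a common reading).

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt asking all crawlers to slow down.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# crawl_delay() returns None when no Crawl-delay applies to the agent.
delay = parser.crawl_delay("ExampleBot")
print(delay)
```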

Access restrictions

If you want the page to be accessible to some users but not search engines, then what you probably want is one of these three options:

- Some kind of login system

- HTTP authentication (where a password is required for access)

- IP whitelisting (which only allows specific IP addresses to access the pages)

This type of setup is best for things like internal networks, member-only content, or staging, test, and development sites. It lets a group of users access the pages, while search engines can’t access them and won’t index them.
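As a rough illustration of the third option, here’s how an IP allowlist check could look in Python using the standard `ipaddress` module. The networks below are reserved documentation ranges, not real addresses, and in practice this check usually lives in your web server or firewall configuration rather than in application code.

```python
import ipaddress

# Hypothetical allowlist: documentation-only ranges used as placeholders.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),   # e.g. an office network
    ipaddress.ip_network("198.51.100.7/32"),  # e.g. a single VPN exit address
]


def is_allowed(client_ip: str) -> bool:
    """Return True if client_ip falls inside any allowlisted network."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in ALLOWED_NETWORKS)


print(is_allowed("203.0.113.42"))
print(is_allowed("192.0.2.1"))
```

Any request from an address outside the list, including search engine crawlers, would be refused.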

How to see crawl activity

For Google specifically, the easiest way to see what it’s crawling is with the “Crawl stats” report in Google Search Console, which gives you more information about how it’s crawling your website.

If you want to see all crawl activity on your website, then you will need to access your server logs and possibly use a tool to better analyze the data. This can get fairly advanced. But if your hosting has a control panel like cPanel, you should have access to raw logs and some aggregators like AWStats and Webalizer.
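If you do end up with raw logs, a few lines of Python can give you a first look. This is a minimal sketch that assumes the common “combined” access log format; the sample lines are invented, and a user agent string can be spoofed, so treat the counts as requests that claim to be Googlebot.

```python
import re
from collections import Counter

# Matches the "combined" access log format (an assumption; check your
# server's actual log format before relying on this pattern).
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"$'
)


def googlebot_hits(lines):
    """Count requests per path where the user agent claims to be Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line.strip())
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


# Invented sample data for illustration only.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Oct/2022:13:55:36 +0000] "GET /blog/ HTTP/1.1" 200 2326 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2022:14:02:10 +0000] "GET /blog/ HTTP/1.1" 200 2326 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2022:14:05:44 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{GOOGLEBOT_UA}"',
    '192.0.2.10 - - [10/Oct/2022:14:06:01 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0 (X11; Linux x86_64)"',
]

for path, count in googlebot_hits(SAMPLE_LOG).most_common():
    print(path, count)
```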

Crawl adjustments

Each website is going to have a different crawl budget, which is a combination of how often Google wants to crawl a site and how much crawling your site allows. More popular pages and pages that change often will be crawled more often, and pages that don’t seem to be popular or well linked will be crawled less often.

If crawlers see signs of stress while crawling your website, such as slow responses or server errors, they’ll typically slow down or even stop crawling until conditions improve.

After pages are crawled, they’re rendered and sent to the index. The index is the master list of pages that can be returned for search queries. Let’s talk about the index.

In this chapter, we’ll talk about how to make sure your pages are indexed and check how they’re indexed.

Robots directives

A robots meta tag is an HTML snippet that tells search engines how to crawl or index a certain page. It’s placed into the <head> section of a webpage and looks like this:

<meta name="robots" content="noindex" />

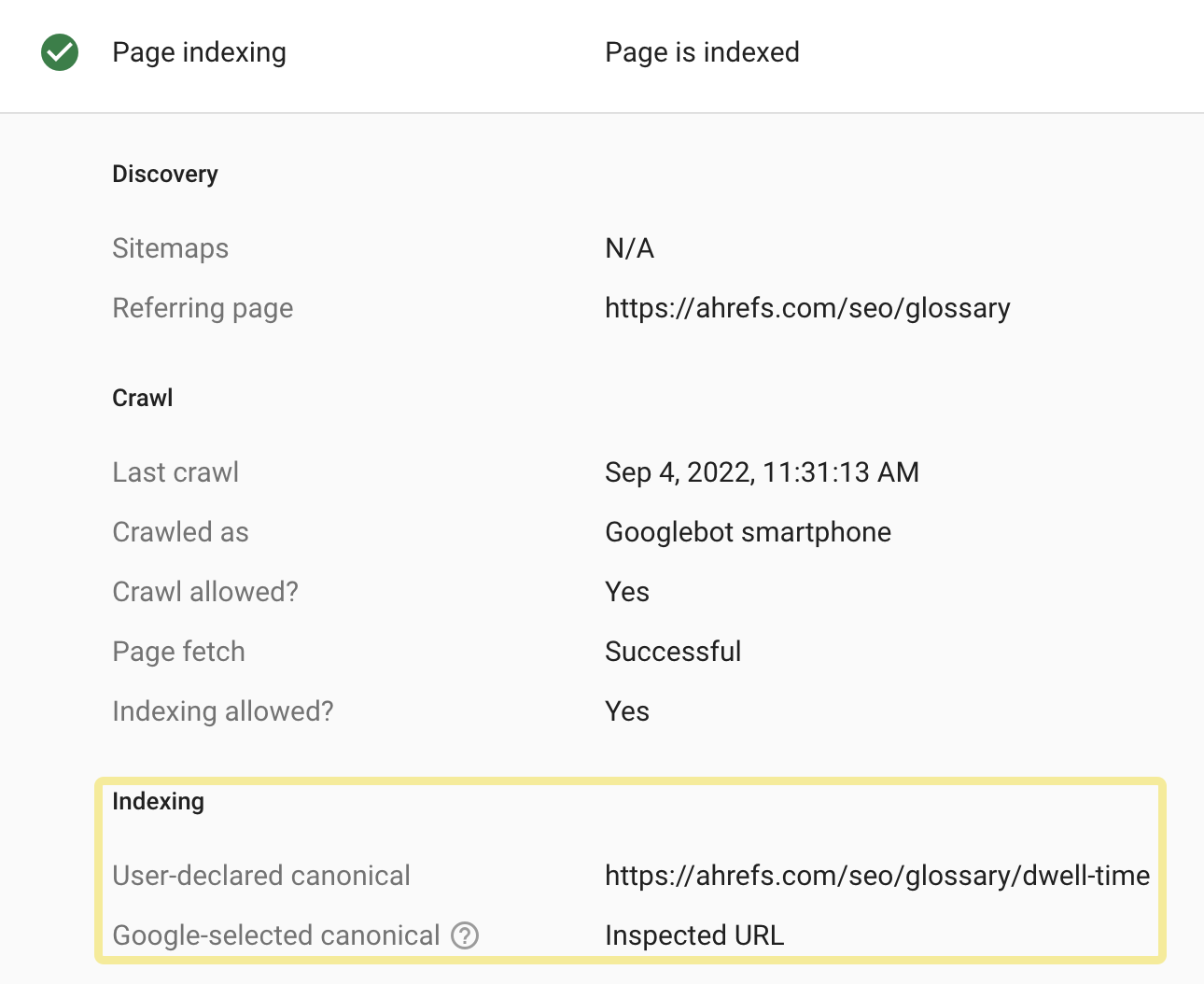
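If you want to check which directives a page declares, here’s a minimal sketch using Python’s built-in `html.parser`. The `RobotsMetaParser` class and `robots_directives` function are names I made up for this example, and it only looks at the generic `robots` meta tag, not crawler-specific tags or HTTP headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() != "robots":
            return
        content = attributes.get("content") or ""
        # Directives are comma-separated, e.g. "noindex, nofollow".
        self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]


def robots_directives(html: str) -> list:
    """Return the robots directives declared in the given HTML."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


page = '<html><head><meta name="robots" content="noindex" /></head><body></body></html>'
print(robots_directives(page))
```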

Canonicalization

When there are multiple versions of the same page, Google will select one to store in its index. This process is called canonicalization, and the URL selected as the canonical will be the one Google shows in search results. Google uses many different signals to select the canonical URL.

The easiest way to see how Google has indexed a page is to use the URL Inspection tool in Google Search Console. It will show you the Google-selected canonical URL.

One of the hardest things for SEOs is prioritization. There are a lot of best practices, but some changes will have more of an impact on your rankings and traffic than others. Here are some of the projects I’d recommend prioritizing.

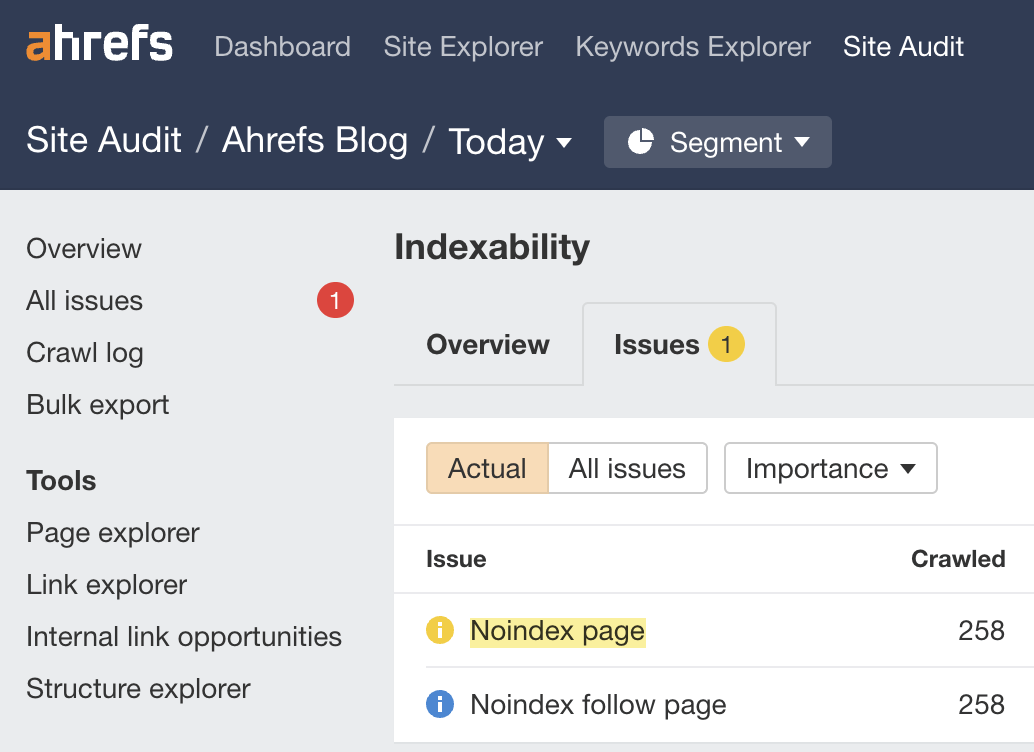

Check indexing

Make sure pages you want people to find can be indexed in Google. The two previous chapters were all about crawling and indexing, and that was no accident.

You can check the Indexability report in Site Audit to find pages that can’t be indexed and the reasons why. It’s free in Ahrefs Webmaster Tools.

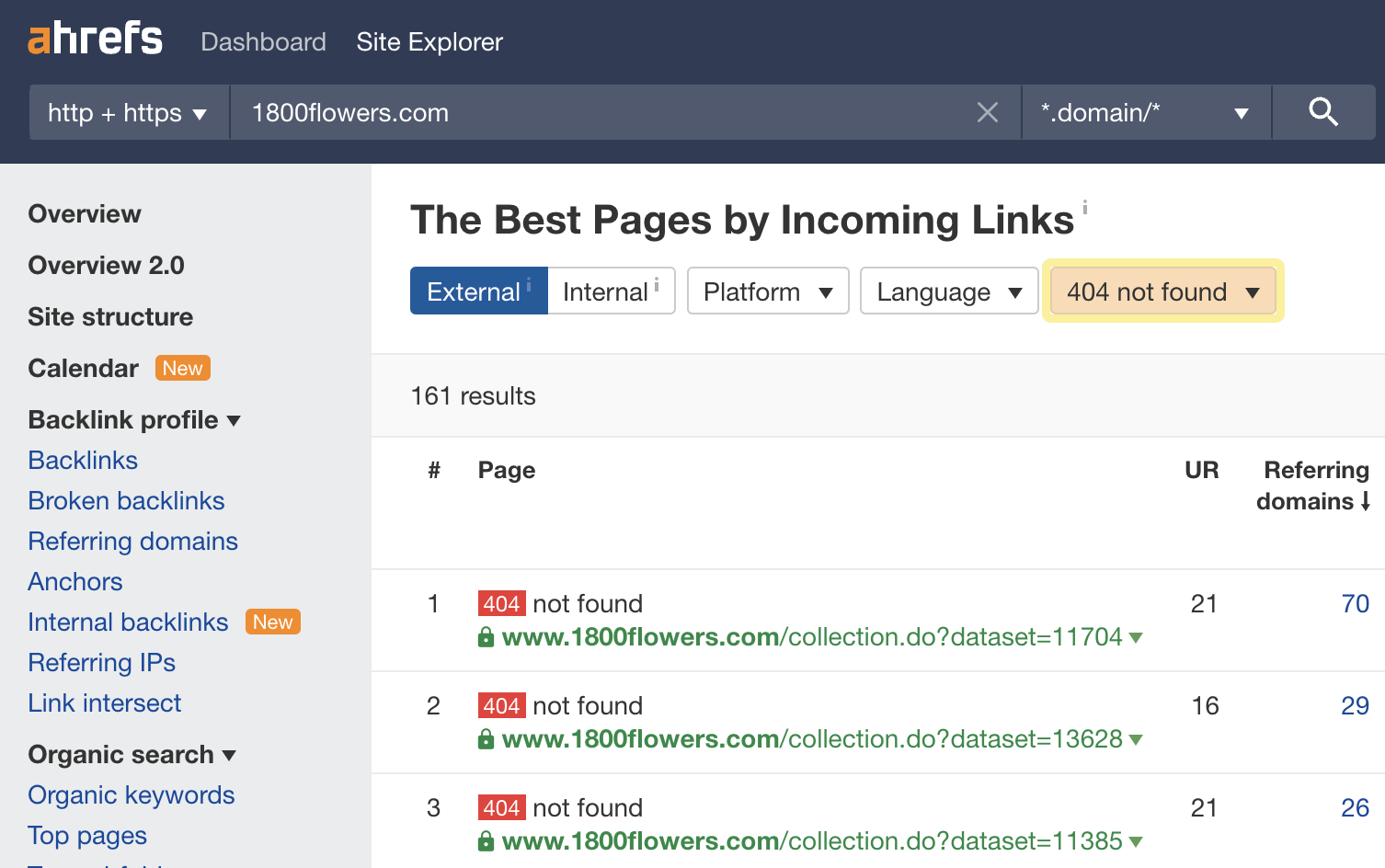

Reclaim lost links

Websites tend to change their URLs over the years. In many cases, these old URLs have links from other websites. If they’re not redirected to the current pages, then those links are lost and no longer count for your pages. It’s not too late to do these redirects, and you can quickly reclaim any lost value. Think of this as the fastest link building you will ever do.

You can find opportunities to reclaim lost links using Ahrefs’ Site Explorer. Enter your domain, go to the Best by Links report, and add a “404 not found” HTTP response filter. I usually sort this by “Referring Domains”.

This is what it looks like for 1800flowers.com:

Looking at the first URL in archive.org, I see that this was previously the Mother’s Day page. By redirecting that one page to the current version, you’ll reclaim 225 links from 59 different websites—and there are plenty more opportunities.

You’ll want to 301 redirect any old URLs to their current locations to reclaim this lost value.

A 301 redirect is a permanent redirect. Any links pointing to the redirected URL will count toward the new URL in Google’s eyes.[3]
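Here’s a simplified sketch of what a redirect map does. The paths are hypothetical, and real sites usually configure redirects in the web server or CMS rather than in a Python function, but the logic is the same: an old URL answers with a 301 status and the new location.

```python
from http import HTTPStatus

# Hypothetical pages that currently exist on the site.
LIVE_PAGES = {"/", "/mothers-day"}

# Hypothetical map of retired URLs to their current locations.
REDIRECTS = {
    "/mothers-day-2019": "/mothers-day",
    "/holiday/mothers-day.html": "/mothers-day",
}


def respond(path: str):
    """Return (status, location) for a requested path."""
    if path in LIVE_PAGES:
        return HTTPStatus.OK, None
    if path in REDIRECTS:
        # Permanent redirect: links to the old URL count toward the new one.
        return HTTPStatus.MOVED_PERMANENTLY, REDIRECTS[path]
    return HTTPStatus.NOT_FOUND, None


for requested in ("/mothers-day-2019", "/mothers-day", "/unknown"):
    status, location = respond(requested)
    print(requested, int(status), location)
```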

Add internal links

Internal links are links from one page on your site to another page on your site. They help your pages be found and also help the pages rank better. We have a tool within Site Audit called Internal Link Opportunities that helps you quickly locate these opportunities.

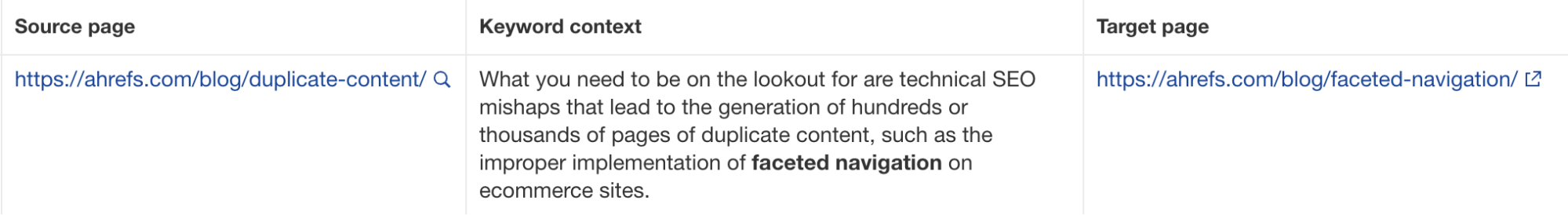

This tool works by looking for mentions of keywords that you already rank for on your site. Then it suggests them as contextual internal link opportunities.

For example, the tool shows a mention of “faceted navigation” in our guide to duplicate content. As Site Audit knows we have a page about faceted navigation, it suggests we add an internal link to that page.
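The general idea can be sketched in a few lines of Python. To be clear, this is not how Site Audit is implemented. It’s a toy version that assumes you already have the text of each page and a list of keywords mapped to the page that ranks for them, and it doesn’t check whether a link already exists. All URLs and text below are invented.

```python
def link_opportunities(pages: dict, keyword_targets: dict) -> list:
    """Suggest (source_url, keyword, target_url) internal links.

    pages maps a URL to its text; keyword_targets maps a keyword to the
    URL that should receive links for that keyword.
    """
    suggestions = []
    for source_url, text in pages.items():
        lowered = text.lower()
        for keyword, target_url in keyword_targets.items():
            # Skip the target page itself; it shouldn't link to itself.
            if source_url != target_url and keyword.lower() in lowered:
                suggestions.append((source_url, keyword, target_url))
    return suggestions


# Invented sample data for illustration only.
PAGES = {
    "/duplicate-content": "Duplicate content is often caused by faceted navigation.",
    "/faceted-navigation": "Faceted navigation lets users filter product lists.",
    "/robots-txt": "A robots.txt file tells crawlers where they can go.",
}
KEYWORD_TARGETS = {"faceted navigation": "/faceted-navigation"}

print(link_opportunities(PAGES, KEYWORD_TARGETS))
```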

Add schema markup

Schema markup is code that helps search engines understand your content better and powers many features that can help your website stand out from the rest in search results. Google has a search gallery that shows the various search features and the schema needed for your site to be eligible.
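Schema markup is commonly added as a JSON-LD script in the page’s HTML. Here’s a minimal sketch that builds one for an article using the schema.org `Article` type. The `article_json_ld` function name and the sample values are made up, and the properties a given search feature needs vary, so check the requirements for the feature you’re targeting.

```python
import json


def article_json_ld(headline: str, author_name: str, date_published: str) -> str:
    """Return a JSON-LD <script> element describing an article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
    }
    # Escape "<" so a value can never close the script element early.
    payload = json.dumps(data).replace("<", "\\u003c")
    return '<script type="application/ld+json">' + payload + "</script>"


# Hypothetical values for illustration only.
print(article_json_ld("An Example Article", "Jane Doe", "2022-09-09"))
```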

The projects we’ll talk about in this chapter are all good things to focus on, but they may require more work and have less benefit than the “quick win” projects from the previous part. That doesn’t mean you shouldn’t do them. This is just to help you get an idea of how to prioritize various projects.

Page experience signals

These are lesser ranking factors, but still things you want to look at for the sake of your users. They cover aspects of the website that impact user experience (UX).

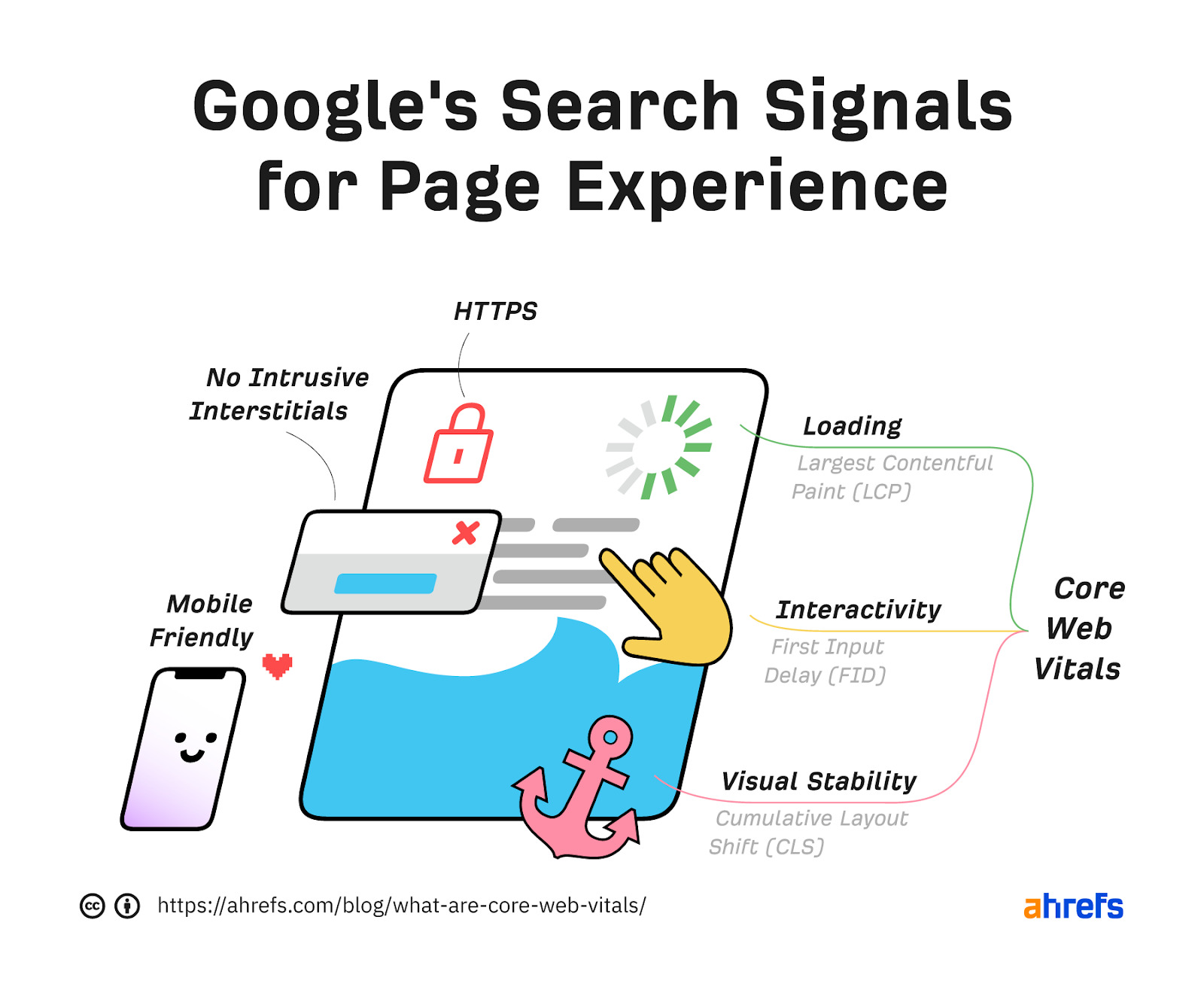

Core Web Vitals

Core Web Vitals are the speed metrics that are part of Google’s Page Experience signals used to measure user experience. The metrics measure visual load with Largest Contentful Paint (LCP), visual stability with Cumulative Layout Shift (CLS), and interactivity with Interaction to Next Paint (INP), which replaced First Input Delay (FID) as a Core Web Vital in March 2024.
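Google publishes “good” and “poor” thresholds for each of these metrics. The sketch below rates a measurement against those thresholds. The `rate` function is my own helper, and the measurements passed to it are invented.

```python
# (good, poor) boundaries per metric. Values at or below "good" pass;
# values above "poor" fail; anything in between needs improvement.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "CLS": (0.1, 0.25),  # unitless layout shift score
    "FID": (100, 300),   # milliseconds
    "INP": (200, 500),   # milliseconds; replaced FID in March 2024
}


def rate(metric: str, value: float) -> str:
    """Classify a measurement as good, needs improvement, or poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


# Invented measurements for illustration only.
for metric, value in (("LCP", 2.1), ("CLS", 0.3), ("FID", 150)):
    print(metric, value, rate(metric, value))
```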

HTTPS

HTTPS protects the communication between your browser and server from being intercepted and tampered with by attackers. This provides confidentiality, integrity, and authentication to the vast majority of today’s WWW traffic. You want your pages loaded over HTTPS and not HTTP.

Any website that shows a “lock” icon in the address bar is using HTTPS.

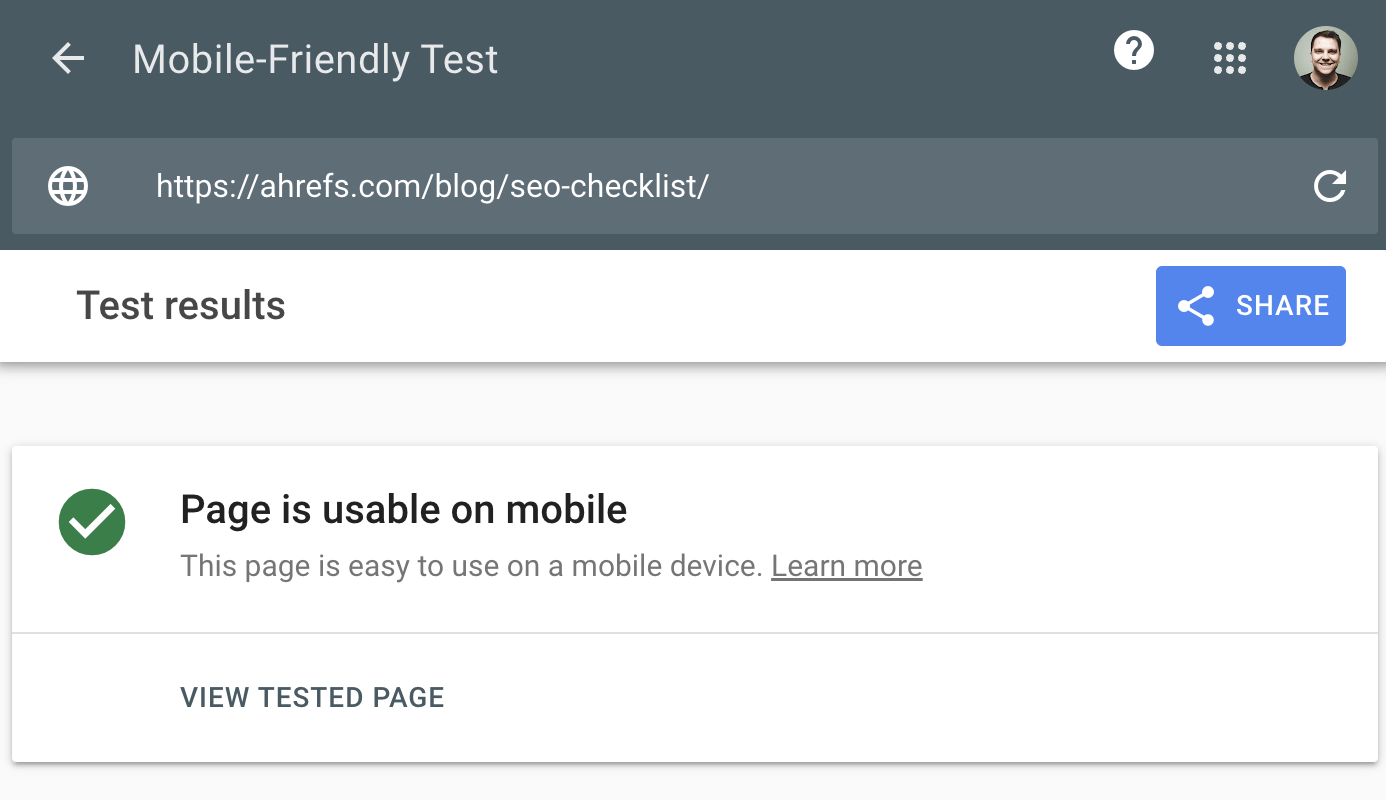

Mobile-friendliness

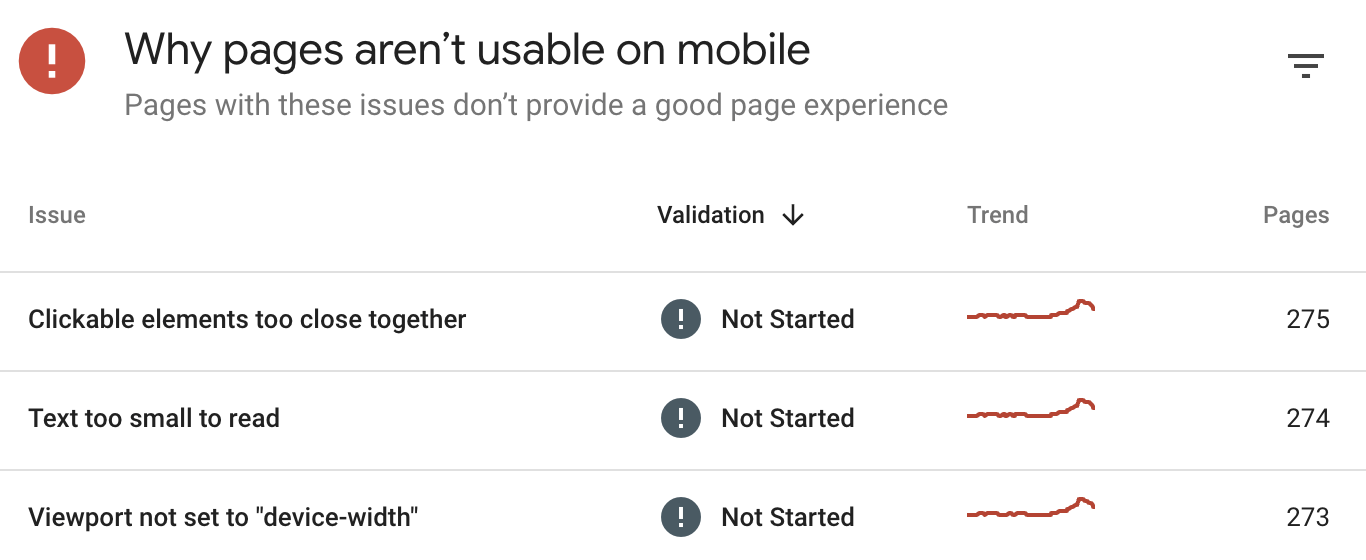

Simply put, this checks if webpages display properly and are easily used by people on mobile devices.

How do you know how mobile-friendly your site is? Check the “Mobile Usability” report in Google Search Console.

This report tells you if any of your pages have mobile-friendliness issues.

Interstitials

Interstitials block content from being seen. These are popups that cover the main content and that users may have to interact with before they go away.

Hreflang — For multiple languages

Hreflang is an HTML attribute used to specify the language and geographical targeting of a webpage. If you have multiple versions of the same page in different languages, you can use hreflang annotations to tell search engines like Google about these variations. This helps them serve the correct version to their users.
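In HTML, hreflang is typically set on `<link rel="alternate">` elements in the page’s head, with each language version listing all of the versions. Here’s a minimal sketch that generates those elements. The `hreflang_links` function name and the example.com URLs are hypothetical.

```python
from html import escape


def hreflang_links(variants: dict) -> list:
    """Build <link rel="alternate"> elements from {language_code: url}."""
    return [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in variants.items()
    ]


# Hypothetical language versions of the same page.
VARIANTS = {
    "en": "https://example.com/en/page",
    "de": "https://example.com/de/page",
    "x-default": "https://example.com/page",
}

for link in hreflang_links(VARIANTS):
    print(link)
```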

General maintenance/website health

These tasks aren’t likely to have much impact on your rankings but are generally good things to fix for user experience.

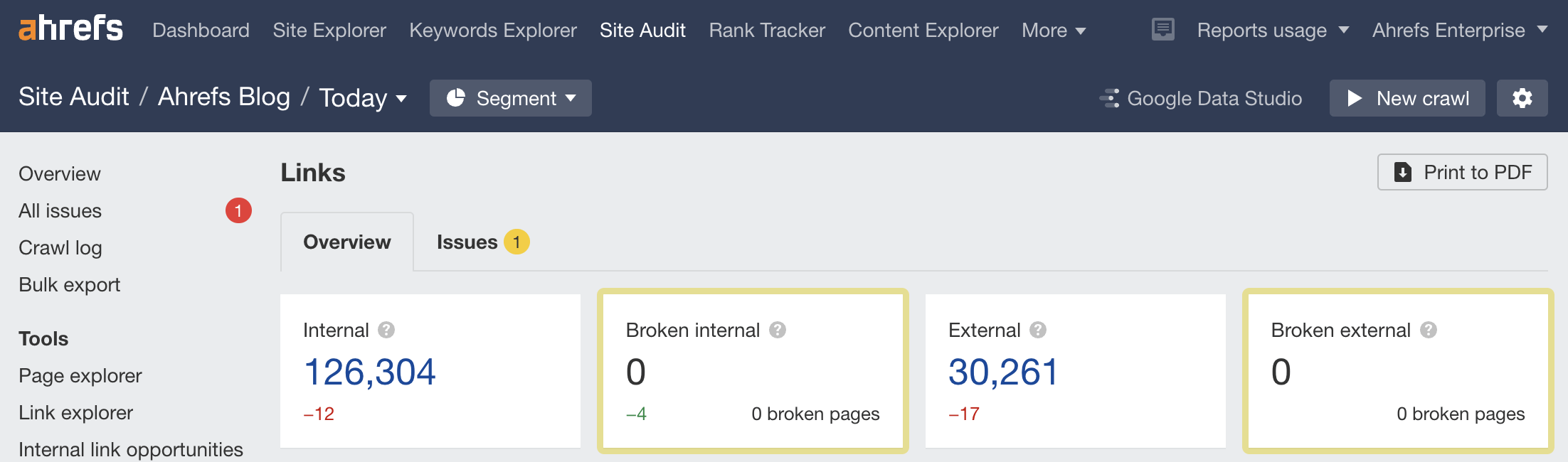

Broken links

Broken links are links on your site that point to non-existent resources. These can be either internal (i.e., to other pages on your domain) or external (i.e., to pages on other domains).
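The internal versus external distinction is easy to express in code. This sketch assumes you’ve already collected each link on a page along with the HTTP status its target returned (the sample data is invented), and it treats any 4xx or 5xx status as broken.

```python
from urllib.parse import urljoin, urlparse


def classify_broken_links(page_url: str, links_with_status: list) -> dict:
    """Group broken links found on page_url into internal and external."""
    site_host = urlparse(page_url).netloc
    broken = {"internal": [], "external": []}
    for href, status in links_with_status:
        if status < 400:
            continue  # the target responded normally
        absolute = urljoin(page_url, href)  # resolve relative links
        kind = "internal" if urlparse(absolute).netloc == site_host else "external"
        broken[kind].append(absolute)
    return broken


# Invented sample data: (href, status returned by the link target).
LINKS = [
    ("/old-page", 404),
    ("https://other.example/gone", 410),
    ("/about", 200),
]

print(classify_broken_links("https://example.com/blog/", LINKS))
```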

You can find broken links on your website quickly with Site Audit in the Links report. It’s free in Ahrefs Webmaster Tools.

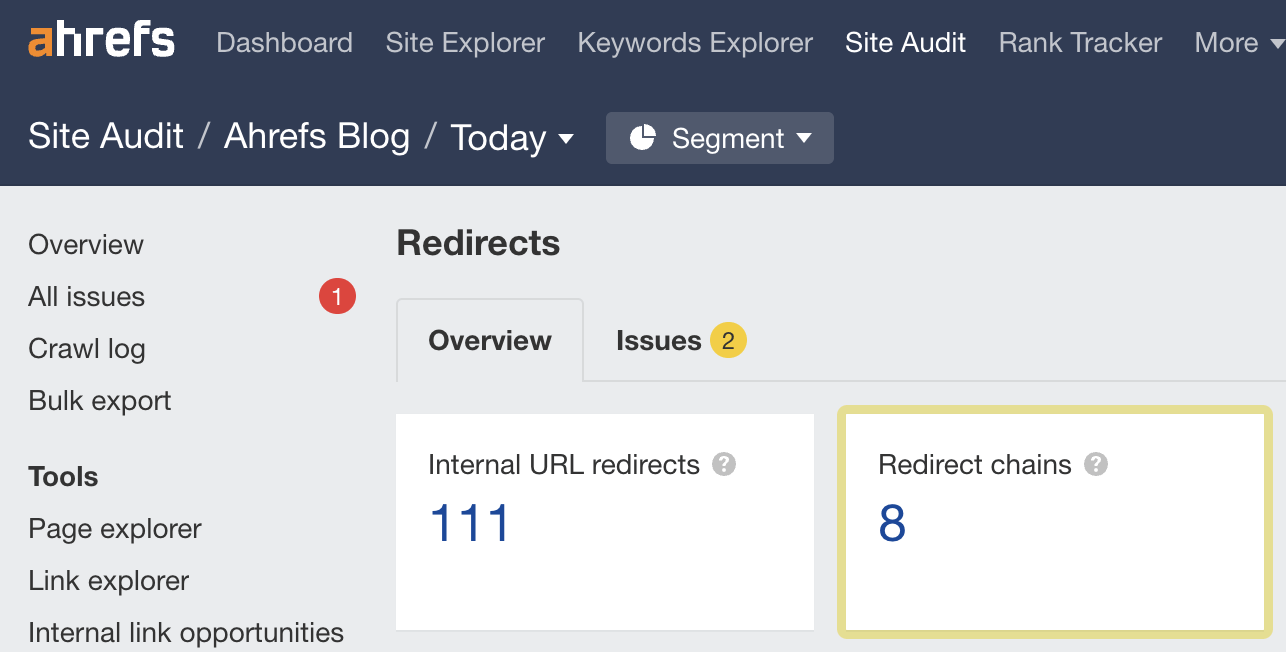

Redirect chains

Redirect chains are a series of redirects that happen between the initial URL and the destination URL.
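Here’s a small sketch of how you could trace a chain from a map of redirects. The map is hypothetical and stands in for what you’d get from crawling your site. The function also stops if it revisits a URL, which indicates a redirect loop.

```python
def redirect_chain(start: str, redirects: dict, max_hops: int = 10) -> list:
    """Follow redirects from start and return every URL visited in order."""
    chain = [start]
    seen = {start}
    while chain[-1] in redirects and len(chain) <= max_hops:
        next_url = redirects[chain[-1]]
        chain.append(next_url)
        if next_url in seen:
            break  # redirect loop: this URL was already visited
        seen.add(next_url)
    return chain


# Hypothetical redirect map: each key redirects to its value.
REDIRECTS = {
    "/page-v1": "/page-v2",
    "/page-v2": "/page-v3",
    "/loop-a": "/loop-b",
    "/loop-b": "/loop-a",
}

chain = redirect_chain("/page-v1", REDIRECTS)
print(chain, "hops:", len(chain) - 1)
```

A chain with more than one hop, like the one from `/page-v1`, can be shortened by pointing the first URL straight at the final destination.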

You can find redirect chains on your website quickly with Site Audit in the Redirects report. It’s free in Ahrefs Webmaster Tools.

These tools help you improve the technical aspects of your website.

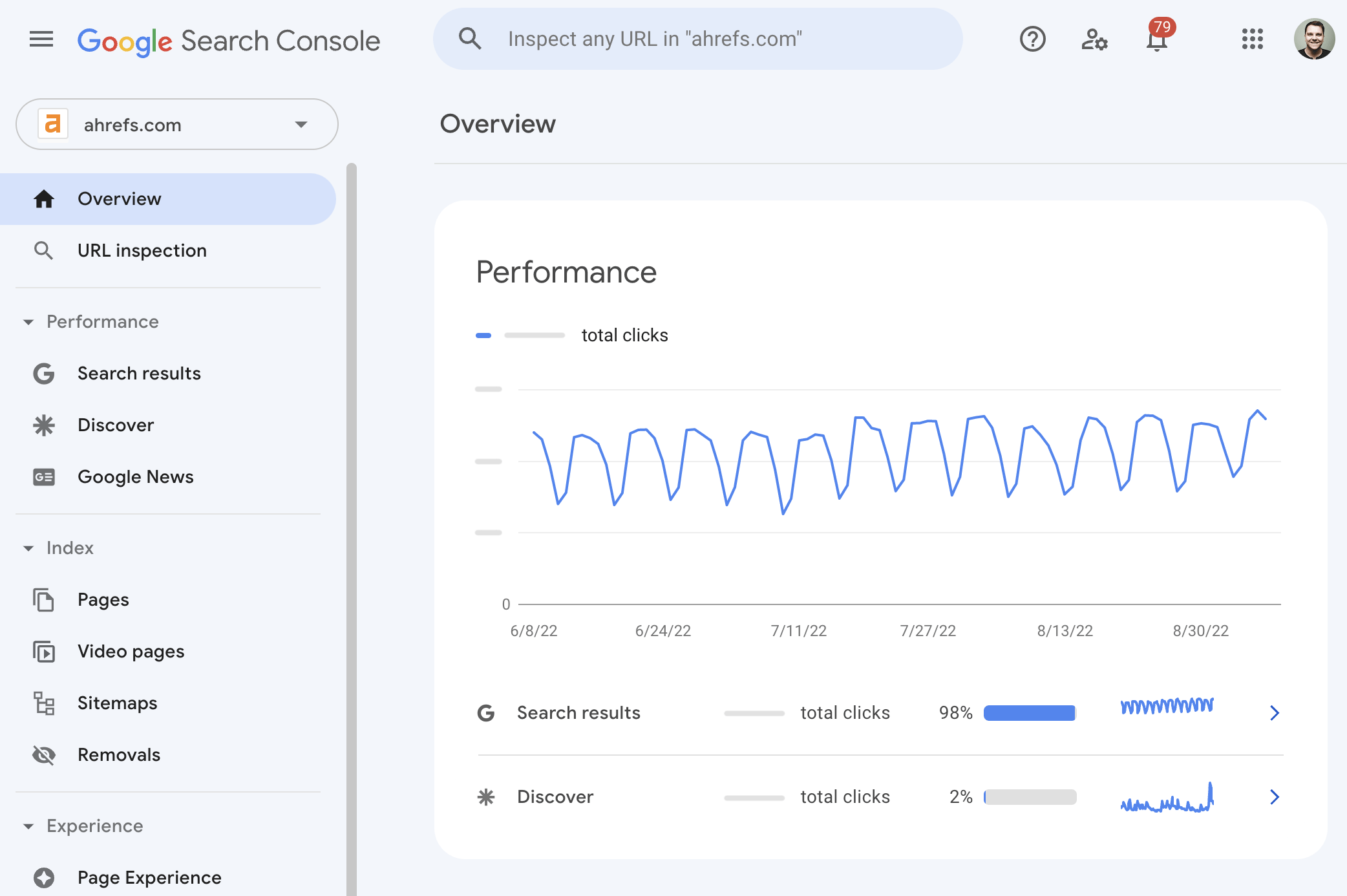

Google Search Console

Google Search Console (previously Google Webmaster Tools) is a free service from Google that helps you monitor and troubleshoot your website’s appearance in its search results.

Use it to find and fix technical errors, submit sitemaps, see structured data issues, and more.

Bing and Yandex have their own versions, and so does Ahrefs. Ahrefs Webmaster Tools is a free tool that’ll help you improve your website’s SEO performance. It allows you to:

- Monitor your website’s SEO health.

- Check for 100+ SEO issues.

- View all your backlinks.

- See all the keywords you rank for.

- Find out how much traffic your pages are receiving.

- Find internal linking opportunities.

It’s our answer to the limitations of Google Search Console.

Google’s Mobile-Friendly Test

Google’s Mobile-Friendly Test checks how easily a visitor can use your page on a mobile device. It also identifies specific mobile-usability issues like text that’s too small to read, the use of incompatible plugins, and so on.

The Mobile-Friendly Test shows what Google sees when it crawls the page. You can also use the Rich Results Test to see the content Google sees for desktop or mobile devices.

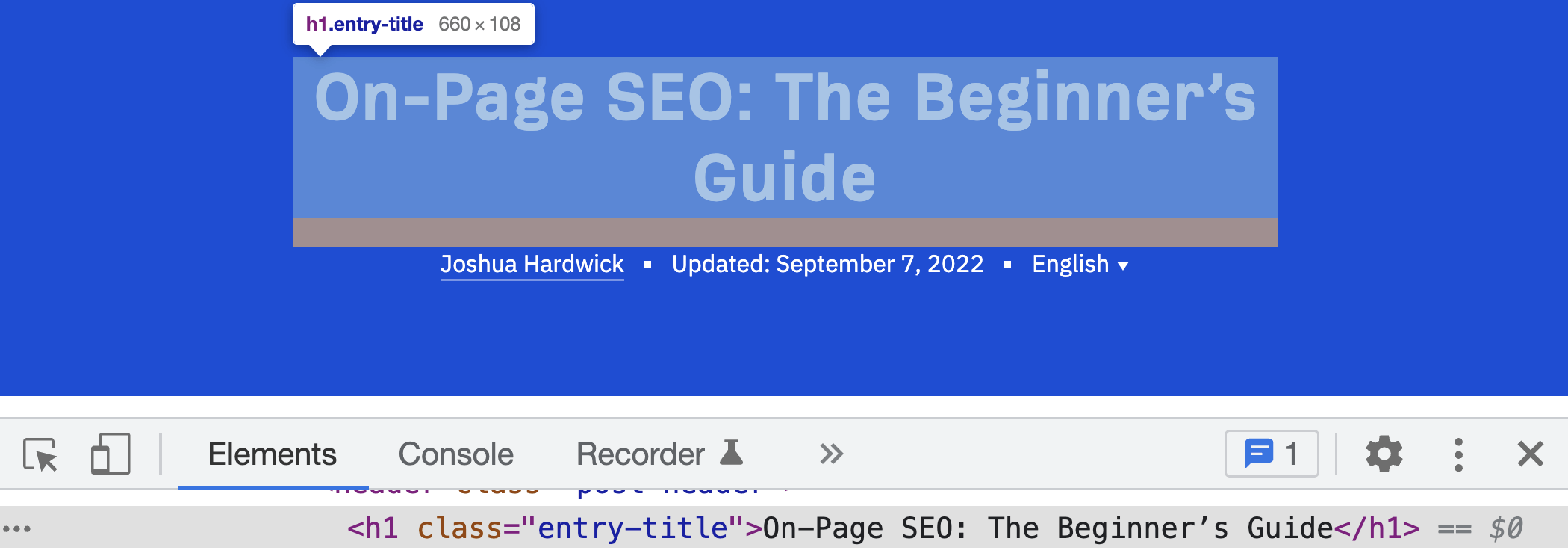

Chrome DevTools

Chrome DevTools is Chrome’s built-in webpage debugging tool. Use it to debug page speed issues, improve webpage rendering performance, and more.

From a technical SEO standpoint, it has endless uses.

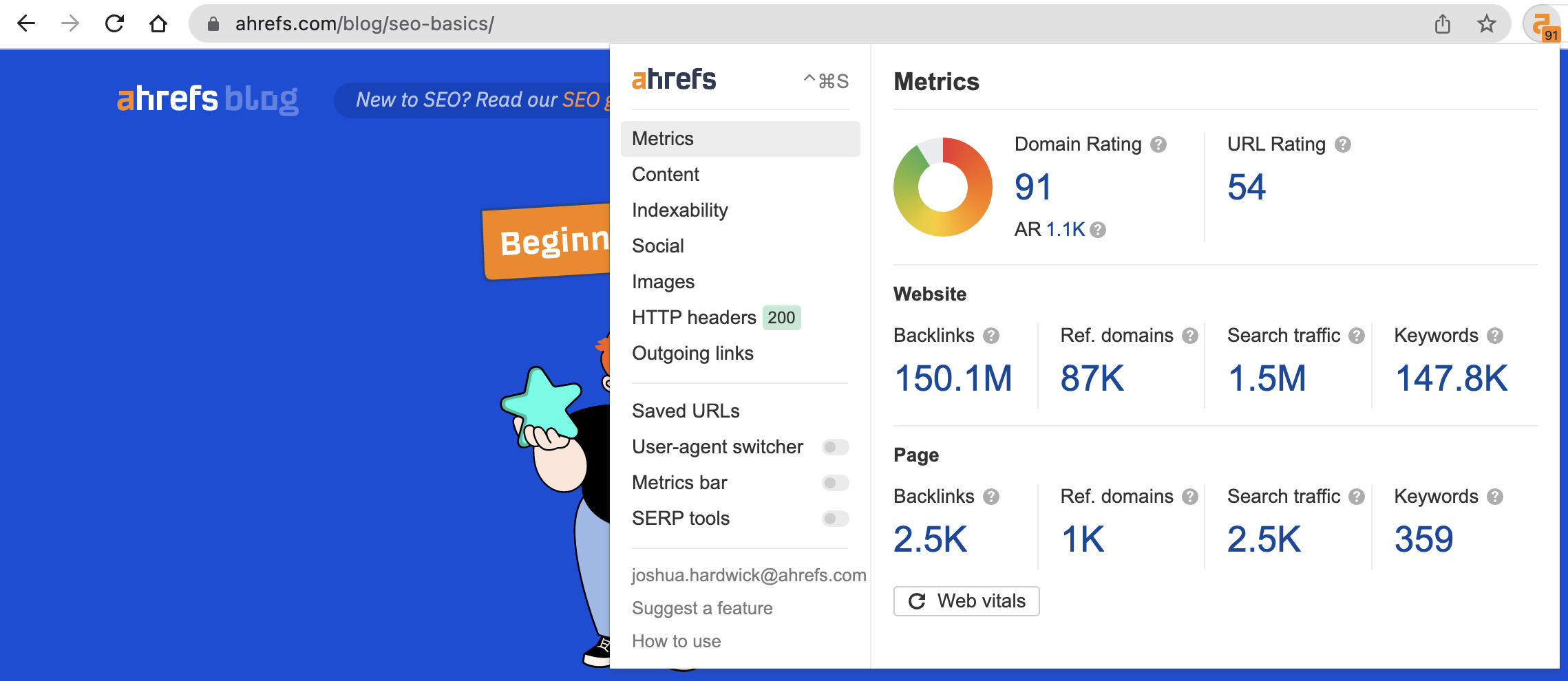

Ahrefs’ SEO Toolbar

Ahrefs’ SEO Toolbar is a free extension for Chrome and Firefox that provides useful SEO data about the pages and websites you visit.

Its free features are:

- On-page SEO report

- Redirect tracer with HTTP headers

- Broken link checker

- Link highlighter

- SERP positions

In addition, as an Ahrefs user, you get:

- SEO metrics for every site and page you visit and for Google search results

- Keyword metrics, such as search volume and Keyword Difficulty, directly in the SERP

- SERP results export

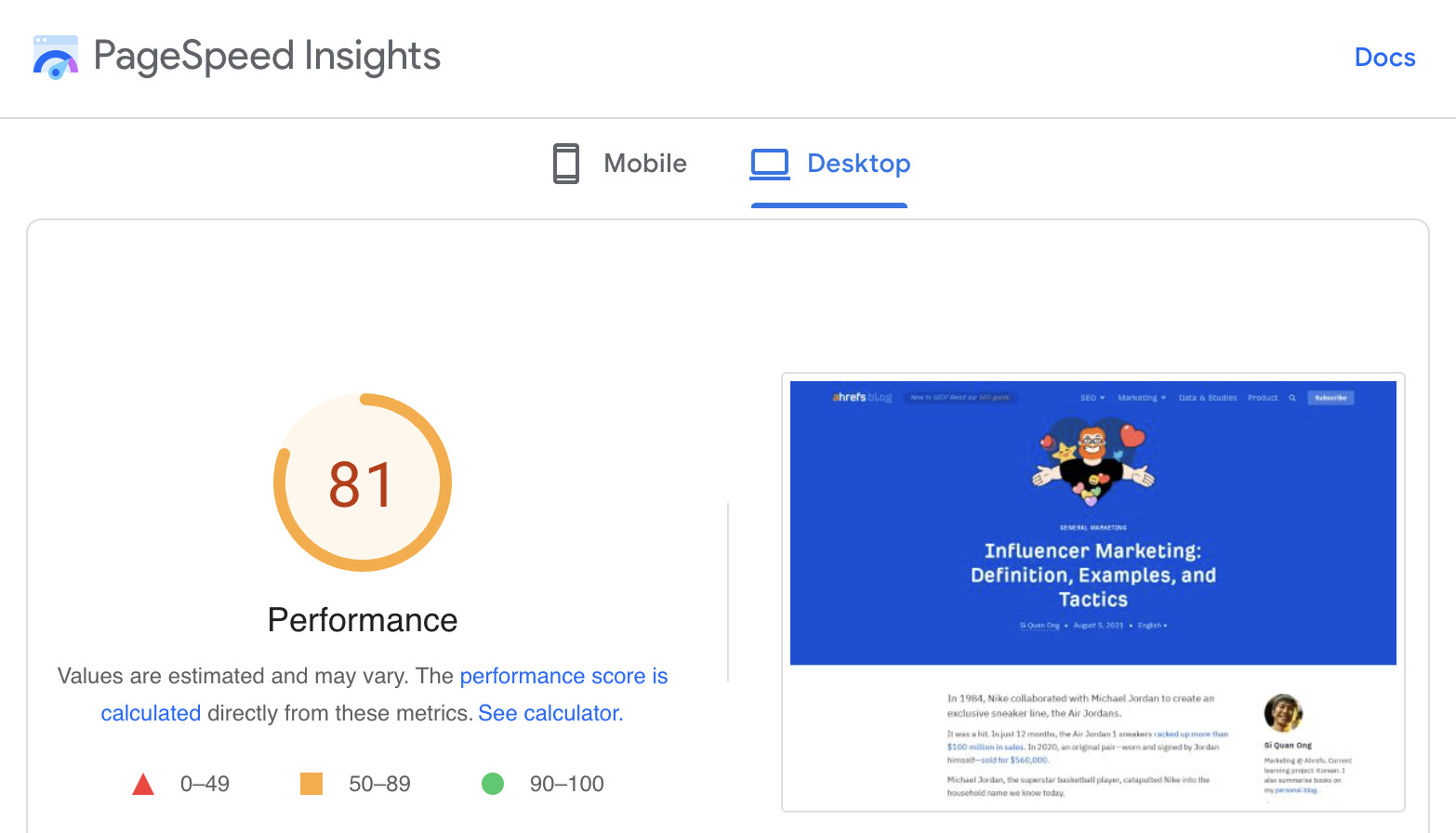

PageSpeed Insights

PageSpeed Insights analyzes the loading speed of your webpages. Alongside the performance score, it also shows actionable recommendations to make pages load faster.

Key takeaways

- If your content isn’t indexed, then it won’t be found in search engines.

- When something is broken that impacts search traffic, it can be a priority to fix. But for most sites, you’re probably better off spending time on your content and links.

- Many of the technical projects that have the most impact are around indexing or links.

References

1. “Is a crawl-delay rule ignored by Googlebot?”. Google Search Central. 21st December 2017

2. “Change Googlebot crawl rate”. Google. Retrieved 9th September 2022

3. “30x redirects don’t lose PageRank anymore”. Gary Illyes. 26th July 2016
