AI models draw on search engine indexes to enrich their static training data in a process known as retrieval augmented generation (RAG), so some crossover between AI and search results is to be expected.

But how much does your visibility in search align with your presence in AI?

With the help of Xibeijia Guan, I was able to study 1.9M citations from 1M AI Overviews to find out—analyzing the top 3 most visible citations in each response.

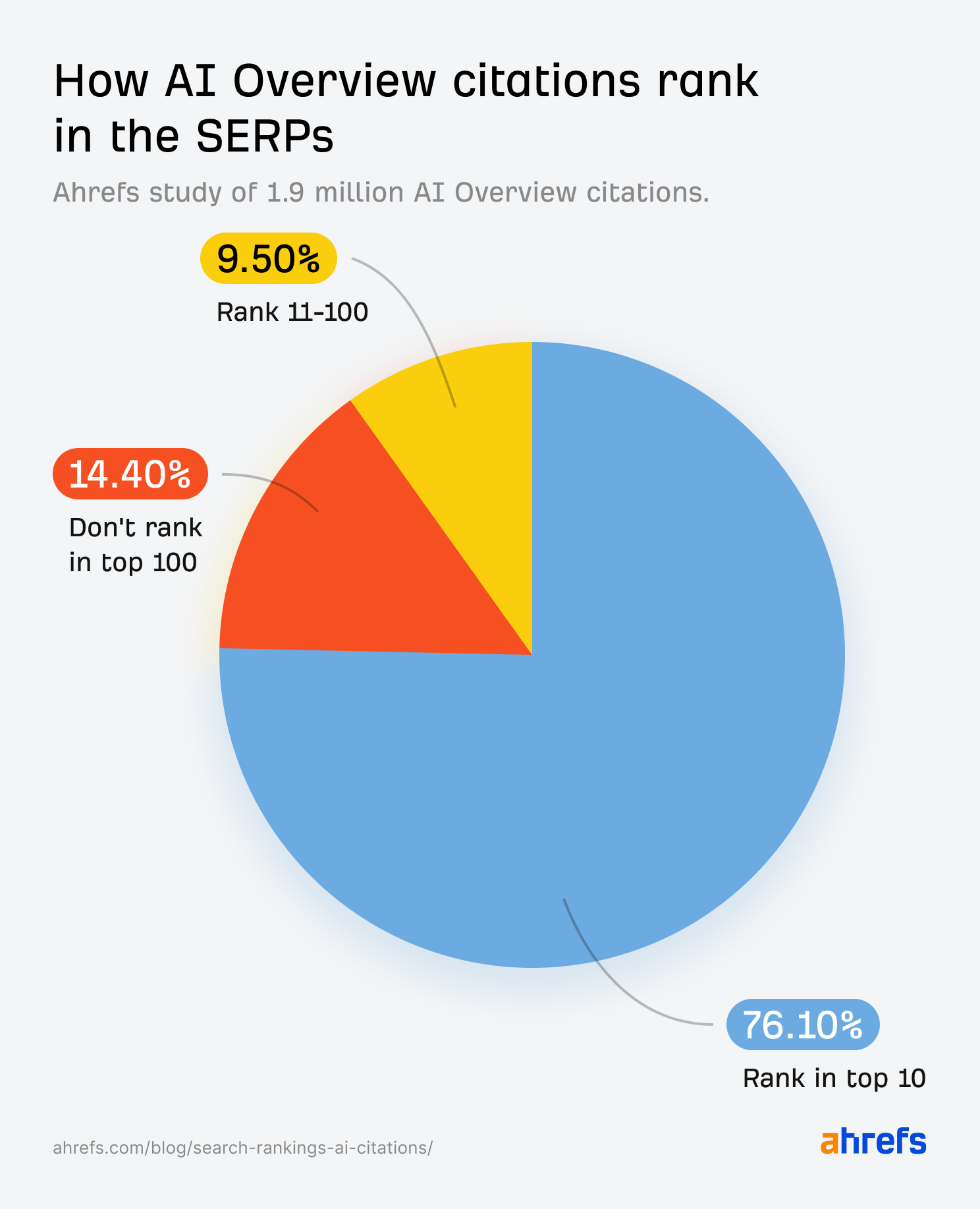

We discovered that:

- 76.10% of AI Overview-cited pages rank in the top 10

- 9.50% of AI Overview-cited pages rank in positions 11–100

- 14.40% of pages cited in AI Overviews do not rank in the SERPs (i.e. rank below position 100)

Put simply: there’s some major crossover between traditional search rankings and AI Overview citations.

Of the citations included in AI Overviews, 86% come from pages that can be found somewhere in the top 100 of Google—either in the top 10, or positions 11–100.
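For readers who want to run the same breakdown on their own citation data, here is a minimal sketch of the bucketing. The sample ranks and the `bucket` and `bucket_shares` helpers are hypothetical illustrations, not the study's actual pipeline; a rank of `None` is assumed to mean the page was not found in the top 100.

```python
from collections import Counter

BUCKETS = ("top 10", "11-100", "not in top 100")


def bucket(rank):
    """Map an organic rank to a bucket (None is assumed to mean 'not in the top 100')."""
    if rank is None or rank > 100:
        return "not in top 100"
    return "top 10" if rank <= 10 else "11-100"


def bucket_shares(ranks):
    """Return the percentage of cited pages that fall into each bucket."""
    counts = Counter(bucket(r) for r in ranks)
    total = len(ranks)
    return {name: 100 * counts[name] / total for name in BUCKETS}


# Hypothetical sample: organic ranks of ten cited pages.
sample_ranks = [1, 2, 3, 4, 7, 9, 10, 45, None, None]
shares = bucket_shares(sample_ranks)
print(shares)

# The 86% figure is the sum of the two ranking buckets reported in this study.
print(round(76.10 + 9.50))
```

The same two-step shape (assign a bucket, then count) works whether the ranks come from a spreadsheet export or an API response.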

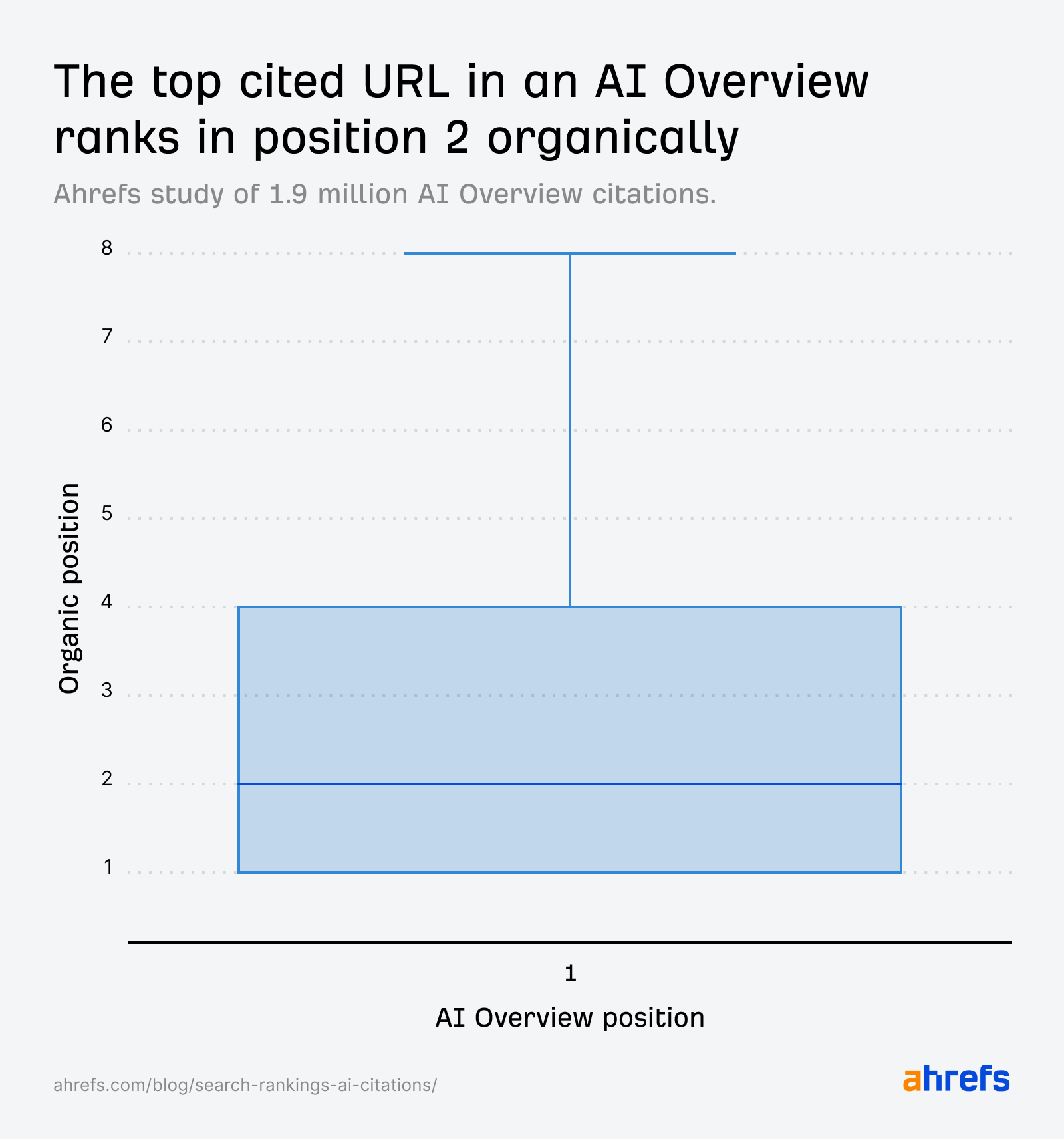

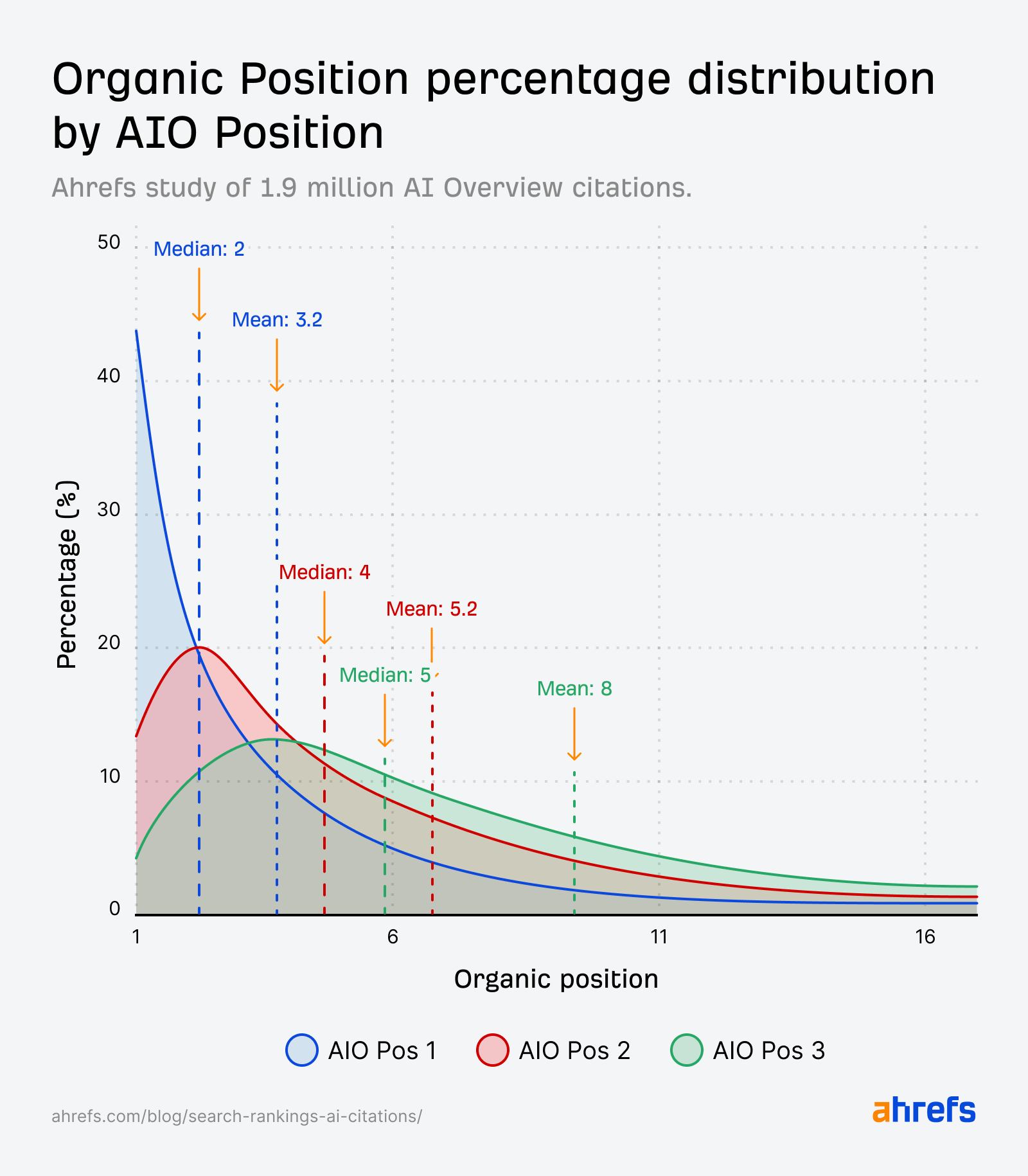

The three URLs cited in AI Overview responses show a median ranking of 3 in the SERPs.

The primary (top-cited) URL tends to rank slightly higher than that, with a median position of 2 in traditional search.

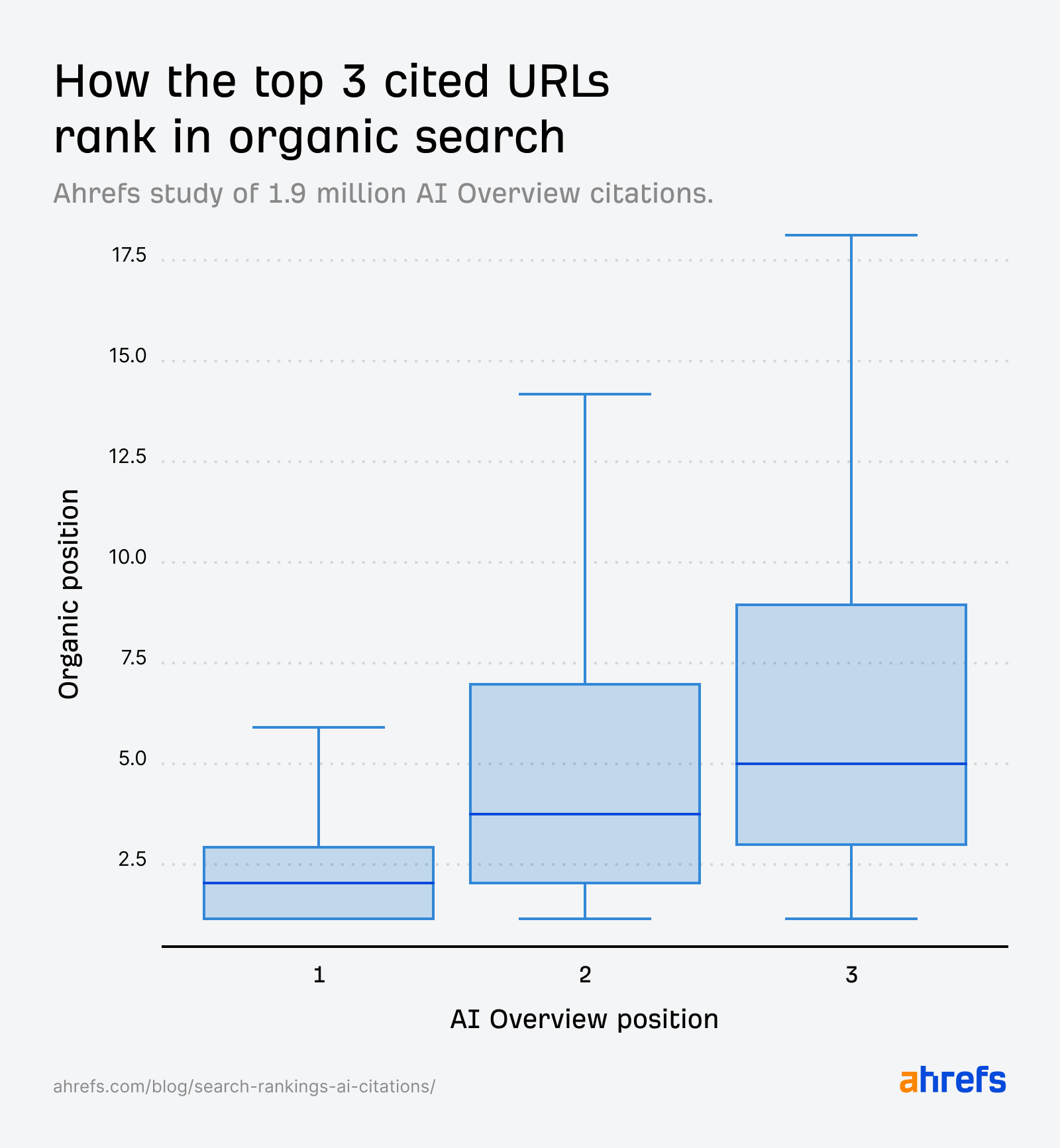

Subsequent AI Overview citations (i.e. links #2 and #3) also commonly rank in the top 10 search results.

Second-place AIO citations typically rank 4th in traditional search, while third-place citations rank 5th, based on median positions.

| AI Overview citation position | Traditional search (median) position |
|---|---|
| 1 | 2 |
| 2 | 4 |
| 3 | 5 |
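A table like the one above can be produced by grouping cited URLs by citation slot and taking the median organic rank of each group. The sketch below uses hypothetical sample rows and a hypothetical helper name. It also assumes that pages with no top-100 ranking are left out of the median, which the study does not specify.

```python
from collections import defaultdict
from statistics import median


def median_rank_by_citation_position(rows):
    """Group (citation_position, organic_rank) pairs and return the median rank per position.

    Assumption: rows with organic_rank None (page not ranking) are skipped.
    """
    grouped = defaultdict(list)
    for citation_position, organic_rank in rows:
        if organic_rank is not None:
            grouped[citation_position].append(organic_rank)
    return {pos: median(ranks) for pos, ranks in sorted(grouped.items())}


# Hypothetical sample: (citation position in the AI Overview, organic rank).
sample_rows = [
    (1, 1), (1, 2), (1, 6),
    (2, 3), (2, 4), (2, 12),
    (3, 2), (3, 5), (3, None), (3, 30),
]
medians = median_rank_by_citation_position(sample_rows)
print(medians)
```

The median is used rather than the mean because a handful of citations ranking deep in the results would otherwise pull the average down sharply.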

Here’s how that data looks visualized as a distribution graph…

Approximately 10% of AI Overview citations come from pages that rank below page one but still within the top 100 (positions 11–100).

This made us wonder: what causes Google to look past page one when citing content in AI?

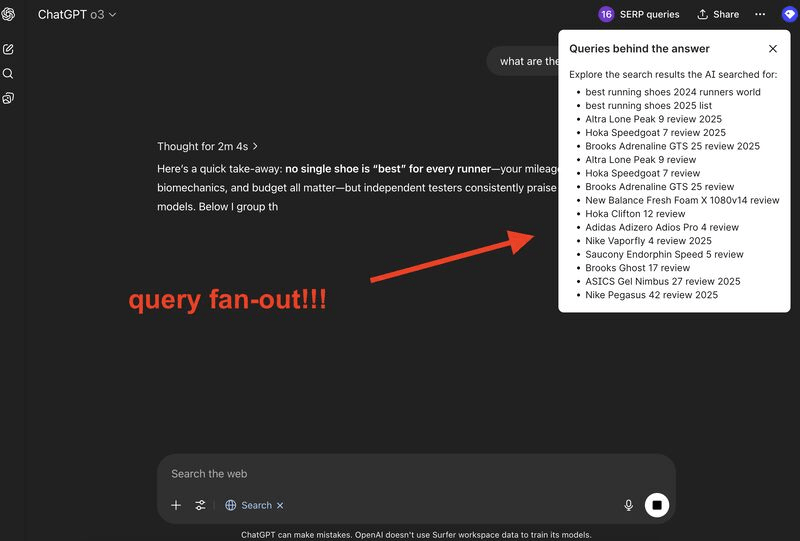

The fan-out query theory

AI is said to select sources by generating a set of “fan-out queries”: longer, more detailed queries on subtopics related to the user’s prompt.

Tom Niezgoda’s LinkedIn post showing Surfer’s query fan-out Chrome bookmarklet—the “Keyword Surfer” extension. This reveals the fan-out queries ChatGPT generates when processing responses to prompts.

We’d seen theories circulating that some lower ranking pages get cited because they better answer AI’s synthetic fan-out queries. We set out to test that.

What we found:

We expected that if lower-ranking content was cited through the query fan-out process, it would rank for more detailed (read: longer) queries and for lots of synonymous (read: more) keywords, given the long-tail nature of fan-out queries.

Instead, we found that pages cited beyond the top 10 actually appeared for fewer keywords, and returned for shorter queries.

| Average | Ranks in top 10 | Ranks position 11–100 |
|---|---|---|
| # of keywords | 1020 | 887 |
| length of keywords | 5.4 | 5.2 |
| length of “top keyword” | 8.5 | 7.7 |

This doesn’t neatly fit with our expected profile of fan-out query content.
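To put those gaps in relative terms, the short sketch below computes the percentage difference between the two columns of the table above. The figures are taken directly from the table; the `relative_change` helper is a hypothetical name used only for illustration.

```python
# Averages from the table: (ranks in top 10, ranks in positions 11-100).
averages = {
    "# of keywords": (1020, 887),
    "length of keywords": (5.4, 5.2),
    "length of top keyword": (8.5, 7.7),
}


def relative_change(baseline, value):
    """Percentage change of value relative to baseline."""
    return 100 * (value - baseline) / baseline


for metric, (top_10, beyond_top_10) in averages.items():
    change = relative_change(top_10, beyond_top_10)
    print(f"{metric}: {change:+.1f}%")
```

All three metrics come out lower for pages cited from beyond the top 10, which is the opposite of what the fan-out profile would predict.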

Content is cited in AI for a growing number of complex reasons, from its freshness to its alignment with specific user preferences—and even past prompts.

The ~10% of results that “rank beyond the top 10” likely reflect a combination of these factors, and possibly many more.

Wrapping up

While 14% of AI-cited content doesn’t rank in the top 100, this seems to be the exception rather than the rule.

AI Overview visibility and search visibility appear to overlap fairly significantly.

Meaning that, when you show up in search, you have a greater chance of also being cited in Google’s AI results.

That said, my colleague SQ just ran a study on the correlation between search ranking and AI Overview visibility.

The result was a positive yet moderate correlation, suggesting that while rankings do matter, other factors are at play.

It’s true that if you rank #1 in the SERPs, you’re more likely to be cited in an AI Overview than if you were ranked lower. But that chance is a coin flip at best.

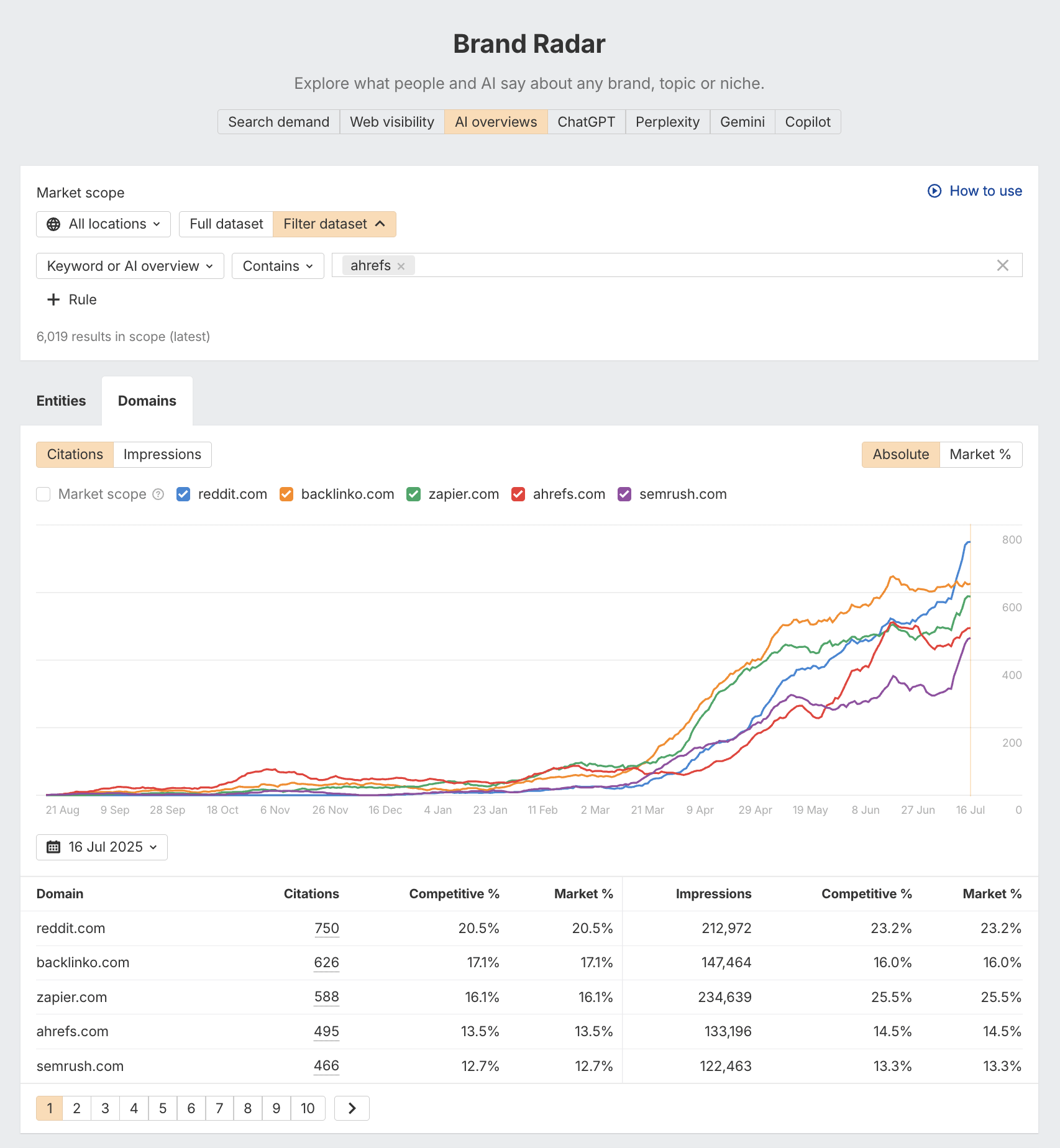

With those odds, it’s still important to monitor both your traditional rankings and AI citation performance to get the complete picture of your search visibility.

You can do this with Ahrefs. Head to the Organic Keywords report in Site Explorer to assess your rankings, and check out Ahrefs Brand Radar to view all of your AI Overview links.

For now it seems AI Overview citations are still largely driven by traditional search success, meaning the SEO fundamentals are still just as important.