Just last month, OpenAI CEO Sam Altman revealed that more than 800 million people use ChatGPT each week.

But what does it take to get mentioned and cited in its responses?

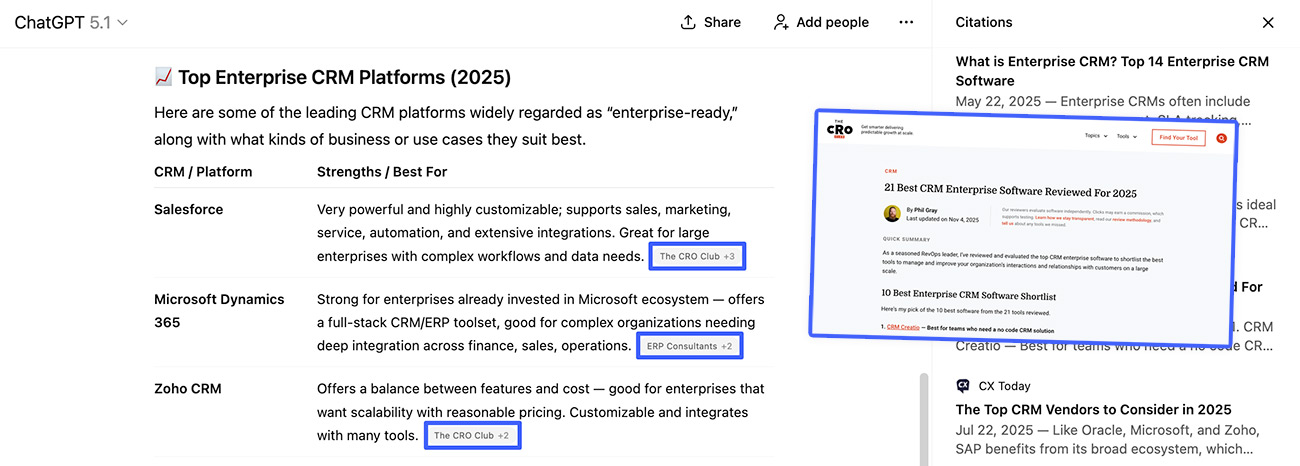

Our latest research into top-of-the-funnel queries shows that recently updated “best X” lists were the most prominent page type in ChatGPT sources, including those where recommended brands ranked themselves in first place.

There was also a correlation between a brand ranking highly in third-party lists and its likelihood of featuring in responses.

Does that conclusively mean you should be publishing these lists yourself? Before I share the approach we’re taking, let’s look at the data in full.

I analyzed ChatGPT responses across 750 top-of-the-funnel prompts in three categories: software, products, and agency recommendations (e.g., “the best web design agencies in London”).

While that number might not sound like much, it took dozens of hours to manually categorize responses to ensure accuracy.

What I was looking for: How often “best” lists show up as citations

For software, I was looking at prompts like “best crm software for enterprise” and “best project management software”.

For products, I analyzed prompts around queries such as “best entry-level DSLR” and “best home treadmill”.

In the agency category, I looked at terms related to agencies offering services covering SEO, social media, advertising, branding, general marketing, and web design.

In traditional SEO, these terms are estimated to be searched for hundreds of thousands of times per month.

That said, the terms people enter into Google are likely not a perfect representation of what they enter into a chat platform. Unfortunately, AI platforms don’t provide that data directly.

I’ve tested both simple and descriptive prompts in these industries and found little to no additional “drift” beyond what we see when tracking the same prompts over time. I’ve covered this in more detail in our supplemental doc below, and plan to share more research there in the near future.

It’s also likely that a notable percentage of recommendations are given as the result of a multi-query conversation, rather than a single prompt.

For this reason, I haven’t looked into the most recommended brands or the top-cited domains overall, but rather into the types of content sourced from those sites.

Another aim of this study is to set a benchmark for what we’re seeing across the board, which we can compare against and improve upon over time.

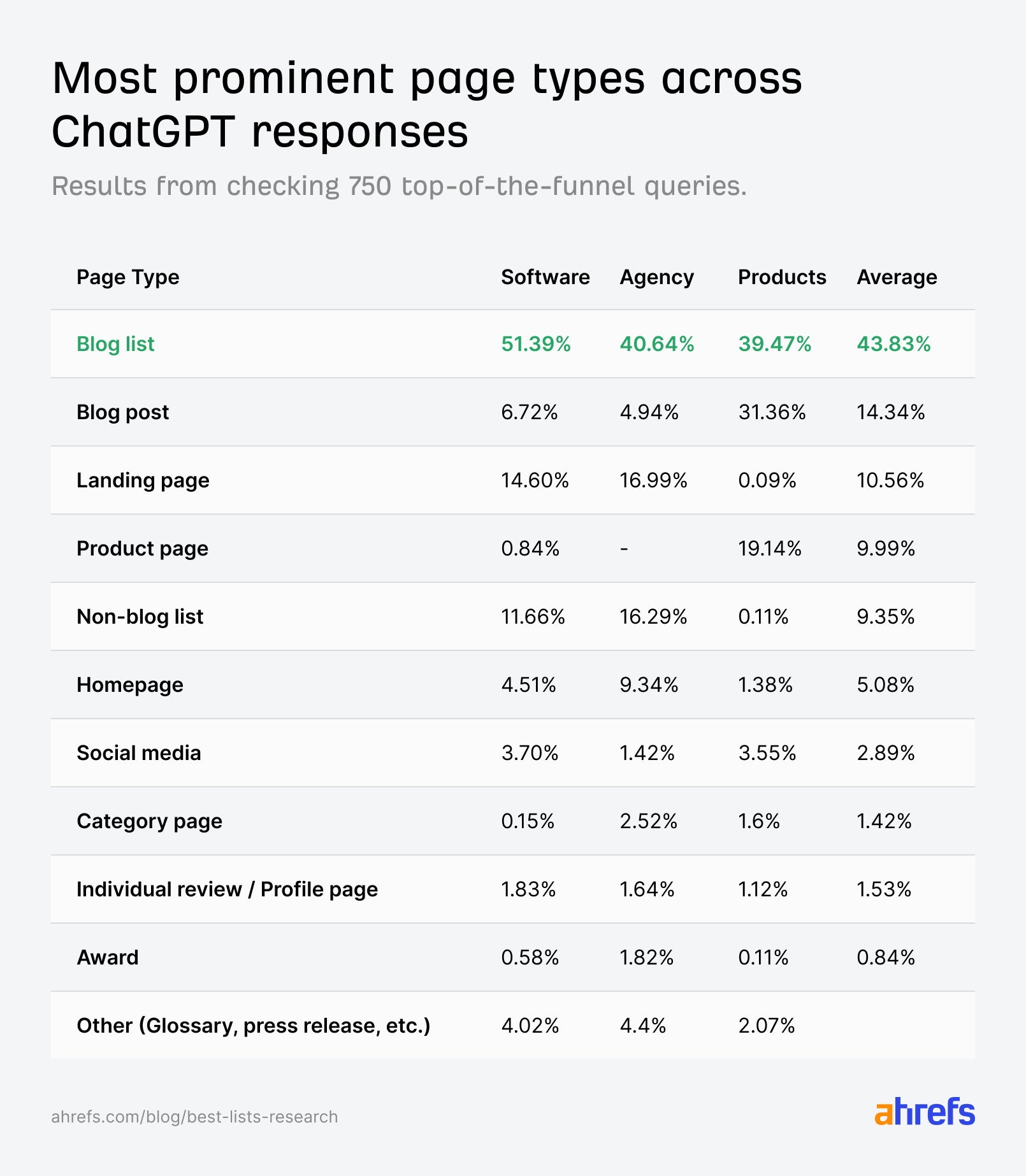

The table below covers the most common page types we saw across all three categories.

It includes both first-party mentions—when a recommended brand or product’s own website is cited—and third-party mentions.

Most of the page types we analyzed should be self-explanatory, but here’s some additional clarification on the most prominent ones:

- Blog List: A list of recommendations in a blog post format, such as this post on “The 8 Best AI Detectors, Tested and Compared” by our own Ryan Law

- Non-Blog List: Platforms like G2 (software) or Clutch (agencies) that rank recommendations, but not in a blog-post format

- Landing page: General pages covering a specific service an agency offers, or a market a SaaS company serves (e.g., this page on Siege Media showing they offer web design services)

- Social media: This covers sites like Reddit, Facebook, Instagram, and LinkedIn

Links may appear inline with recommendations, or as source links, which were used for research but require an additional click to view.

Each first-party mention was manually categorized.

For third-party mentions, totaling 10,000+ individual URLs, we used a semi-automated approach.

We set up dozens of manual filters to improve accuracy. For example, the learn.g2.com subdomain hosts content types very different from standard G2 category pages.

We categorized the remaining URLs with OpenAI’s GPT-5 model using custom instructions.

Finally, we reviewed each group of URLs and corrected tags where necessary, though we were happy with 95% of the original tagging.
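The filter-first, model-fallback approach can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not our actual pipeline: the two rules, the `/categories/` path pattern for G2 category pages, and the `model_fallback` callable (standing in for the GPT-5 step) are all hypothetical.

```python
from typing import Callable, List, Tuple
from urllib.parse import urlparse

# Hypothetical manual filters. Each takes (host, path) and returns True on a match.
def is_g2_category_page(host: str, path: str) -> bool:
    # Assumed URL pattern for standard G2 category pages. The learn.g2.com
    # subdomain never matches, because its content differs from category pages.
    return host in ("g2.com", "www.g2.com") and path.startswith("/categories/")

def is_social_media(host: str, path: str) -> bool:
    social_domains = ("reddit.com", "facebook.com", "instagram.com", "linkedin.com")
    return any(host == d or host.endswith("." + d) for d in social_domains)

# Rules are checked in order; the first match wins.
RULES: List[Tuple[Callable[[str, str], bool], str]] = [
    (is_g2_category_page, "Non-Blog List"),
    (is_social_media, "Social media"),
]

def classify_url(url: str, model_fallback: Callable[[str], str]) -> str:
    """Tag a cited URL with a page type, trying manual rules before the model."""
    parsed = urlparse(url)
    host = parsed.hostname or ""  # urlparse returns the hostname lowercased
    path = parsed.path or "/"
    for matches, page_type in RULES:
        if matches(host, path):
            return page_type
    # No manual filter applied: hand the URL to the model-based classifier.
    return model_fallback(url)
```

For example, `classify_url("https://learn.g2.com/best-crm-software", lambda url: "Needs review")` skips the category rule and returns whatever the fallback decides. Keeping cheap, predictable rules in front of the model means the manual review pass only has to focus on the URLs the rules couldn't settle.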

There’s a clear shift in which page types feature prominently from one category to the next. Landing pages are commonly cited when looking for the best software or agency, but rarely show up when researching products.

General blog posts on product websites performed far better than blog posts did in the software and agency categories.

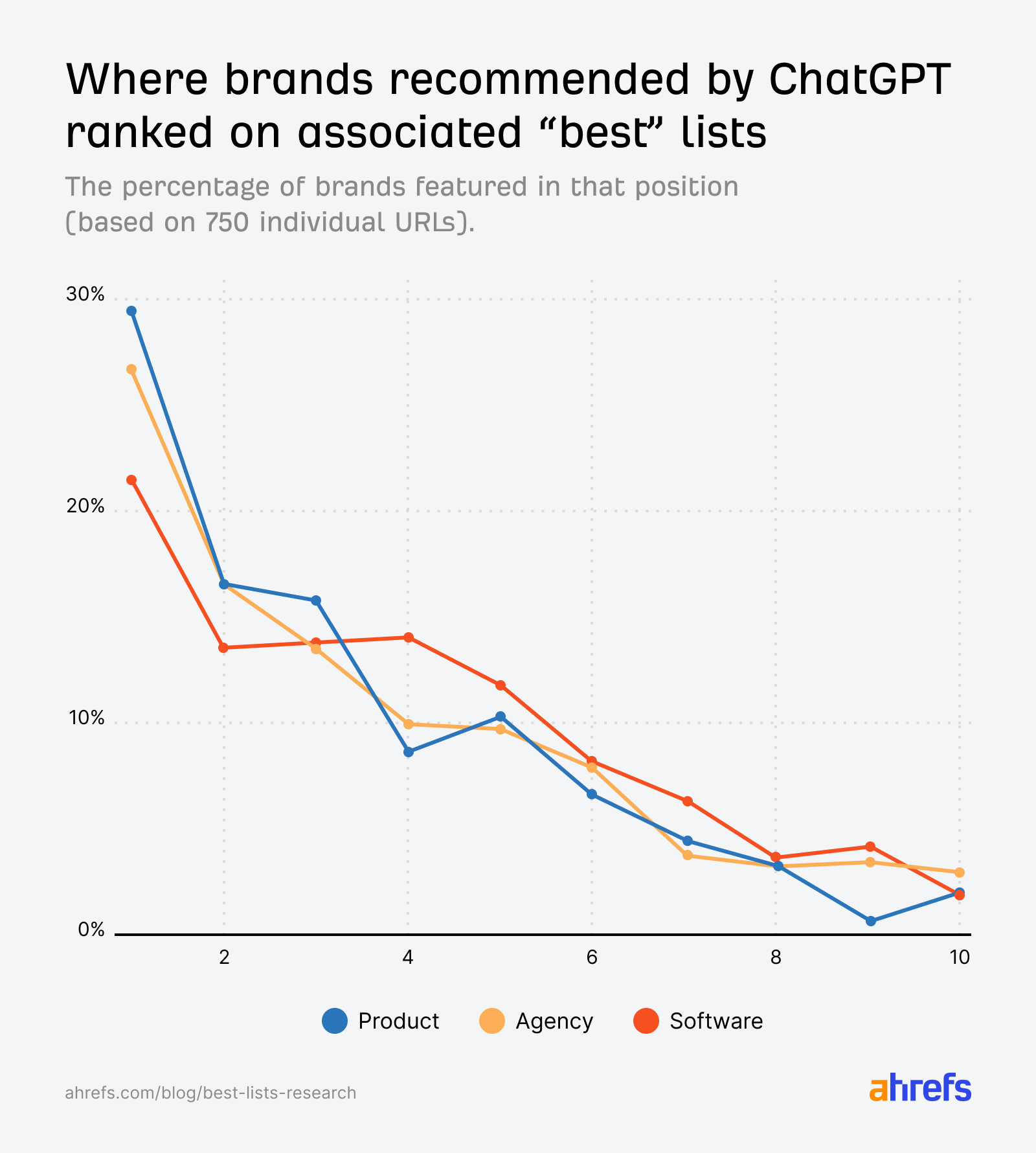

For each of the three categories, we took 250 “best” blog lists from ChatGPT’s responses (750 in total) to determine where a recommended brand was positioned in each list that was cited alongside it.

This was all done by hand, as automation wouldn’t be as accurate. For example, many lists claim a certain number of items but actually contain more, because the title or headline was never updated.

Automatically determining where a brand features in a list is even more complex, especially as lists aren’t always presented as clean, numbered headings.

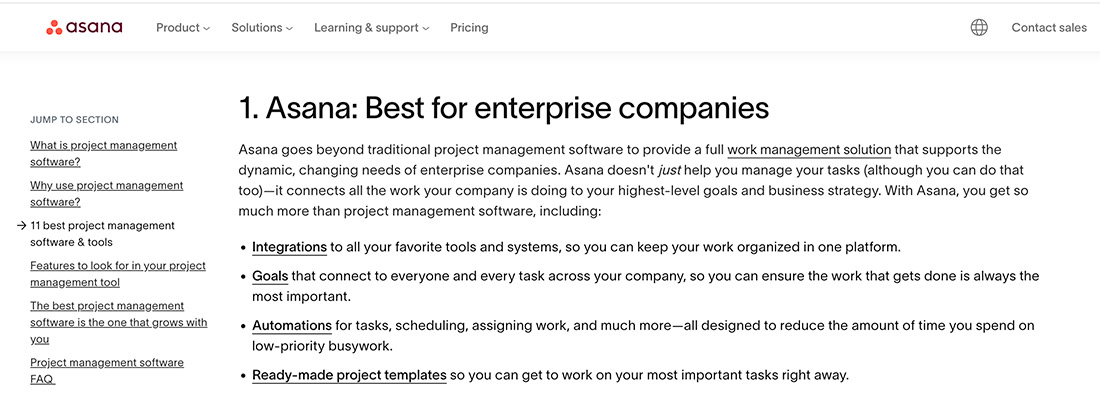

In the example below, you can see that Asana takes the top spot in its own list of the best project management software:

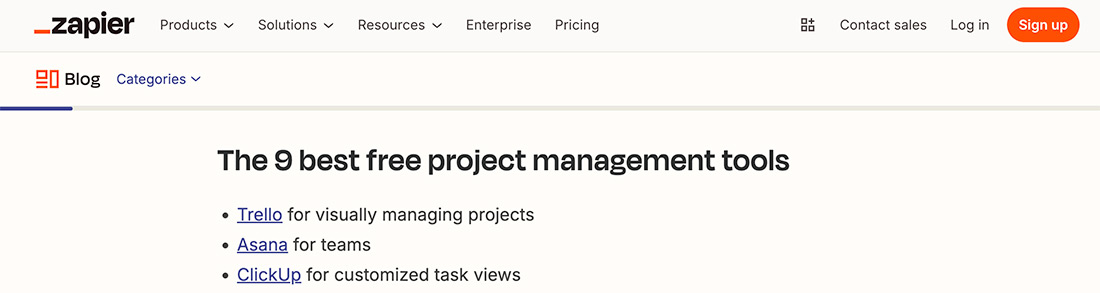

In a similar article on Zapier’s website, Asana is shown as the second recommendation:

Both pages were used as a source in a ChatGPT response.

Taking all 750 lists into account, here’s the distribution of where a mentioned product, brand, or service provider was featured in an associated “best” list.

While the data suggests that being positioned highly increases the chance of being recommended, there’s also some bias here.

Not every list has 10 or more items, so there will always be more recommendations at higher list positions: every list has a first item, but only longer lists have a tenth.

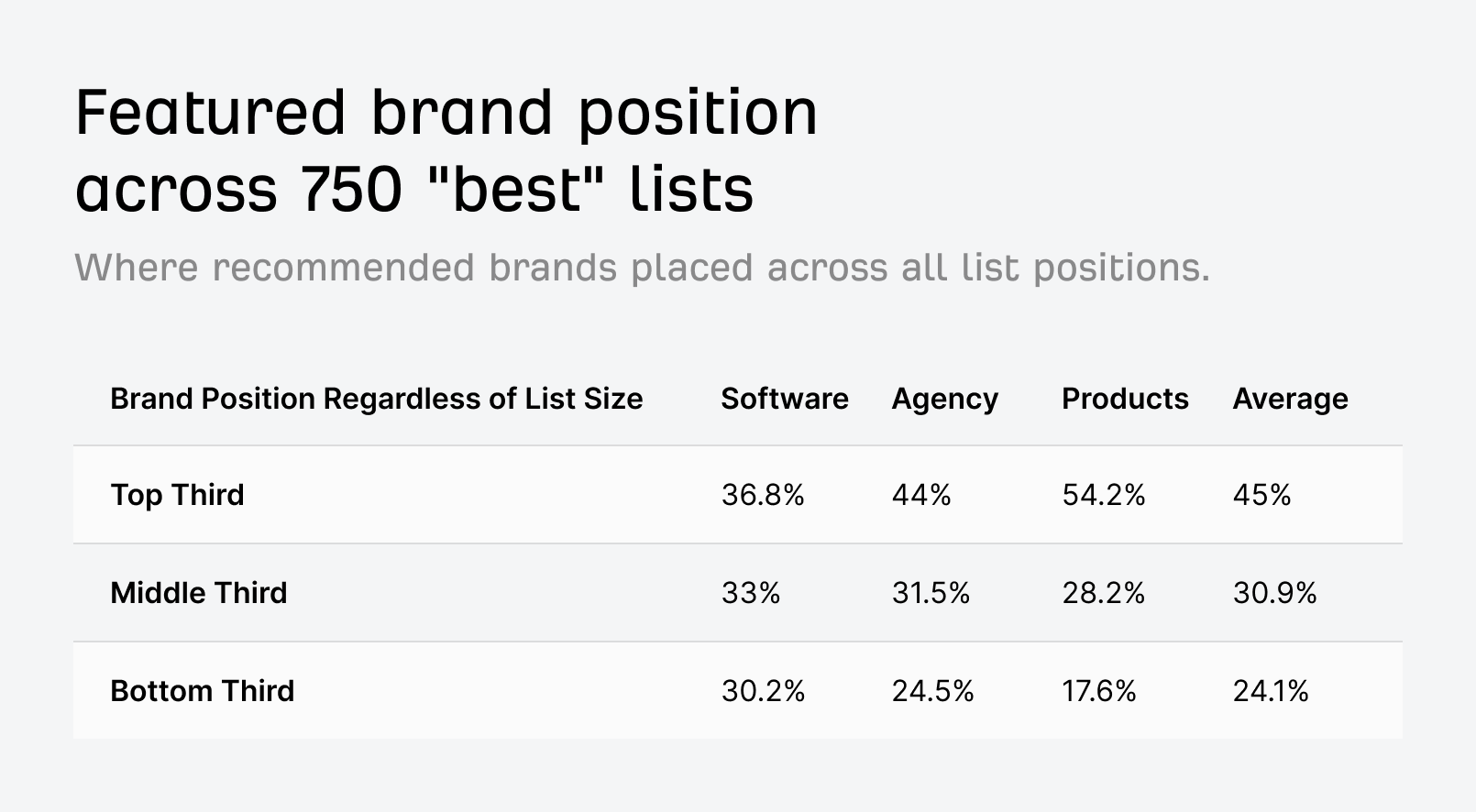

To correct for this, we can look at how many recommendations fall into the top, middle, or bottom third of a list, regardless of its size.
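One way to bucket positions into thirds is sketched below in Python. The study doesn’t spell out its exact boundaries, so the integer rule here (`(position - 1) * 3 // list_length`) is an assumption; with a 10-item list it puts positions 1 to 4 in the top third, 5 to 7 in the middle, and 8 to 10 in the bottom. The function names are hypothetical.

```python
from collections import Counter
from typing import Iterable, Tuple

THIRDS = ("top", "middle", "bottom")

def list_third(position: int, list_length: int) -> str:
    """Map a 1-based list position to the top, middle, or bottom third."""
    if list_length < 1 or not 1 <= position <= list_length:
        raise ValueError("position must be between 1 and list_length")
    # Integer arithmetic avoids floating-point surprises at the boundaries.
    # The index is always 0, 1, or 2 because (n - 1) * 3 < 3 * n.
    return THIRDS[(position - 1) * 3 // list_length]

def third_distribution(placements: Iterable[Tuple[int, int]]) -> Counter:
    """Count (position, list_length) placements per third, so lists of any size compare."""
    return Counter(list_third(position, length) for position, length in placements)
```

For instance, a brand ranked 1st of 10, another ranked 2nd of 3, and a third ranked 3rd of 3 produce one placement in each third, even though the raw positions alone would suggest a strong skew toward the top.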

As we can see from the overall distribution, there’s still a strong trend towards companies and products that ranked higher in the associated list.

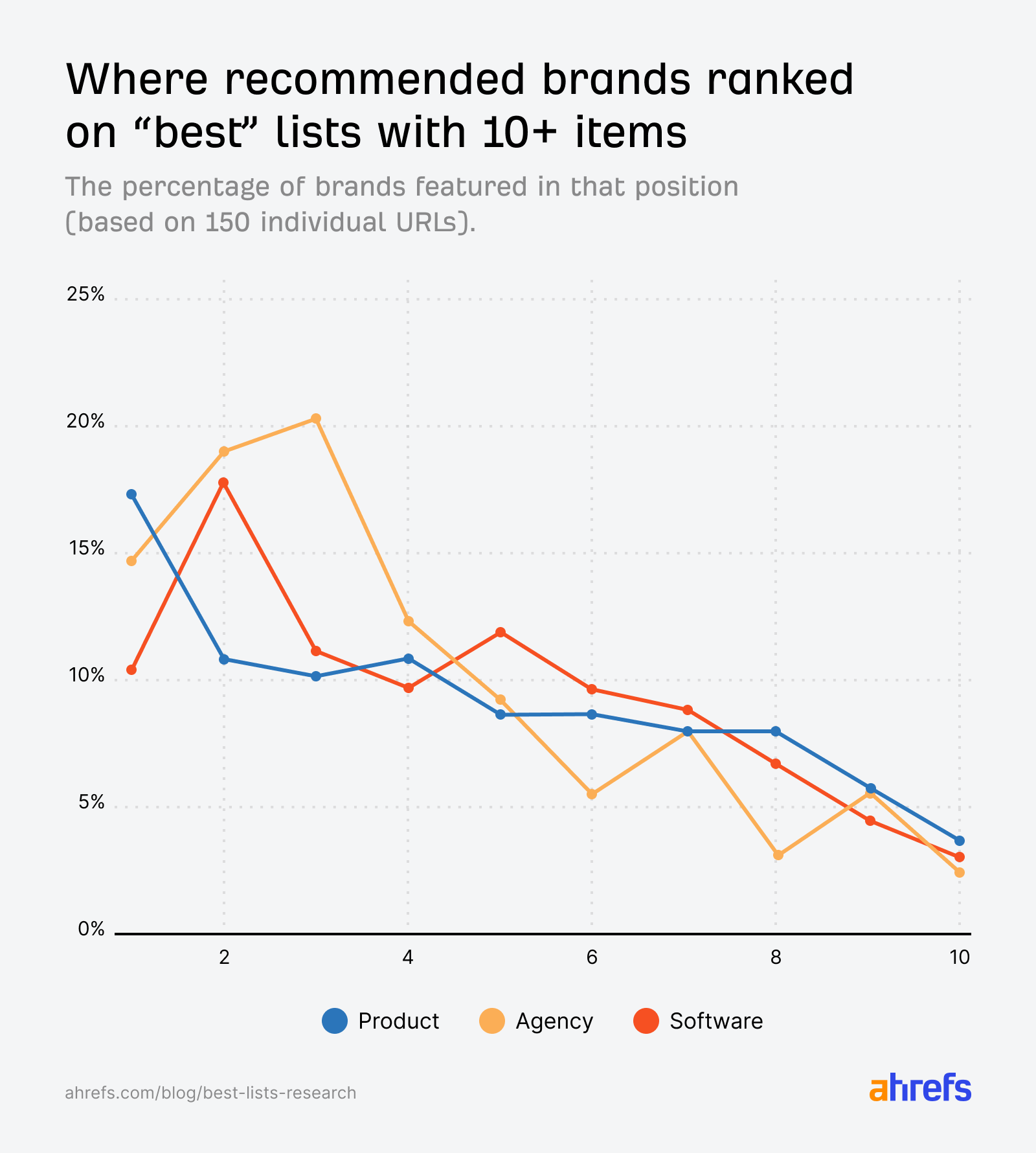

I wanted to go one better and also chart a separate set of lists with 10 or more items. The effect is less pronounced there, but it trends in the same direction:

Other factors may be at play here, as covered in the aforementioned supplemental doc.

One conclusion we might quickly jump to is that LLMs favor earlier sections of the data they’re given, and are therefore “prioritizing” the top recommendations.

I spoke briefly with Dan Petrovic from DEJAN SEO, who has done extensive research in this space, and he suggested that might not be the case. Some of his analysis reveals that how much content gets extracted varies depending on the domain or page being sourced.

We’re still studying this, so I hesitate to give a definitive conclusion, but even without AI, you would logically want to feature higher on lists of recommended software, products, or service providers in your industry.

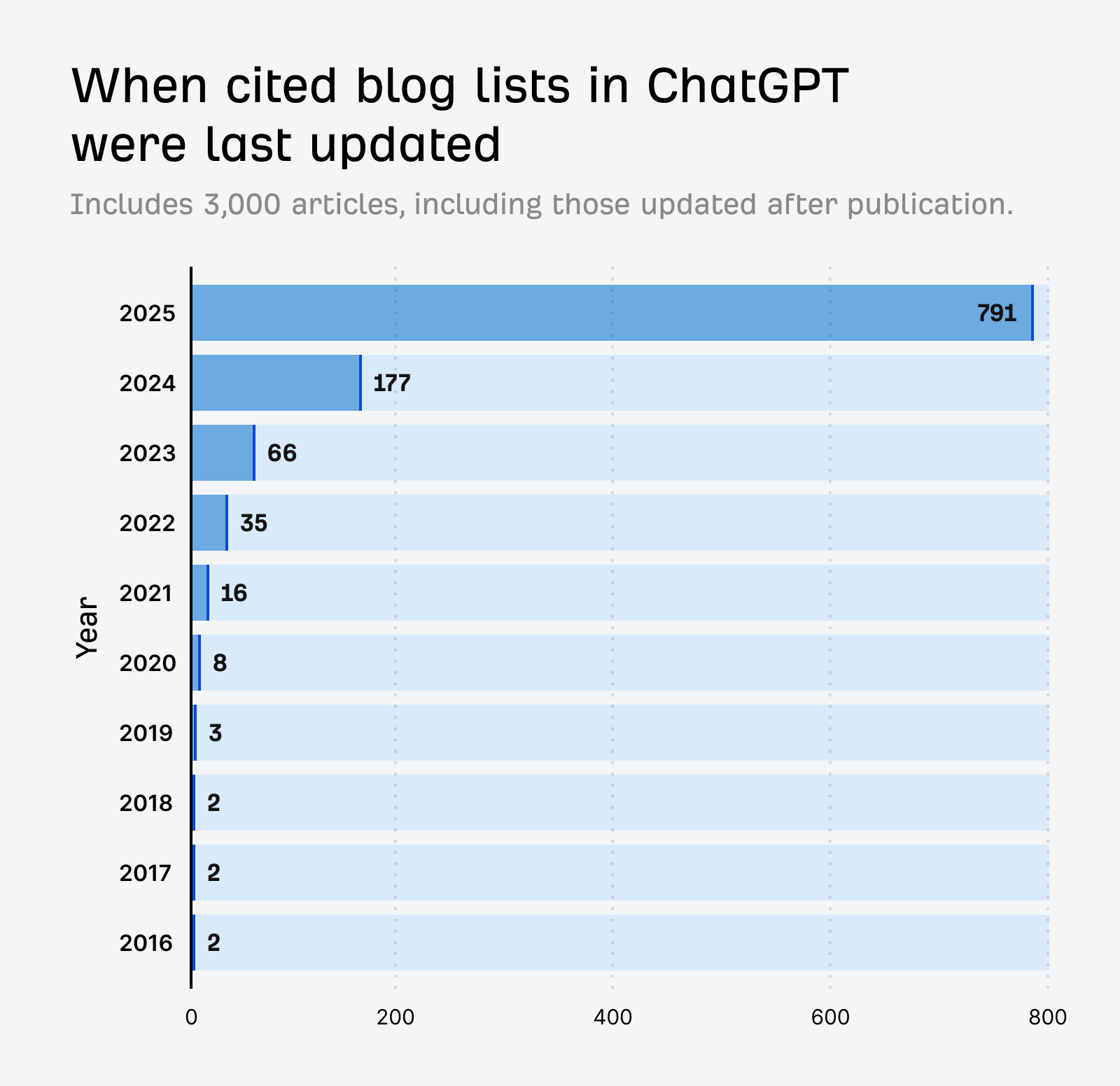

I took an equal sample of blog lists from our three categories and checked to see when they were published or last updated. After filtering out those without clear publish or modified dates, I was left with a clean list of 1,100 URLs to analyze.

79.1% were last updated in 2025, and 26% were last updated in the past two months alone.

These figures include pages that were either first published or later modified within the respective timeframe.

Interestingly, more articles had been updated since publication (57.1%) than had been published and left as is.
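Because “last updated” counts either first publication or a later modification, the freshness shares are easy to compute once each page’s dates are known. A minimal Python sketch, assuming each page has a publish date and an optional modified date, and treating “the past two months” as a hypothetical 61-day window before a reference date; the class, field, and function names are illustrative.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List, Optional

@dataclass
class ListPage:
    published: date
    modified: Optional[date] = None  # None when the page shows no modified date

    @property
    def last_updated(self) -> date:
        # Counts either the first publication or a later modification.
        return self.modified if self.modified is not None else self.published

    @property
    def updated_since_publication(self) -> bool:
        return self.modified is not None and self.modified > self.published

def freshness_stats(pages: List[ListPage], as_of: date, recent_days: int = 61) -> Dict[str, float]:
    """Return the share of pages meeting each freshness criterion."""
    if not pages:
        raise ValueError("need at least one page")
    total = len(pages)
    cutoff = as_of - timedelta(days=recent_days)  # assumed "past two months" window
    return {
        "last_updated_this_year": sum(p.last_updated.year == as_of.year for p in pages) / total,
        "last_updated_recently": sum(p.last_updated >= cutoff for p in pages) / total,
        "updated_since_publication": sum(p.updated_since_publication for p in pages) / total,
    }
```

Note that a page first published in 2025 and never modified still counts toward the “updated this year” share, which is why that figure can be much higher than the share of pages that were actually revised.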

Keeping articles up to date may help them perform better in traditional search, but our data suggests AI assistants favor even fresher content.

Spend some time reviewing the sources ChatGPT uses and it’s clear they are not always the most legitimate websites.

Many lack any human presence and look as though they were built almost entirely for link building and/or citation purposes.

You might expect a higher quality bar given that these platforms use Google and Bing as reference sources, but our own Louise Linehan found that 28% of ChatGPT’s most-cited pages have zero organic visibility.

My own research found that many questionable sites perform well in Bing but not as well in Google.

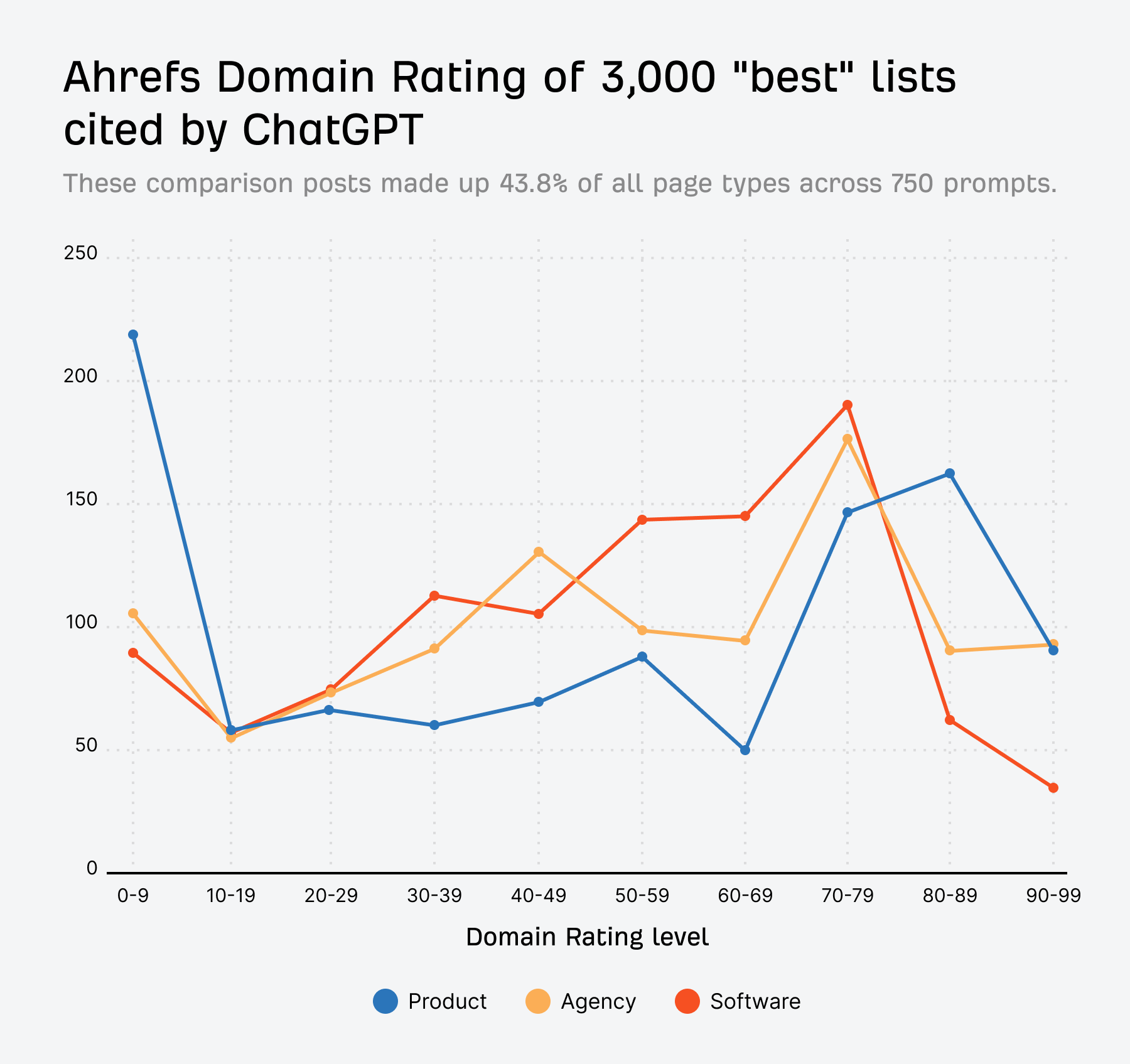

While Ahrefs’ Domain Rating (DR) isn’t a perfect indicator of site quality, when I took 1,000 cited “best” lists from each category (3,000 in total), I found that a significant portion were published on domains with low authority.

In simple terms, they have fewer high-quality sites linking to them.

As Glenn Gabe covered in his excellent article, Google’s own AI answers should have fewer issues promoting legitimate sites, but other AI search platforms will likely need to develop stronger trust and quality scoring going forward.

I expect we’ll see the left side of this chart decline over time.

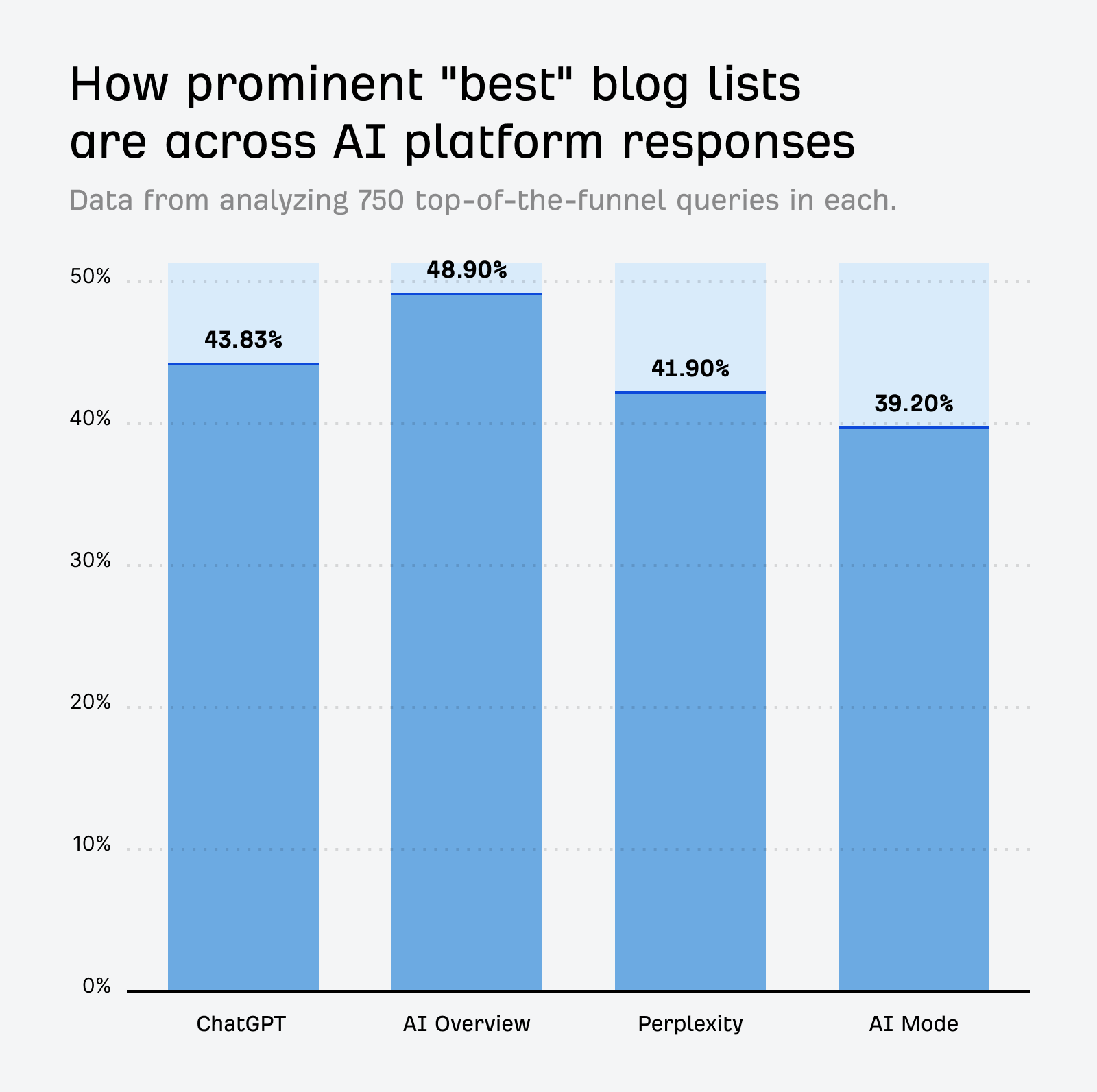

While the majority of this study was focused on ChatGPT, the prominence of these comparison articles isn’t exclusive to OpenAI’s platform.

In fact, they were slightly more prominent in Google’s AI Overviews:

It’s not just top-of-the-funnel queries where they show up either.

Looking at the top 1,000 most-cited pages on each platform via Ahrefs Brand Radar, every platform’s top pages included comparison listicles. And that’s across 150M+ total prompts.

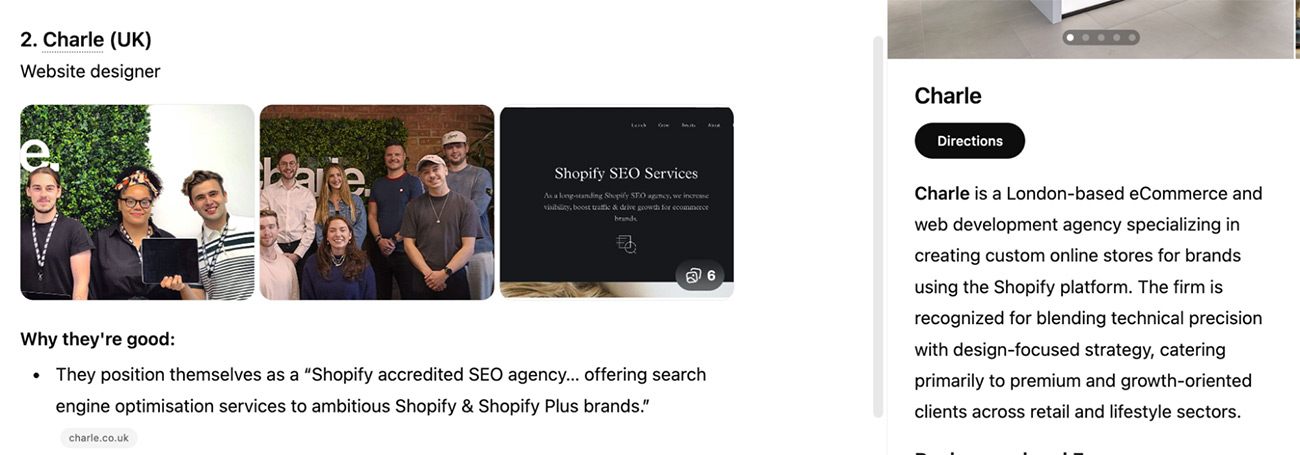

The way ChatGPT recommends agencies and products differs from how it currently recommends software.

When you’re looking for an agency, you can often click directly on that agency’s name to pop out a sidebar and learn more about it.

Unfortunately, for the purposes of analyzing different categories, this isn’t always the case.

As you can see below, there was no direct website link in the sidebar for Charle, but there was a link to their website in the text below their name.

Due to this inconsistency, for agency-focused prompts we decided to focus on the link associated with a brand, rather than the link that may appear in their sidebar.

This should have no impact on stats for things like Blog Lists or Non-Blog Lists, but it may have technically allowed us to find far more first-party homepages in our analysis.

While blog lists were highly prominent across all sources, when a brand’s own website was listed as the first recommendation, an internal landing page was more likely to appear than its homepage or best blog list.

The table below shows the percentage of prompts in which first-party mentions appeared, by page type.

| Page type | Software | Agency | Products |
|---|---|---|---|
| General Landing Pages | 37.2% | 30.4% | 0.4% |
| Blog List | 34% | 17.2% | 4% |
| Homepage | 15.6% | 14% | |
| Documentation (incl. PDFs) | 7.6% | 0.4% | 0.8% |
| Blog Post | 4.8% | 0.4% | 12.4% |
| Product Page | 0.4% | | 87.2% |

A straightforward explanation for landing pages outperforming blog lists in the software and agency categories is that companies are far more likely to have created landing pages covering the services and features they offer than to have made a self-serving blog list.

Though they weren’t the most prominent first-party page type, a self-promotional blog list still showed up in more than a third of ChatGPT responses in the software category when its publisher was recommended.

I found they were even more prominent in Google SERPs.

Across 250 “best X software”-style SERPs, 169 (67.6%) featured a list in which the company writing the article ranked itself number one.

Perform any type of “best [seo / web design / branding] agency” search in Google and there’s a strong chance you’ll see a self-promotional list ranking well.

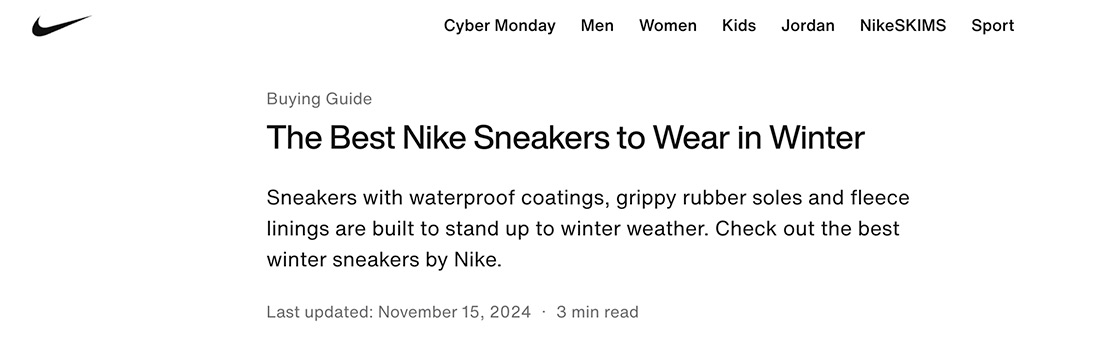

In the products category, it was rare for a company’s own blog list to appear, simply because lots of product companies don’t publish them.

You won’t see a page on Nike.com recommending Reebok, but they will publish an article on “The Best Winter Sneakers” and promote Nike’s own shoes throughout.

There isn’t anything new about creating pages to cover the markets you serve and the features you offer, but you might want to make sure those pages are valuable and up to date.

All of the data points to a clear answer: Agencies and SaaS companies should publish self-promotional “best” lists.

Countless popular brands like Shopify, Slack, Salesforce, HubSpot, and Superside have written them, and there appears to be no downside from a traditional ranking or AI citation perspective.

There’s also an argument for being proud of what you create, and for helping visitors navigate highly saturated markets.

That said, data is only one part of the equation.

During my own research, I’ve often been frustrated by the lists I come across. Many are overly self-promotional, or simply don’t link out to the alternatives they recommend.

While his comments were aimed at bad user experiences in general, rather than at self-serving blog lists specifically, Mark Williams-Cook made a great point on LinkedIn that poor user signals might catch up with you in due course.

Siege Media founder Ross Hudgens agrees these signals are important, but argues you can position your own brand first and still provide value.

Agency owner Wil Reynolds, whom I’ve respected for years, had another opinion:

Any marketer worth a damn knows listing yourself at the top of a list hosted on your site doesn’t build trust with buyers.

It wasn’t a common occurrence, but I did find examples of companies ranking themselves lower down their own lists.

DoorLoop placed itself 7th on this list of rental management software providers.

As someone with no connection to the business, I find that admirable, but there’s also an argument that they’re just helping competitors with their marketing.

You’ll have to decide which approach makes sense for your business, but let me at least share our current take.

At Ahrefs, our thinking is simple: We may publish more comparison posts as we roll out new features or enter new markets, but they’ll continue to make up less than half a percent of our total blog content.

We’re not going to create them for every tool or potential use case.

As is already the case, we won’t always put ourselves in the top spot.

We’ll continue to make it clear that the Ahrefs being recommended is us.

(Almost every company promoting itself does so in the third person, as if subtly trying to gloss over the fact that they’re writing about their own business.)

And we’ll keep linking directly to alternative solutions so visitors can research them on their own.

One change we are making thanks to this research is to celebrate more of our wins.

There were many cases where brands were mentioned alongside positive company updates, as shown below for Monday.com.

In another case, when gym management software Kilo was mentioned, it was alongside its announcement of earning an award from Capterra.

We could do a much better job there, with the potential added benefit of giving LLMs enticing commentary to use with any mentions.

Our primary focus will continue to be on things that have naturally gotten us mentioned on the lists of others, like constantly updating our product with new features, sharing original data and research, and being active where our audience is.

Mentions from brands like Zapier are always nice to see.

Just as we’ve shared how we spent $400,000 running an event and $1M+ sponsoring creators, and how we decide the business potential of new blog posts, we’ll keep you in the loop about how our approach to AI search visibility evolves.

Stay tuned for some behind-the-scenes updates I’m working on there.

If you have any thoughts on how I could improve the next iteration of this study or anything else you would like me to dive into, please let me know over on LinkedIn or X.