Built into these scores is an implicit assumption: the higher your score, the higher you’ll likely rank on Google.

But is that actually true?

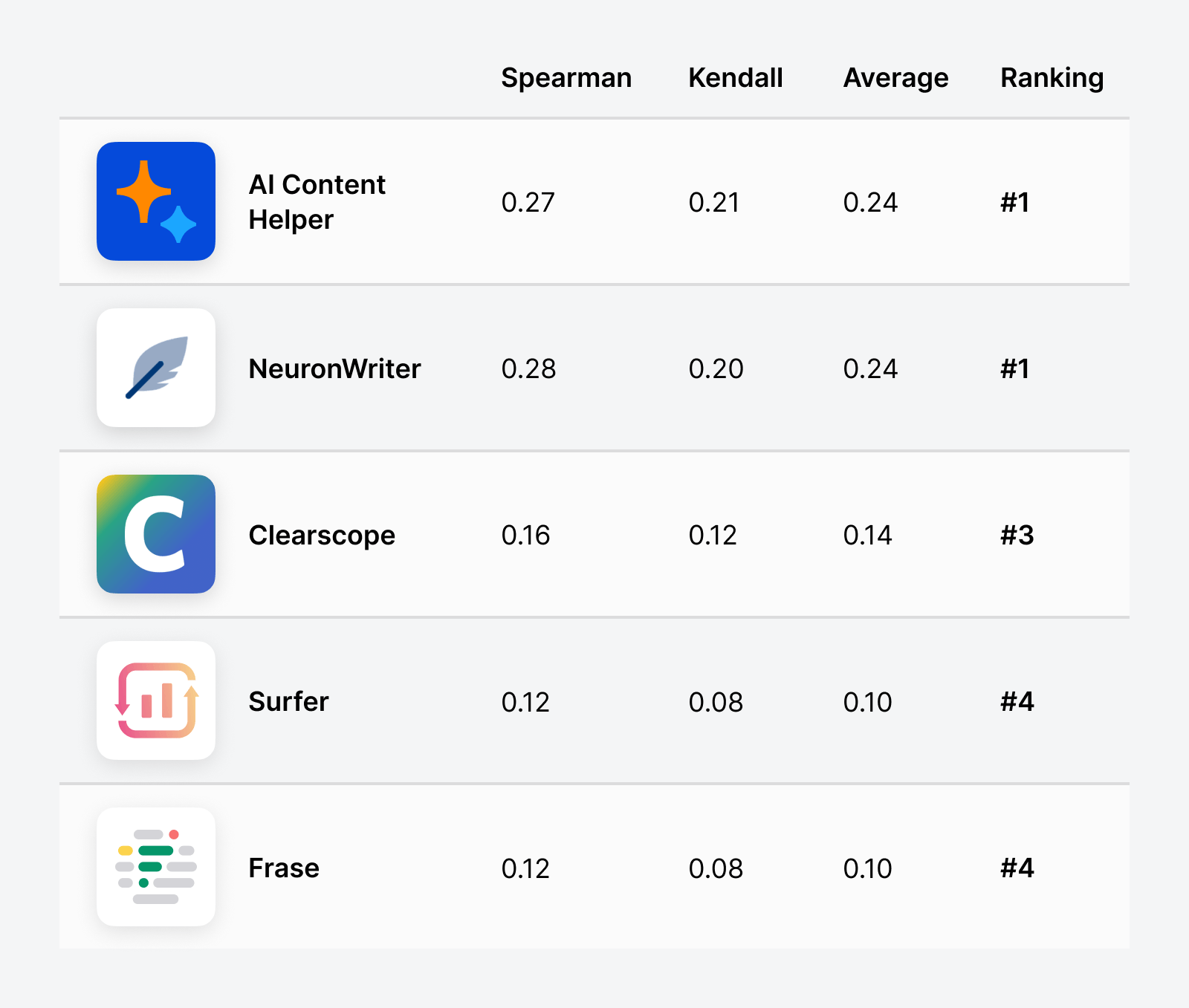

To find out, I studied the correlation between rankings and content scores from five content optimization tools: Surfer, Frase, NeuronWriter, Clearscope, and our own AI Content Helper.

We found weak correlations everywhere.

But out of the five tools I tested, NeuronWriter and AI Content Helper fared the best. The other three only had very weak correlations.

At first glance, these numbers may seem underwhelming. But ‘weak’ doesn’t mean ‘useless’.

Google uses hundreds of ranking signals to determine how pages rank. While correlation may not be causation, even a weak positive correlation means content scores can still move the needle.

Especially when it’s a factor you directly control.

Imagine if you had a button you could press that would give you a 10% chance of improving your rankings by one or two places. Would you press it?

I would spam the hell out of that button.

These tools are inexpensive, and you can use them to generate a positive impact on your rankings. It’s a massive, massive win for our industry.

I selected 20 random keywords. I then entered these keywords into each tool and generated content reports. I jotted down the scores for each URL on the SERPs.

Despite their increasing prominence in the SERPs, most of the tools had trouble analyzing Reddit, Quora, and YouTube. They typically gave a zero or no score for these results. If they showed no scores, we excluded them from the analysis.

Clearscope was the only tool that graded the SERPs, rather than showing a numerical score. I used ChatGPT to convert those grades into numbers.

Since each tool pulled a slightly different SERP for each keyword, we separated the data by keyword and calculated the correlation between ranking and scores within each keyword instead.

We calculated both Spearman and Kendall correlations and took the average. Spearman correlations are more sensitive and therefore more prone to being swayed by small sample sizes and outliers. On the other hand, the Kendall rank correlation coefficient only takes order into account. So, it is more robust for small sample sizes and less sensitive to outliers.
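To make the method concrete, here is a minimal sketch of a per-keyword calculation in plain Python. It is an illustration, not the exact script used in the study. The function names, the sample data, and the sign convention (negating the SERP position so that "higher score, better ranking" comes out positive) are all assumptions. It also reads "took the average" as averaging the two coefficients within each keyword and then averaging across keywords, which is only one possible reading.

```python
from itertools import combinations
from statistics import mean


def average_ranks(values):
    """Rank values 1..n; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of 1-based positions i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; assumes x and y each have at least two distinct values."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def kendall_tau_b(x, y):
    """Kendall tau-b: compares the ordering of every pair, with a tie correction."""
    concordant = discordant = ties_x = ties_y = 0
    for (x1, y1), (x2, y2) in combinations(list(zip(x, y)), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0 and dy == 0:
            continue  # tied on both variables: ignored
        elif dx == 0:
            ties_x += 1
        elif dy == 0:
            ties_y += 1
        elif dx * dy > 0:
            concordant += 1
        else:
            discordant += 1
    untied = concordant + discordant
    denom = ((untied + ties_x) * (untied + ties_y)) ** 0.5
    return (concordant - discordant) / denom


def keyword_correlation(positions, scores):
    """Average of Spearman and Kendall for one keyword's SERP.

    Assumption: position 1 is the best ranking, so positions are negated
    to make 'higher score, better ranking' a positive correlation.
    """
    better = [-p for p in positions]
    return (spearman(better, scores) + kendall_tau_b(better, scores)) / 2


# Hypothetical data: keyword -> (SERP positions, content scores).
serps = {
    "keyword a": ([1, 2, 3, 4, 5], [80, 75, 78, 60, 65]),
    "keyword b": ([1, 2, 3, 4, 5], [55, 70, 62, 58, 40]),
}
per_keyword = {kw: keyword_correlation(p, s) for kw, (p, s) in serps.items()}
overall = mean(per_keyword.values())
print(per_keyword, overall)
```

Computing the correlation inside each keyword first means scores are only ever compared against pages on the same SERP, which matters because each tool pulled a slightly different SERP.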

Given what we now know about content scores having weak (but meaningful) correlations with rankings, here’s how to use them effectively:

1. Focus on topic coverage, not keyword density

Google wants you to provide a comprehensive description of the topic you’re targeting. Your content should address the subtopics and questions your audience cares about.

Which means: The content score can be used as a barometer for topic coverage. For example, if your score is significantly lower than the scores of competing pages, you’re probably missing important subtopics that searchers care about.

However, you need to be aware of how your chosen tool’s content score is calculated. In many tools, the score is largely based on how many times you use the recommended set of keywords. In fact, in some tools, you can literally copy-paste the entire list, draft nothing else, and get an almost perfect score.

Keyword density is not topic coverage.

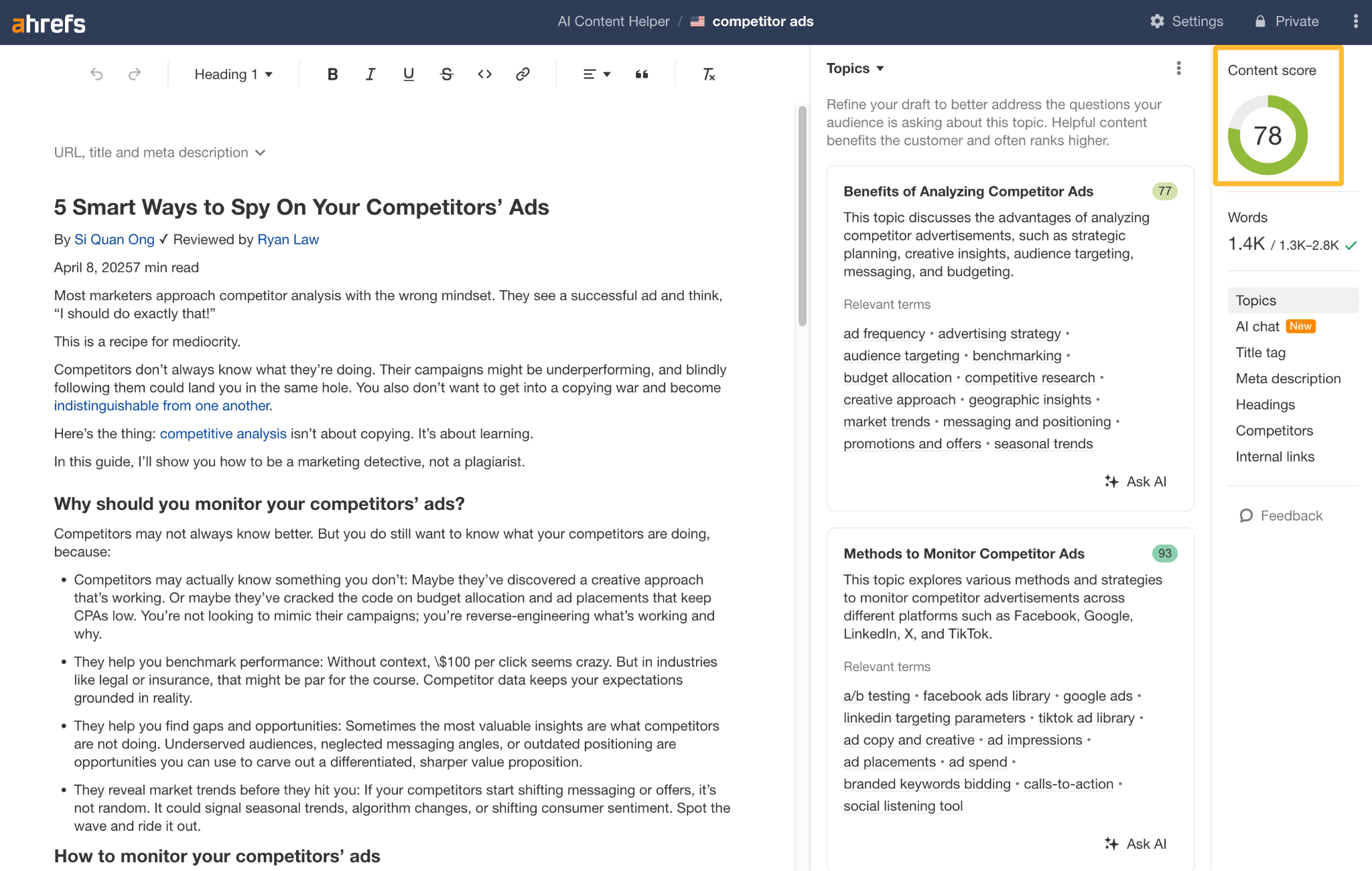

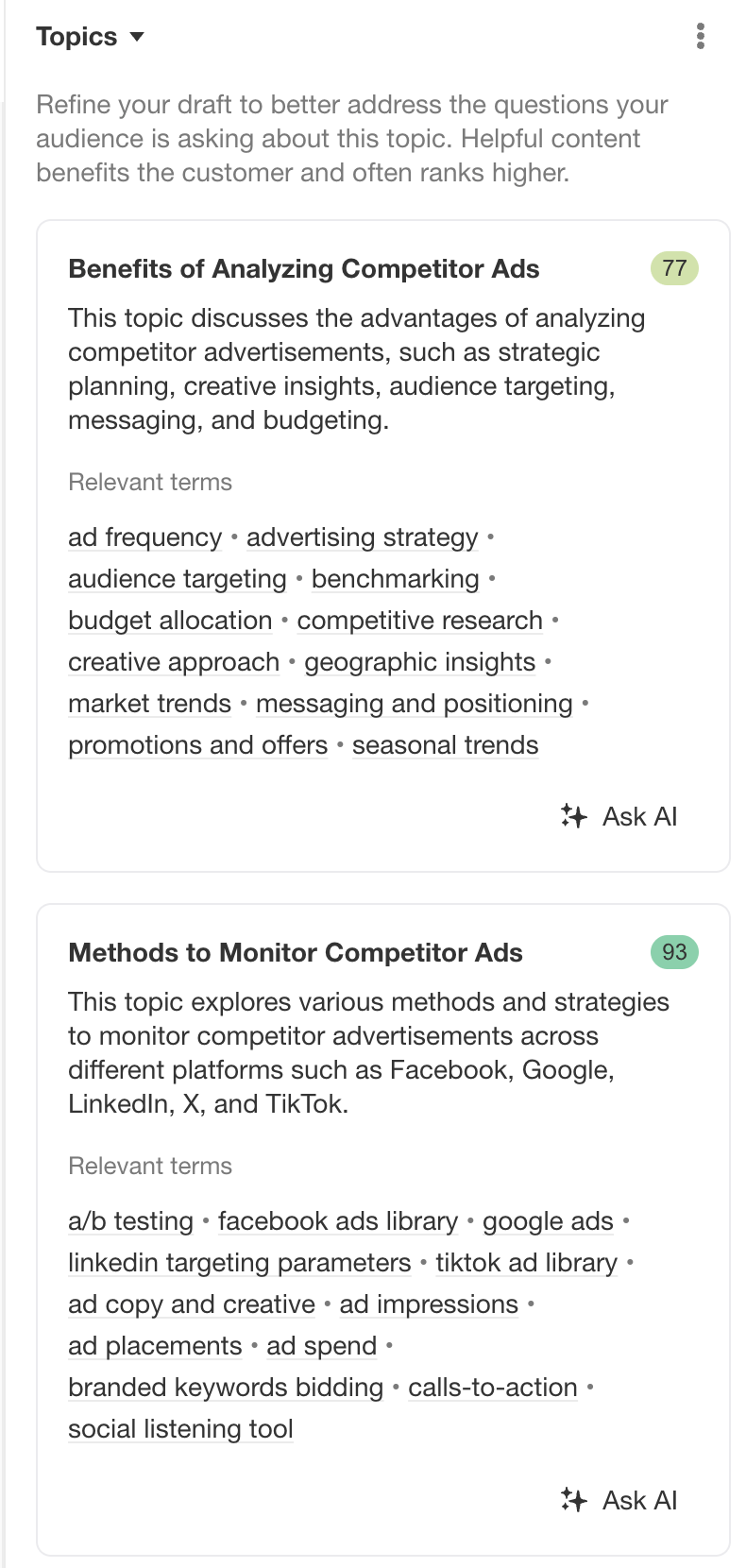

That’s why we designed our AI Content Helper to focus on topic optimization. The goal is comprehensive content coverage—making sure you cover all the information your readers need to answer their questions.

Unlike other tools, you can’t ‘game’ our score by stuffing more keywords.

We think that this makes for better, more value-added content that’s more closely aligned with what both readers and Google want.

So far, so good. Not only did we score the highest in our correlation study, we also have examples of how we boosted our search traffic with our own tool:

2. Use relative scores as benchmarks

Don’t obsess over getting a perfect 100. Instead, use scores as a relative benchmark against top-ranking content.

If competing pages score in the 80-85 range while your page scores 79, it likely isn’t worth worrying about. But if it’s 95 vs. 20 then yeah, you should probably try to cover the topic better.
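As a rough illustration of that comparison, here is a small sketch. The function name, the use of the median as the benchmark, and the 10-point tolerance are all assumptions made for the example, not settings from any of the tools.

```python
from statistics import median


def benchmark(your_score, competitor_scores, tolerance=10):
    """Compare a page's score with the median score of competing pages.

    `tolerance` is a hypothetical cutoff: gaps at or below it are treated
    as being in the same ballpark as the competition.
    """
    gap = median(competitor_scores) - your_score
    if gap <= tolerance:
        return "same ballpark"
    return "improve topic coverage"


print(benchmark(79, [80, 82, 85]))  # small gap to the 80-85 range
print(benchmark(20, [95]))          # large gap
```

The point is the gap to competing pages, not the distance from 100.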

3. Balance optimization with originality

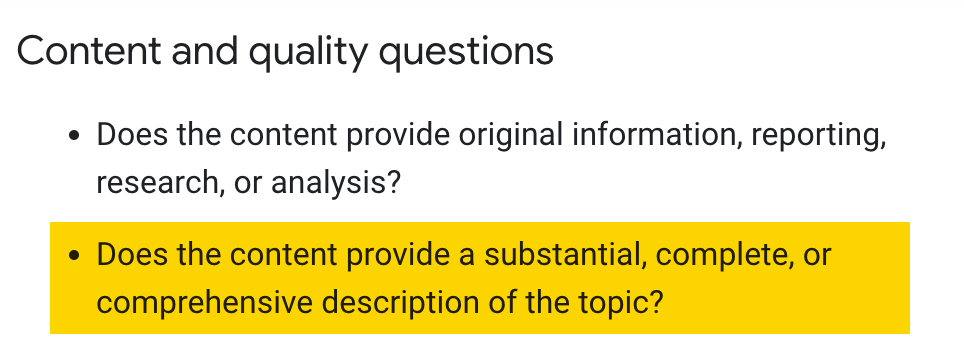

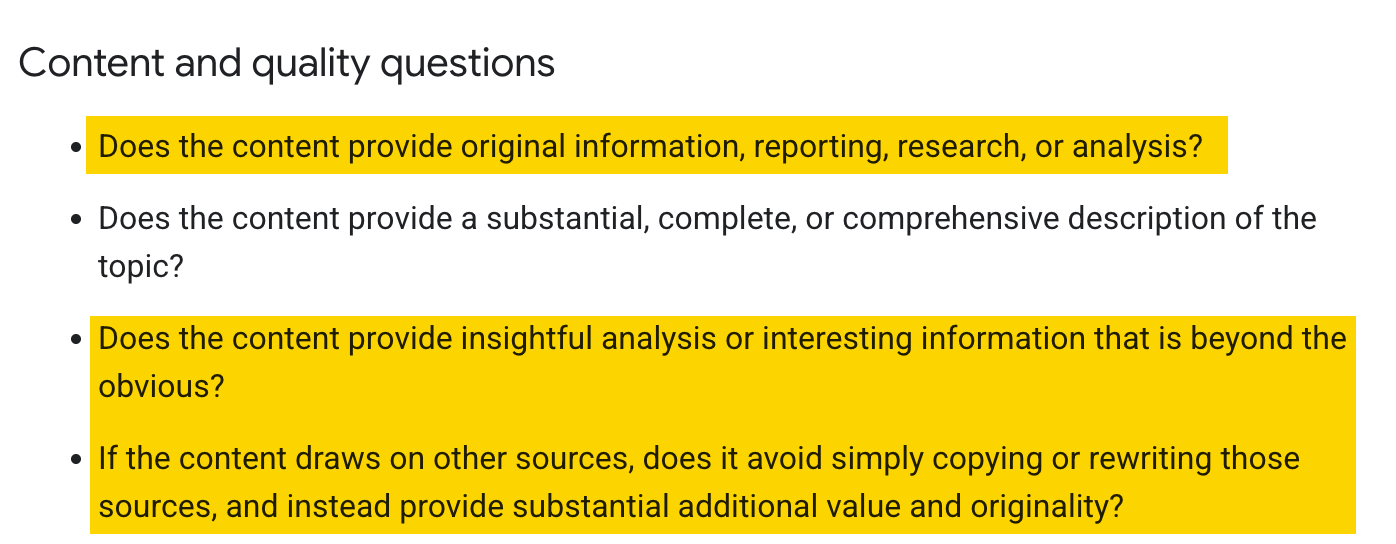

Content scores tell you how well you’re covering the topic based on what’s already out there. If you cover all important keywords and subtopics from the top-ranking pages and create the ultimate copycat content, you’ll score full marks.

This is a problem because quality content should bring something new to the table, not just rehash existing information. Google literally says this in their helpful content guidelines.

The most successful content strikes a balance between thorough topic coverage and original value.

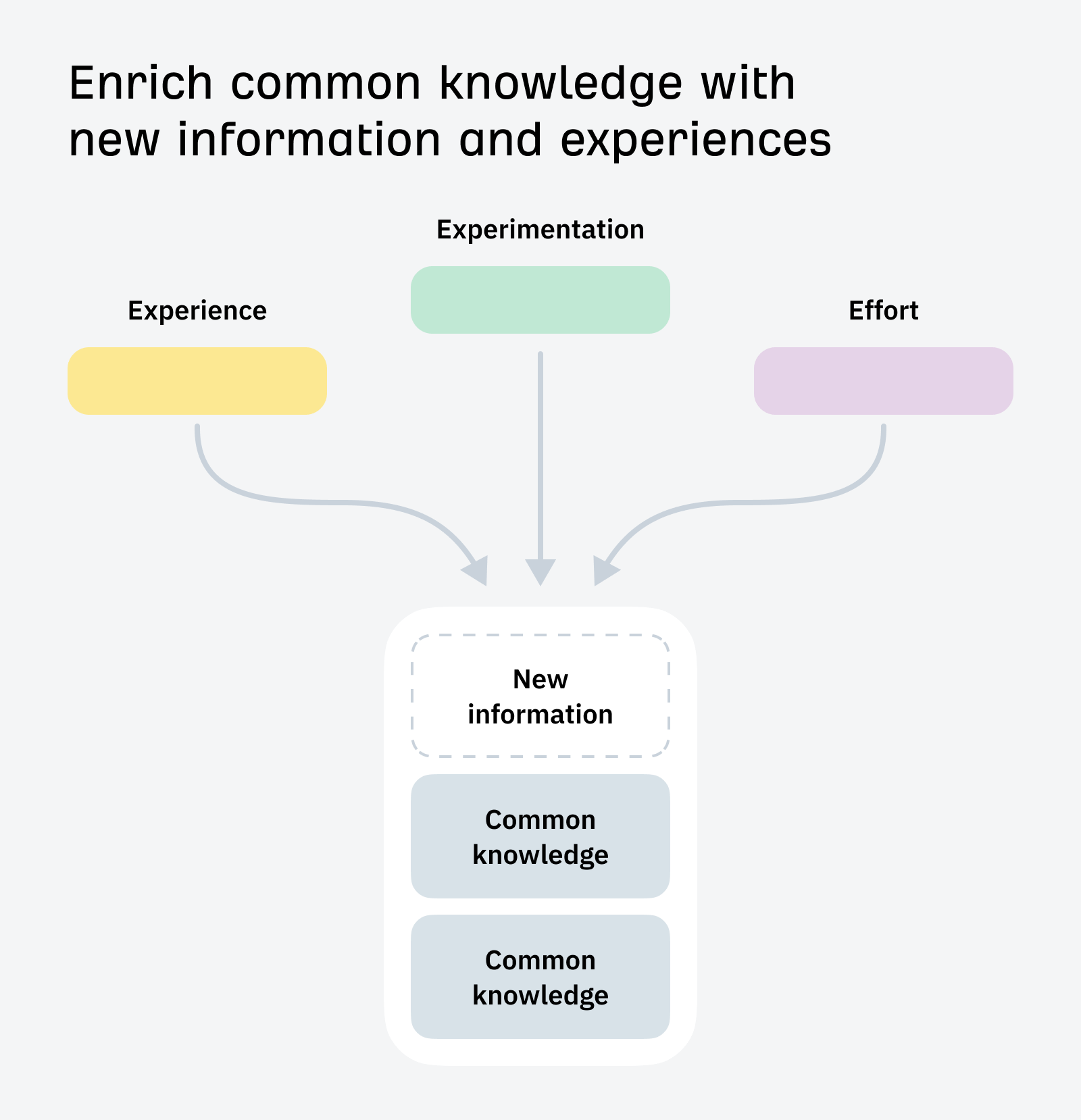

So, while you’ll want to make something comprehensive and answer all your readers’ questions, make sure you’re also creating something that’ll stand out from the rest of the search results.

This will require experience, experimentation, or effort, which only humans can bring.

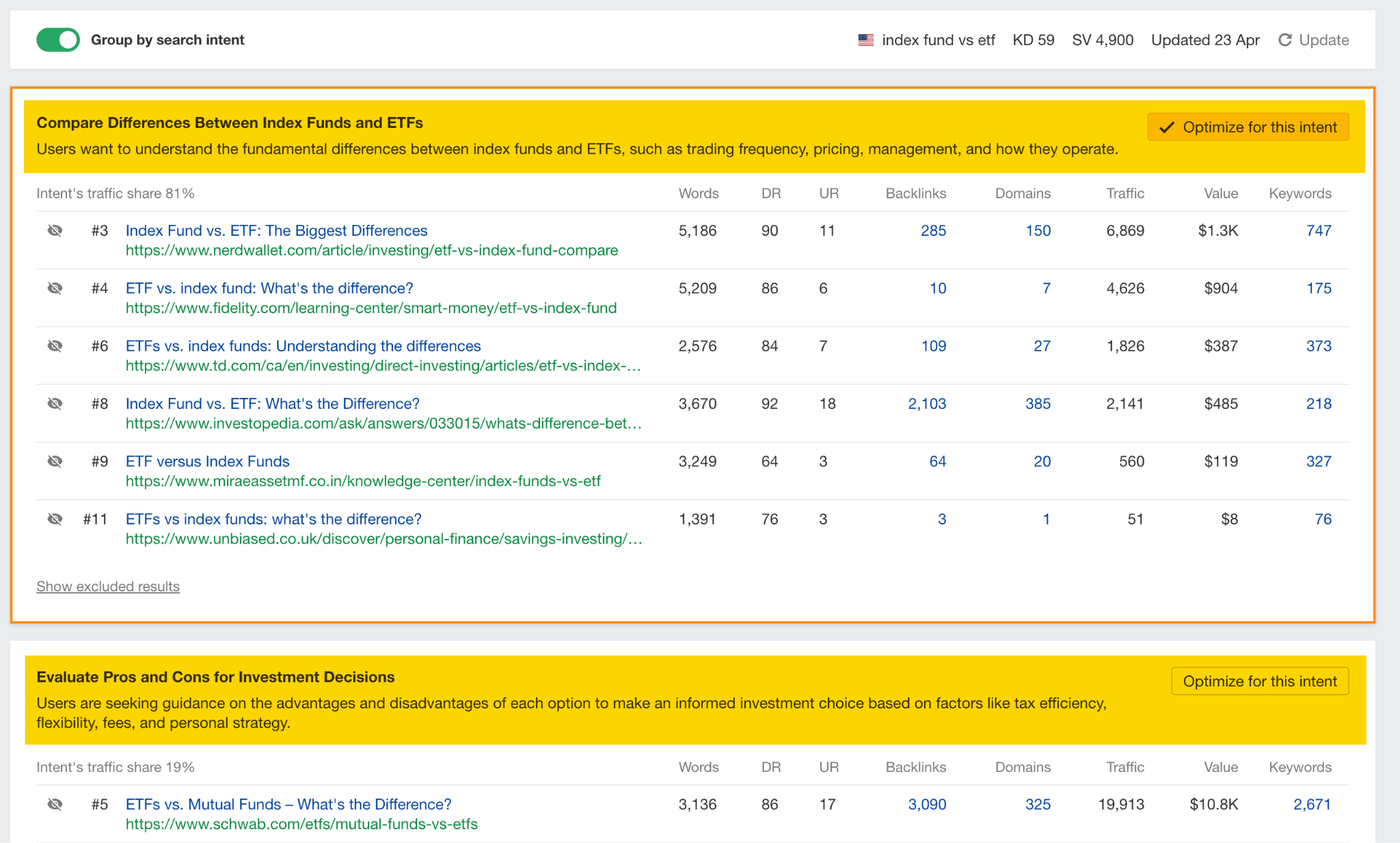

4. Consider search intent first, scores second

Before diving into content optimization, make sure you understand what users actually want.

Content scores are most valuable when they’re guiding content that already matches the right search intent. That’s why the step after entering a keyword in AI Content Helper is for you to select the search intent you want to fulfill:

Final thoughts

Although the correlation between scores and rankings is weak, anything you can control that can impact your rankings is amazing news. Just don’t obsess and spend hours trying to get a perfect score; scoring in the same ballpark as top-ranking pages is enough.

You also need to be aware of the downsides of content scores, most notably that they can’t help you craft unique content. That requires human creativity and effort.