Surprisingly, the issues you see the most aren’t necessarily the ones you should be losing sleep over. They may be worth fixing, but they definitely don’t belong at the top of your list.

For instance, we found that meta descriptions were missing on 72.9% of the sites we studied. But these don’t impact rankings, and Google will generate a snippet for you in that case anyway. Conversely, a serious issue (a canonical tag pointing to a broken page) was found on only 2.6% of sites.

So if you want to fix issues that can seriously impact your rankings, here’s a better idea: use the free Ahrefs Webmaster Tools and Google Search Console to see whether your site is affected by any of the nine issues we list in this article.

Read on to learn why these issues matter and how to use the tools to find and fix them.

Indexability is a webpage’s ability to be indexed by search engines. Depending on the issue, Google may index the wrong pages or be unable to display them in the SERPs (and you won’t get any traffic to these pages).

Here are some examples of these issues:

- Canonical points to 4XX — Only valid, live URLs should be specified as canonicals. When the search engine crawler can’t access the specified canonical page, this instruction will be ignored, and the wrong (non-canonical) version of the page may be indexed.

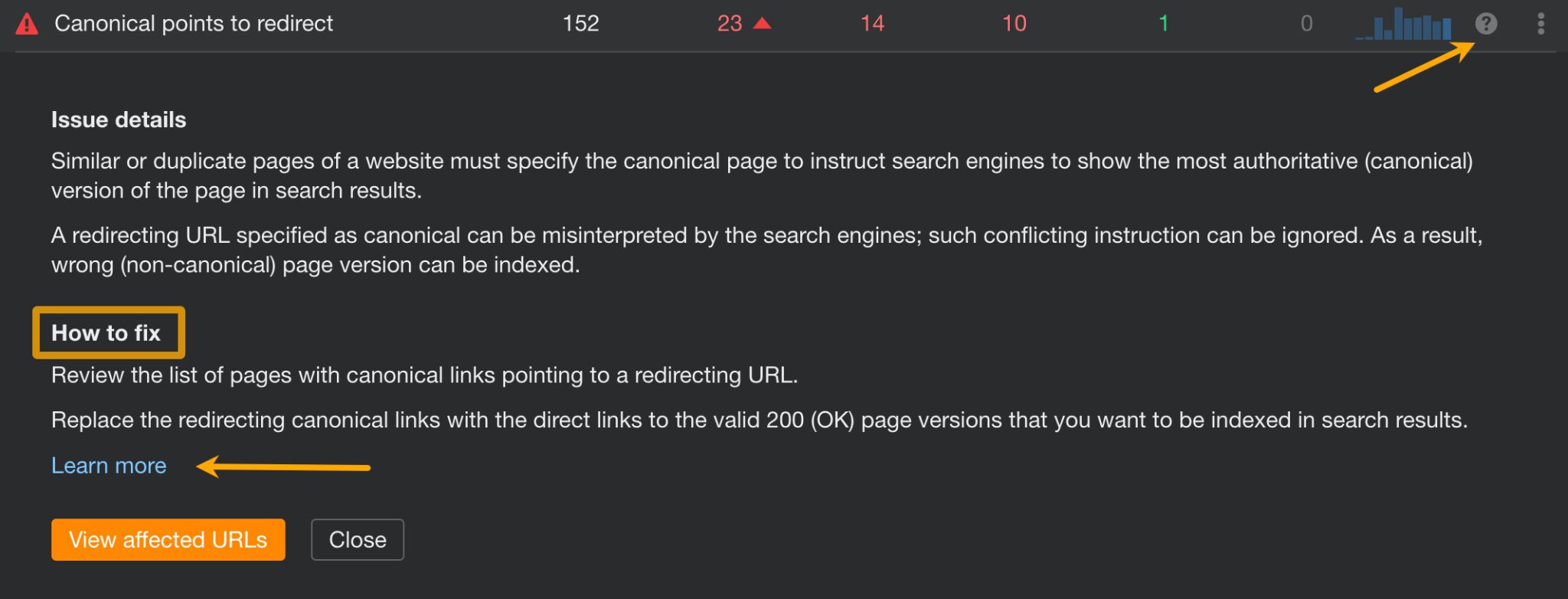

- Canonical points to redirect — Similar or duplicate pages of a website should specify a canonical page to tell search engines which (most authoritative) version to show in search results. That canonical URL should be the final, live destination; if it redirects elsewhere, search engines may ignore the hint.

Three requirements must be met for a page to be indexable:

- The page must be crawlable. If you haven’t blocked Googlebot from the page in robots.txt and your website has fewer than 1,000 pages, you probably don’t have an issue there.

- The page must not have a noindex tag (more on that in a bit).

- The page must be canonical (i.e., the main version).
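If you want to double-check the first requirement yourself, Python’s standard-library `urllib.robotparser` can evaluate robots.txt rules. This is a minimal sketch under stated assumptions: the robots.txt content and the `domain.com` URLs are hypothetical, it only answers the robots.txt question (not noindex or canonicalization), and the standard-library parser may not match Google’s own parser exactly, for example on wildcard patterns.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice you'd read your site's live
# https://domain.com/robots.txt instead of a hard-coded string.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /tmp/
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the robots.txt rules allow `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for page in ("https://domain.com/blog/post", "https://domain.com/private/page"):
        print(page, "crawlable" if is_crawlable(ROBOTS_TXT, page) else "blocked")
```

Googlebot only follows its own group here, so `/tmp/` stays crawlable for it while other crawlers fall back to the `*` group.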

How to fix

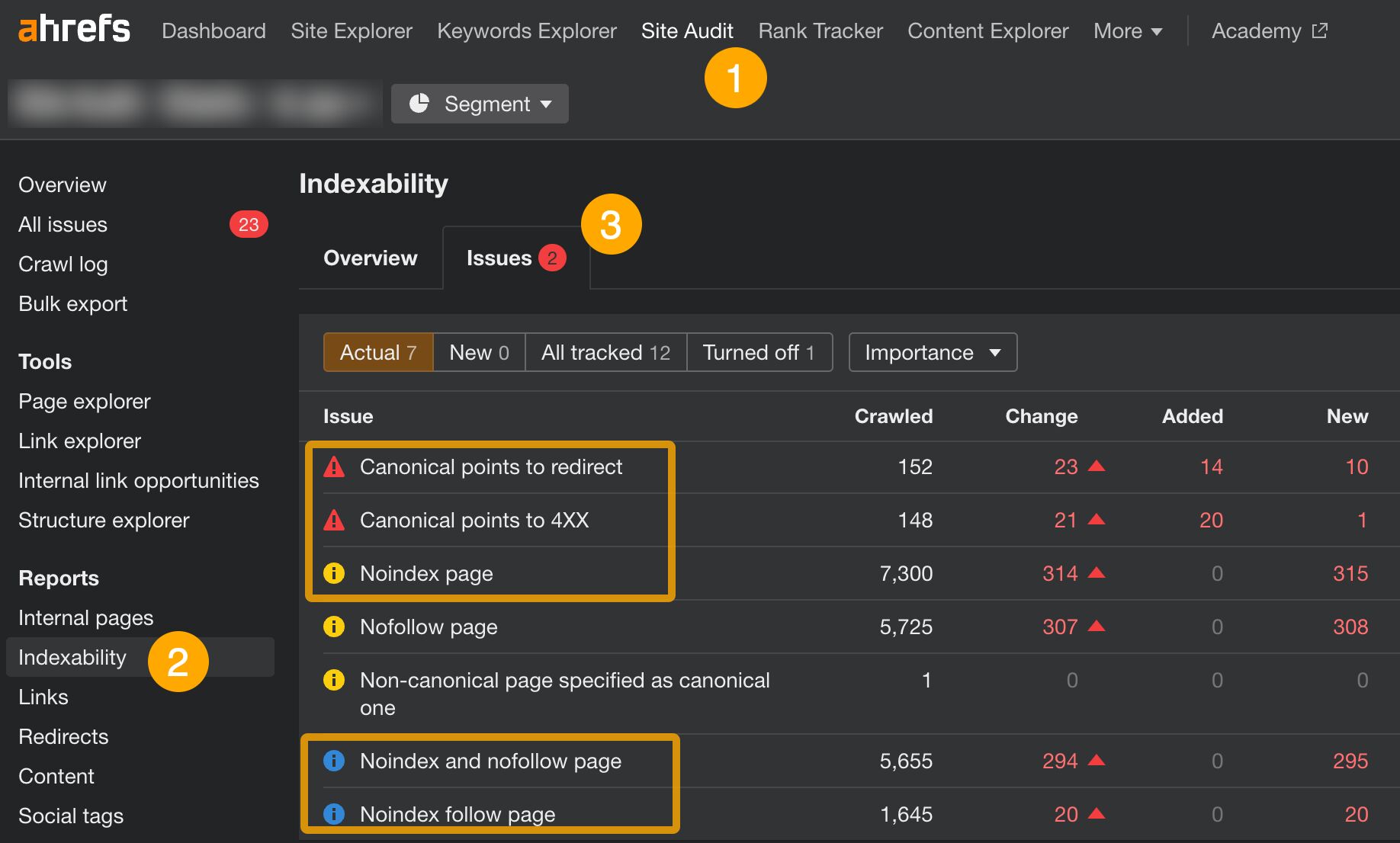

Sign up for a free Ahrefs Webmaster Tools (AWT) account and set up your project (it only takes a few clicks). Next:

- Open Site Audit.

- Go to the Indexability report.

- Click on issues related to canonicalization and “noindex” to see affected pages.

For canonicalization issues in this report, you will need to replace bad URLs in the `<link rel="canonical">` tag with valid ones (i.e., URLs returning “HTTP 200 OK”).

As for pages marked by “noindex” issues, these are the pages with the “noindex” meta tag placed inside their code. Chances are, most of the pages found in the report should stay as they are. But if you see any pages that shouldn’t be there, simply remove the tag. Do make sure those pages aren’t blocked by robots.txt first.
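To spot-check a single page outside the tool, you can read both signals straight from its HTML. A minimal sketch, assuming you already have the page’s HTML as a string; fetching the page and confirming that the canonical URL returns 200 are separate steps not shown here. `indexability_signals` is a hypothetical helper name: it returns the canonical URL(s) it finds and whether a robots meta tag contains `noindex`. It does not check the `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser


class IndexabilitySignals(HTMLParser):
    """Collects canonical URLs and robots meta directives from a page's HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []
        self.robots_directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None.
        attr = {name: value or "" for name, value in attrs}
        if tag == "link" and "canonical" in attr.get("rel", "").lower().split():
            self.canonicals.append(attr.get("href", ""))
        elif tag == "meta" and attr.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attr.get("content", "").split(","):
                self.robots_directives.add(directive.strip().lower())


def indexability_signals(html: str) -> tuple[list[str], bool]:
    """Return (canonical URLs found, whether a robots meta tag says noindex)."""
    parser = IndexabilitySignals()
    parser.feed(html)
    parser.close()
    return parser.canonicals, "noindex" in parser.robots_directives
```

More than one canonical in the returned list is worth investigating too, since conflicting hints are easy to miss.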

Click on the question mark on the right to see instructions on how to fix each issue. For more detailed instructions, click on the “Learn more” link.

Broken pages are pages on your site that return 4XX errors (client errors, such as 404 Not Found) or 5XX errors (server errors). These pages either won’t be indexed by Google or will be dropped from the index; either way, you’re potentially missing out on traffic and creating a bad experience for your visitors.

Furthermore, if broken pages have backlinks pointing to them, all of that link equity goes to waste.

Broken pages are also a waste of crawl budget—something to watch out for on bigger websites.

How to fix

In AWT, you should:

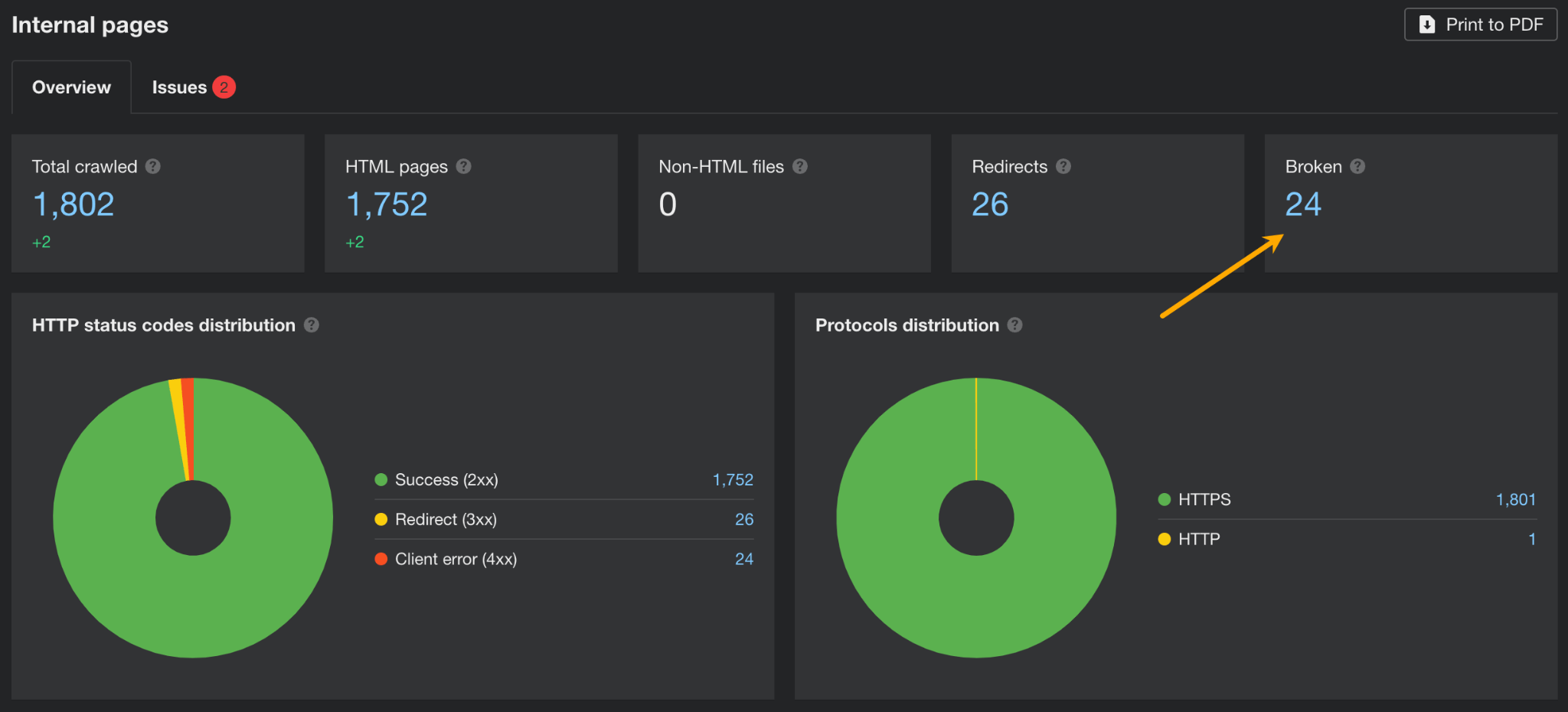

- Open Site Audit.

- Go to the Internal pages report.

- See if there are any broken pages. If so, the Broken section will show a number higher than 0. Click on the number to show affected pages.

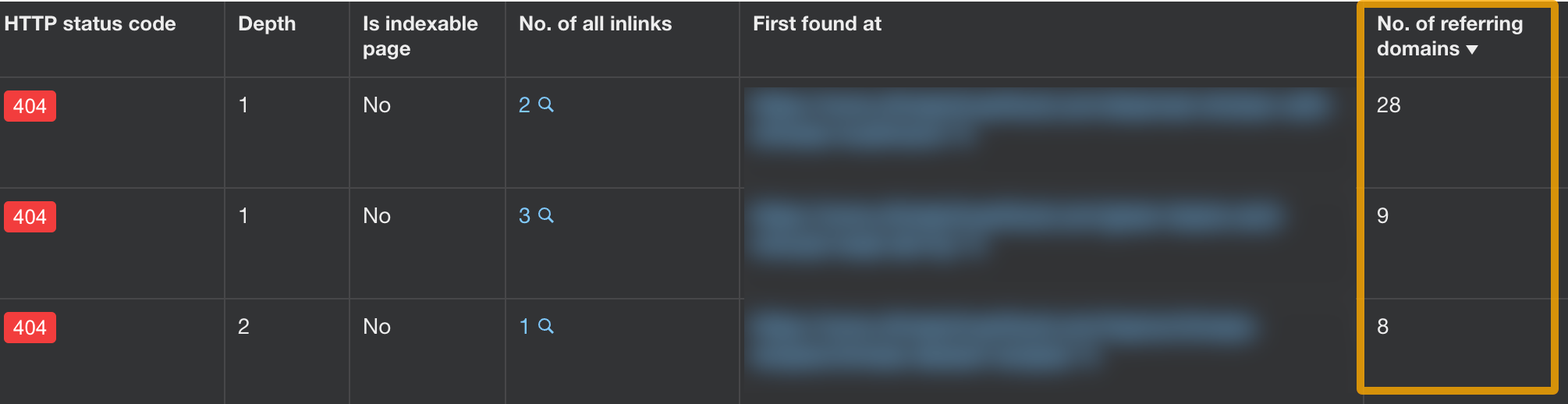

In the report showing pages with issues, it’s a good idea to add a column for the number of referring domains. This will help you make the decision on how to fix the issue.
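If you export the report, the same prioritization takes only a few lines. The sketch assumes a hypothetical export with URL, HTTP status, and referring-domains columns (the `PageRow` fields are made up for illustration); it keeps only 4XX/5XX pages and sorts them so the ones with the most referring domains come first.

```python
from dataclasses import dataclass


@dataclass
class PageRow:
    """One row of a hypothetical export: URL, HTTP status, referring domains."""
    url: str
    status: int
    referring_domains: int


def broken_pages_by_priority(rows: list[PageRow]) -> list[tuple[str, str, int]]:
    """Keep 4XX/5XX pages and sort them by referring domains, highest first."""
    broken = [row for row in rows if 400 <= row.status <= 599]
    broken.sort(key=lambda row: row.referring_domains, reverse=True)
    # Label each page with its status class ("4XX" or "5XX").
    return [(row.url, f"{row.status // 100}XX", row.referring_domains) for row in broken]
```

Pages at the top of the list are where the most link equity is going to waste, so they’re the natural place to start.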

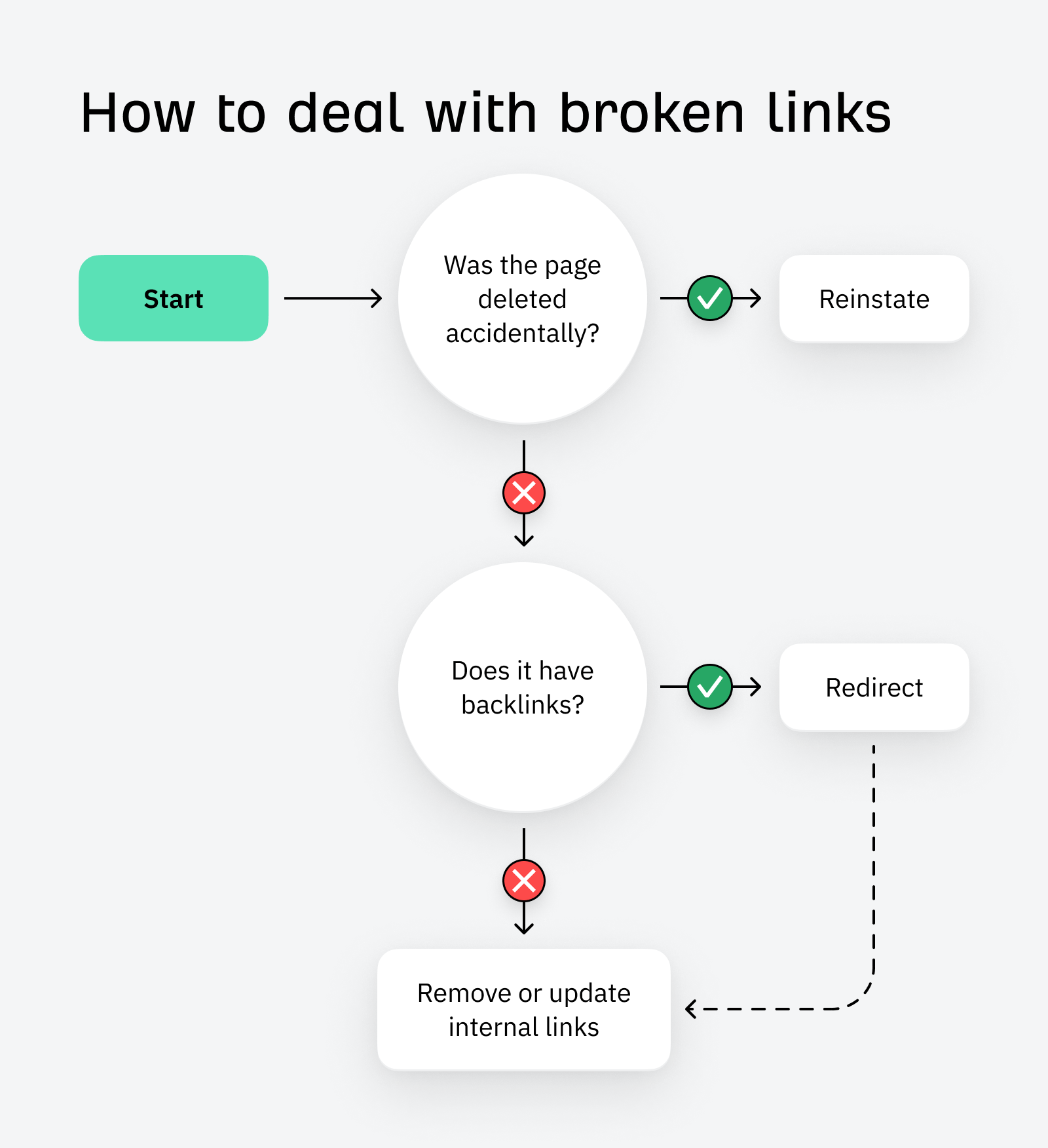

Fixing broken pages (4XX error codes) is fairly simple, but there’s more than one way to do it. Here’s a short diagram explaining the process:

Dealing with server errors (5XX status codes) can be trickier, as there are different possible reasons for a server to be unresponsive. Read this short guide for troubleshooting.

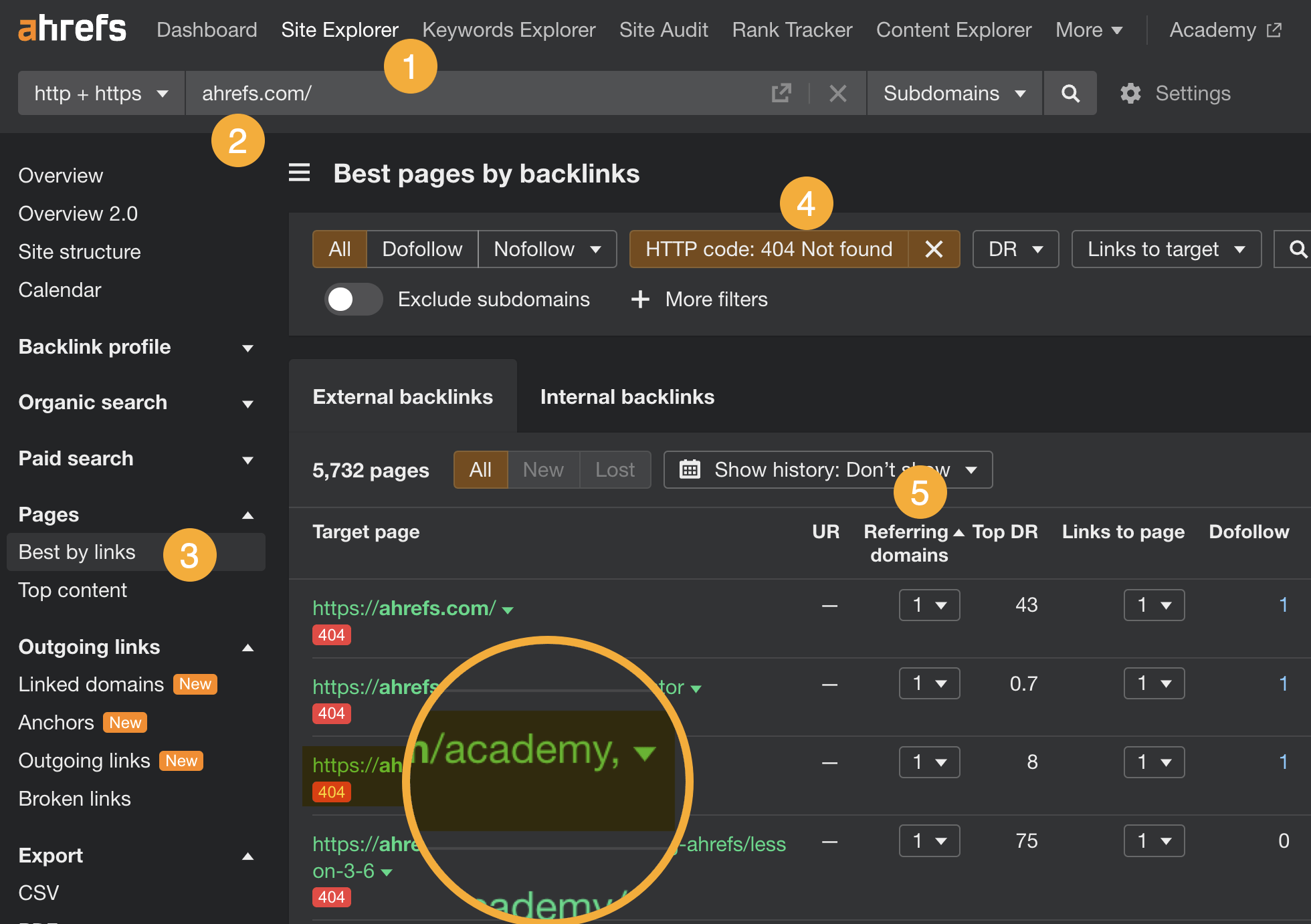

With AWT, you can also see 404s that were caused by incorrect links to your website. While this is not a technical issue per se, reclaiming those links may give you an additional SEO boost.

- Go to Site Explorer

- Enter your domain

- Go to the Best by links report

- Add a “404 not found” filter

- Then sort the report by referring domains from high to low

An internal link is any hyperlink leading to a page or resource on the same website. Internal links can affect rankings by:

- Helping search engines like Google to find and crawl pages

- Boosting pages with PageRank (aka link juice or link equity) passed down from stronger pages.

In the worst-case scenario, pages that you want to rank won’t have any internal links at all. These pages are called orphan pages. Web crawlers have limited ability to reach them (only via the sitemap or backlinks), and no link equity flows to them from other pages on your site.

A more likely scenario is that some pages could rank higher or rank for additional keywords if they had more relevant links.

How to fix

- Go to Site Audit.

- Open the Links report.

- Open the Issues tab.

- Scroll to the Indexable category.

An orphan page needs to be either linked to from some other page on your website or deleted if a given page holds no value to you.

Ahrefs’ Site Audit can find orphan pages as long as they have backlinks or are included in the sitemap. For a more thorough search for this issue, you will need to analyze server logs to find orphan pages with hits. Find out how in this guide.
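The underlying idea is a set difference: URLs you want indexed (for example, from your sitemap) minus URLs that at least one other internal page links to. A minimal sketch, assuming you already have the sitemap URLs and a list of internal links from a crawl; the function name and input shapes are illustrative, and a page linking to itself doesn’t count.

```python
from collections.abc import Iterable


def find_orphans(sitemap_urls: Iterable[str], internal_links: Iterable[tuple[str, str]]) -> list[str]:
    """
    sitemap_urls:   URLs you want indexed (e.g., from your XML sitemap).
    internal_links: (source_url, target_url) pairs collected by a crawl.
    Returns the sitemap URLs that no other internal page links to.
    """
    linked_to = {target for source, target in internal_links if source != target}
    return sorted(set(sitemap_urls) - linked_to)
```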

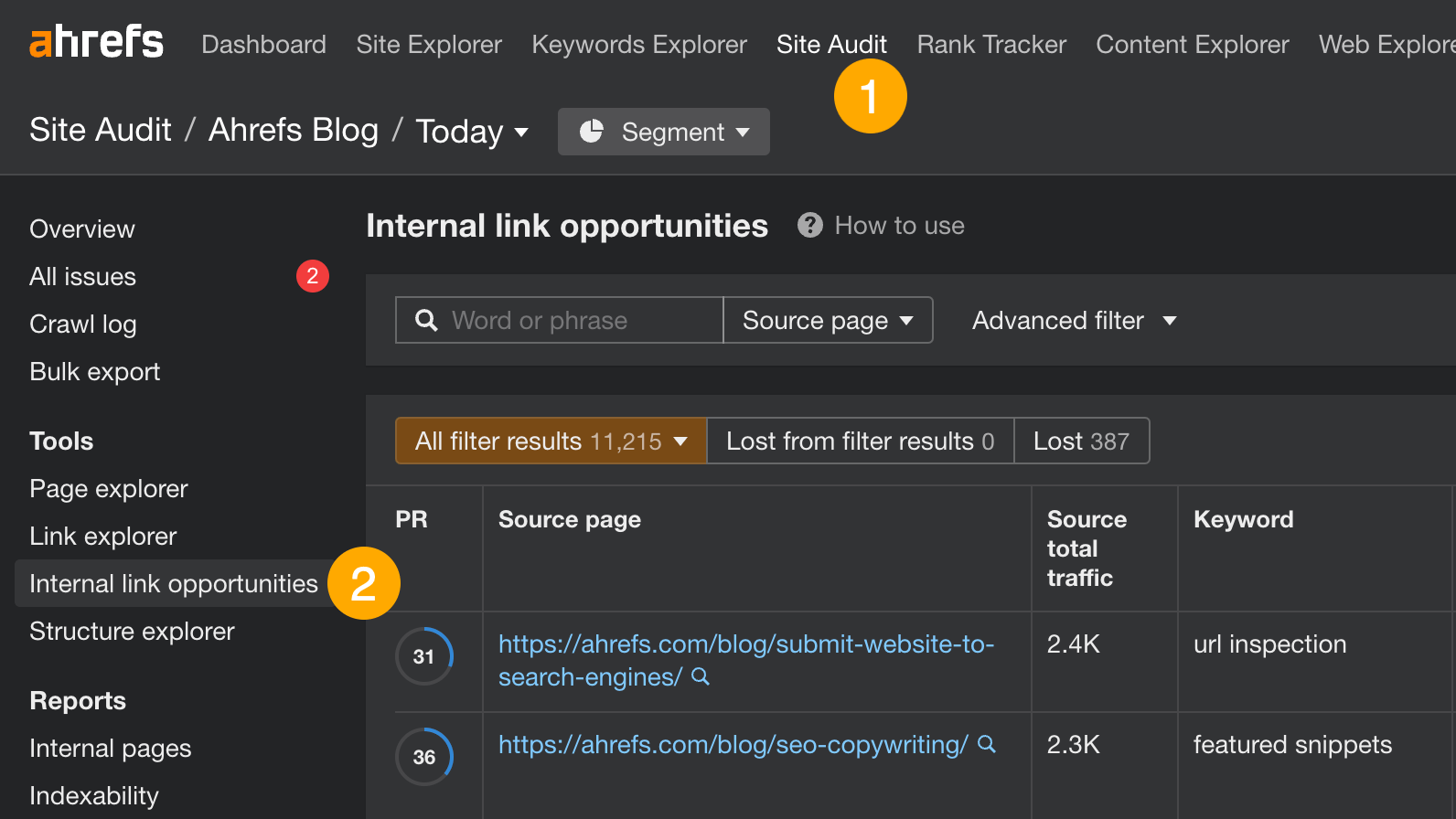

As for internal link opportunities, AWT identifies these automatically:

- Go to Site Audit.

- Open the Internal link opportunities tool.

Focus on the columns labeled “source page,” “keyword context,” and “target page” in the report. These columns tell you which page to link from, which page to link to, and where on the page the link should be added.
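To make the three columns concrete, here’s a rough approximation of the idea: find pages that mention a target page’s keyword but don’t link to it yet. This is an assumption about the general approach, not how AWT actually computes its suggestions; the page data, keyword, and function name are hypothetical, and matching is a simple case-insensitive whole-phrase search.

```python
import re


def link_opportunities(
    pages: dict[str, tuple[str, set[str]]],
    targets: dict[str, str],
    context_chars: int = 30,
) -> list[tuple[str, str, str]]:
    """
    pages:   {source_url: (plain text of the page, set of URLs it already links to)}
    targets: {keyword: target_url}
    Returns (source page, keyword context, target page) rows.
    """
    rows = []
    for source, (text, outlinks) in pages.items():
        for keyword, target in targets.items():
            # Skip self-links and pages that already link to the target.
            if source == target or target in outlinks:
                continue
            match = re.search(rf"\b{re.escape(keyword)}\b", text, flags=re.IGNORECASE)
            if match:
                # Keep a little surrounding text so you can see where the link would go.
                start = max(0, match.start() - context_chars)
                rows.append((source, text[start:match.end() + context_chars], target))
    return rows
```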

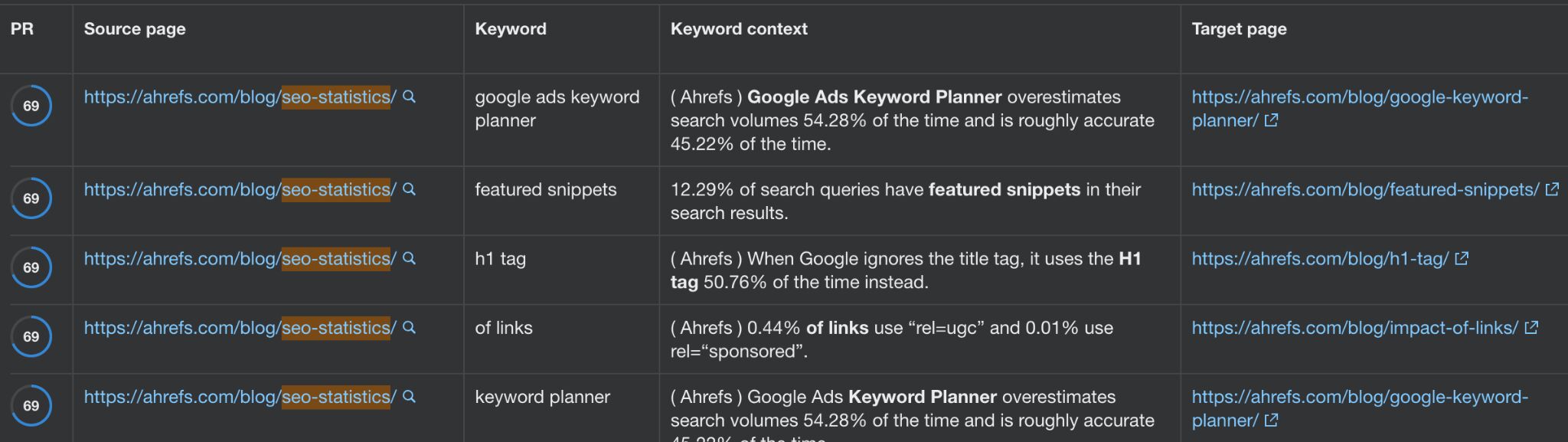

For example, there are a few relevant internal links we can add to our list of SEO statistics:

Having a mobile-friendly website is a must for SEO. Two reasons:

- Google uses mobile-first indexing — it’s mostly using the content of mobile pages for indexing and ranking pages.

- Mobile experience is part of the Page Experience signals — while Google will allegedly always “promote” the page with the best content, page experience can be a tiebreaker for pages offering content of similar quality.

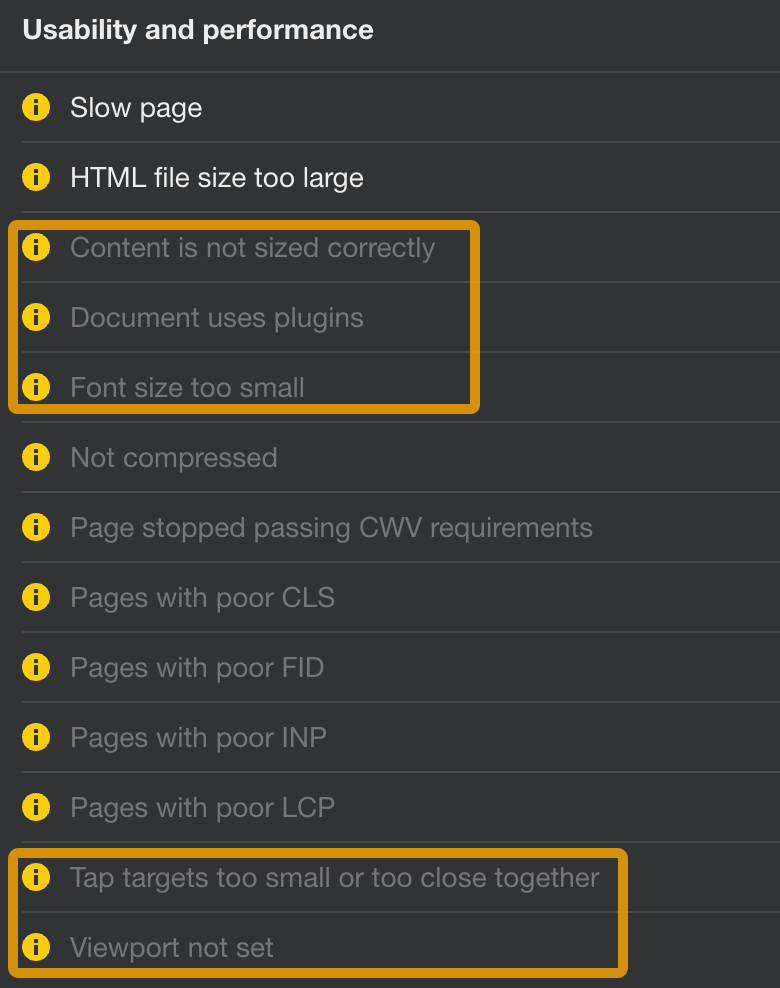

Here are some examples of these issues:

- Viewport not set — when a page does not have a

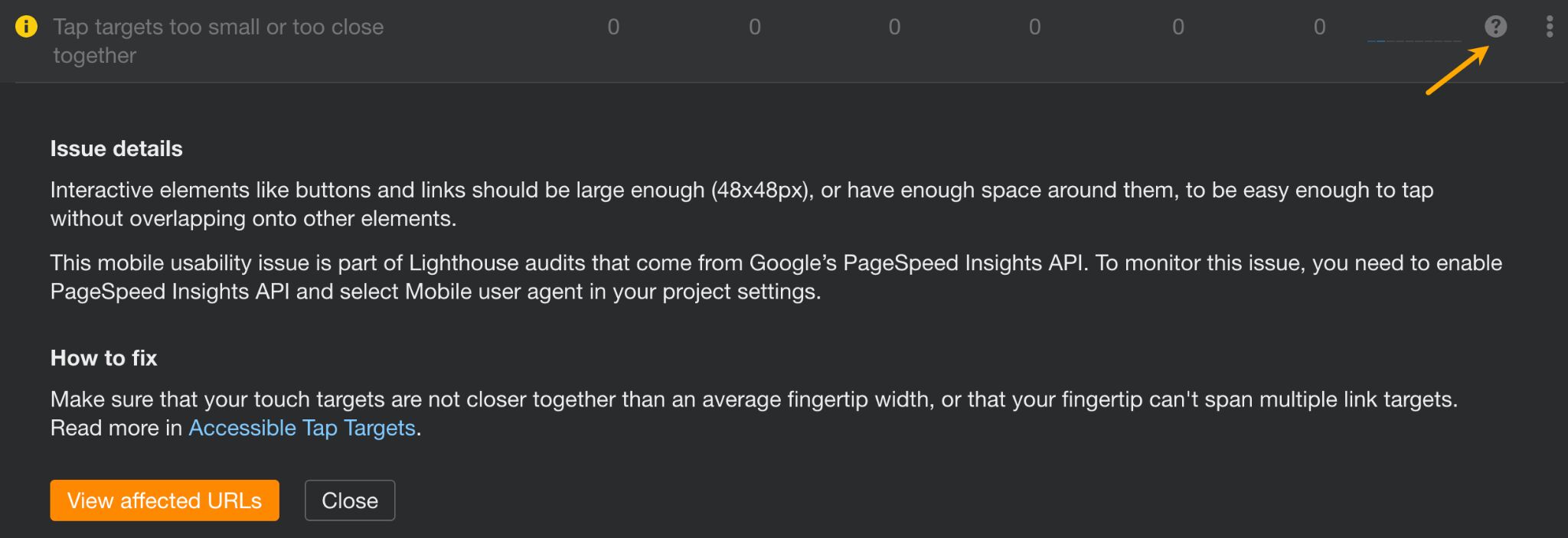

<meta name=“viewport”>,tagorwidthorinitial-scaleparameters. - Tap targets too small or too close together — when interactive elements are not large enough or their tappable areas overlap.

- Document uses plugins — when a page requires plugins, such as Java or Flash.

How to fix

In Ahrefs Site Audit, look for these issues under the Usability and performance category.

Next, click on the question mark next to the issue name and use the instructions to solve the issues for affected pages (they will require some web development work).

For example, if the tool finds pages with a font too small for mobile devices, you will need to make sure that the viewport tag is set properly and make the font at least 12 px.
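A common form of the tag is `<meta name="viewport" content="width=device-width, initial-scale=1">`. To check a single page for the “Viewport not set” issue, you can look for that tag and its `width` / `initial-scale` parameters. A minimal sketch with Python’s `html.parser`, assuming you have the page HTML as a string; the helper names are hypothetical, and it doesn’t check font sizes or tap targets.

```python
from html.parser import HTMLParser
from typing import Optional


class ViewportFinder(HTMLParser):
    """Records the content attribute of the first <meta name="viewport"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.content: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attr = {name: value or "" for name, value in attrs}
        if tag == "meta" and attr.get("name", "").lower() == "viewport" and self.content is None:
            self.content = attr.get("content", "")


def viewport_is_set(html: str) -> bool:
    """True if a viewport meta tag exists and sets width or initial-scale."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    if finder.content is None:
        return False  # no viewport tag at all
    keys = {part.split("=", 1)[0].strip().lower() for part in finder.content.split(",")}
    return "width" in keys or "initial-scale" in keys
```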

Google uses HTTPS encryption as a small ranking signal. This means you can experience lower rankings if you don’t have a TLS certificate to secure your website.

But even if you do, some pages and/or resources on your pages may still use the HTTP protocol.

How to fix

Assuming you already have an SSL/TLS certificate for all subdomains (if not, do get one), open AWT and follow these steps:

- Open Site Audit

- Go to the Internal pages report

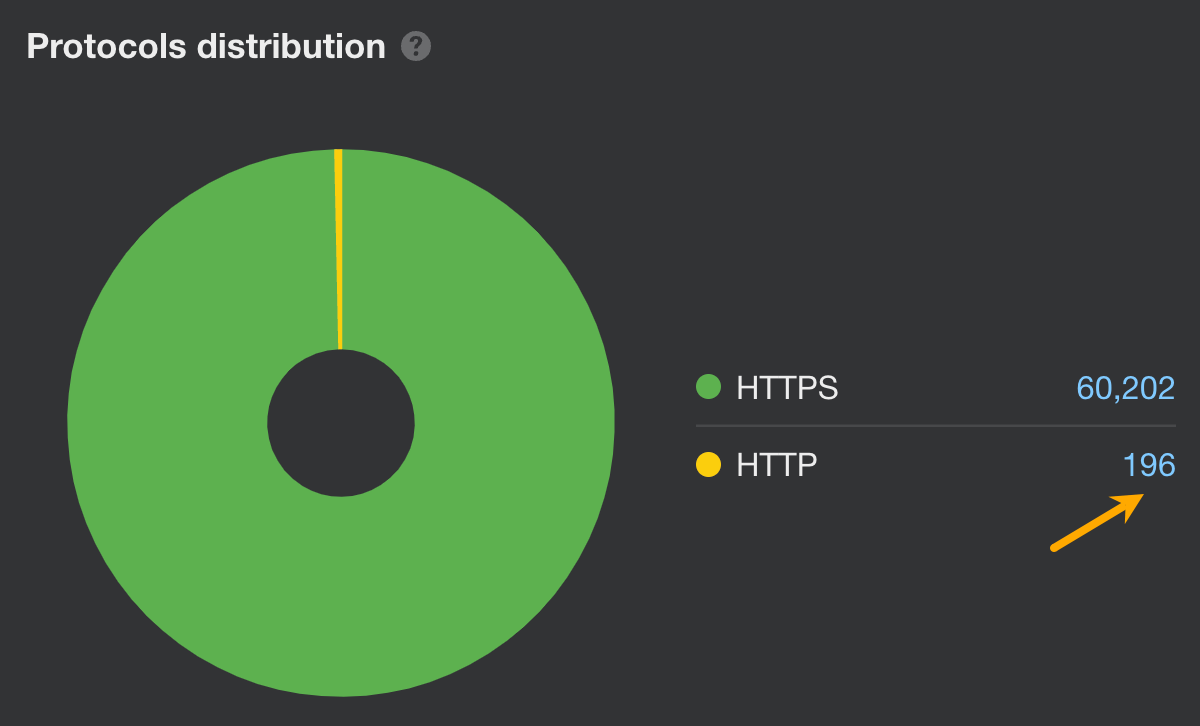

- Look at the protocol distribution graph and click on HTTP to see affected pages

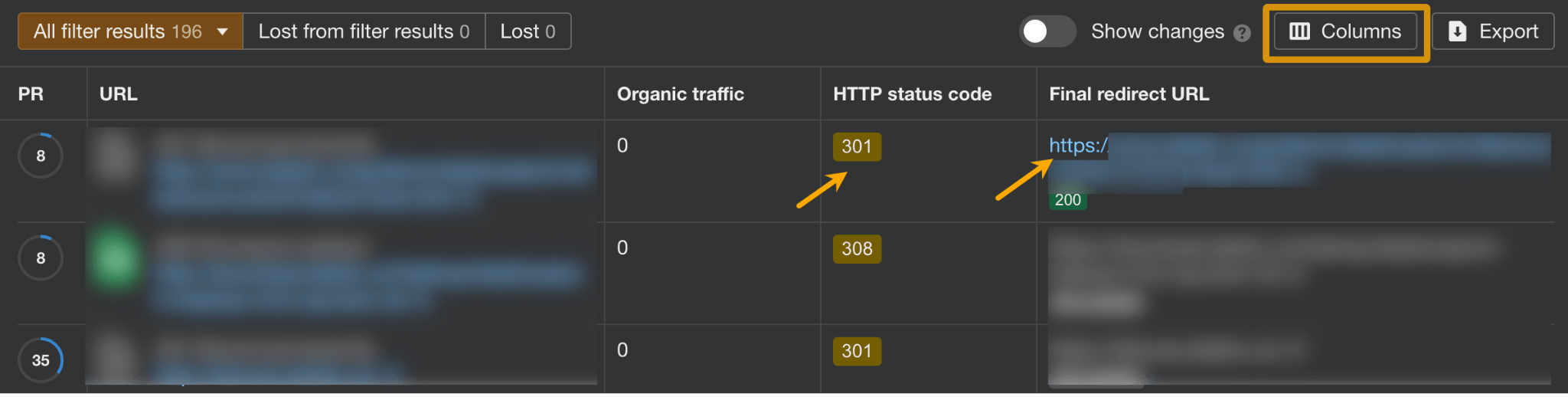

- Inside the report showing pages, add a column for Final redirect URL

- Make sure all HTTP pages are permanently redirected (301 or 308 redirects) to their HTTPS counterparts
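If you record the redirect chain for each HTTP URL (for example, the Final redirect URL column plus the status codes you see along the way), the rule in the last step boils down to a simple check. A sketch under that assumption; the input format and function name are hypothetical.

```python
PERMANENT_REDIRECTS = {301, 308}


def redirects_permanently_to_https(hops: list[tuple[str, int]]) -> bool:
    """
    hops: the chain recorded for one HTTP URL, in order, e.g.
          [("http://domain.com/page", 301), ("https://domain.com/page", 200)].
    True if every hop before the last is a 301/308 and the chain ends on an HTTPS 200.
    """
    if len(hops) < 2:
        return False  # no redirect at all
    *redirects, (final_url, final_status) = hops
    return (
        all(status in PERMANENT_REDIRECTS for _, status in redirects)
        and final_url.startswith("https://")
        and final_status == 200
    )
```

A 302 or 307 in the chain fails the check, since those are temporary redirects.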

Finally, let’s check if any resources on the site still use HTTP:

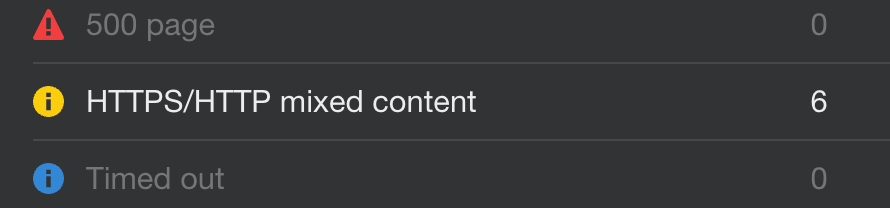

- Inside the Internal pages report, click on Issues.

- Then click on HTTPS/HTTP mixed content to view affected resources.
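To see what the mixed content issue means in practice, here’s a simplified check that scans an HTTPS page’s HTML for subresources requested over plain HTTP. It’s a sketch under explicit assumptions: it only looks at `src` attributes and at `<link href>` for stylesheets, icons, and preloads; it ignores regular `<a href>` links (those are navigation, not subresources); and it doesn’t parse CSS `url()` values or `srcset`.

```python
from html.parser import HTMLParser

# Assumed (not exhaustive) set of <link rel> values that load a subresource.
SUBRESOURCE_LINK_RELS = {"stylesheet", "icon", "preload"}


class MixedContentFinder(HTMLParser):
    """Collects http:// subresource URLs referenced by a page."""

    def __init__(self) -> None:
        super().__init__()
        self.insecure: list[str] = []

    def handle_starttag(self, tag, attrs):
        attr = {name: (value or "").strip() for name, value in attrs}
        candidates = []
        if "src" in attr:  # img, script, iframe, video, etc.
            candidates.append(attr["src"])
        rels = set(attr.get("rel", "").lower().split())
        if tag == "link" and rels & SUBRESOURCE_LINK_RELS and "href" in attr:
            candidates.append(attr["href"])
        self.insecure.extend(url for url in candidates if url.lower().startswith("http://"))


def find_mixed_content(html: str) -> list[str]:
    finder = MixedContentFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure
```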

The quickest way to fix this is to add the following snippet to your .htaccess file or Apache server config:

```apache
<IfModule mod_headers.c>
    Header always set Content-Security-Policy "upgrade-insecure-requests;"
</IfModule>
```

This adds a Content-Security-Policy header with the `upgrade-insecure-requests` directive, which tells browsers to request the page’s insecure (HTTP) resources over HTTPS instead. It only works if those resources are actually available over HTTPS; otherwise, they will fail to load.

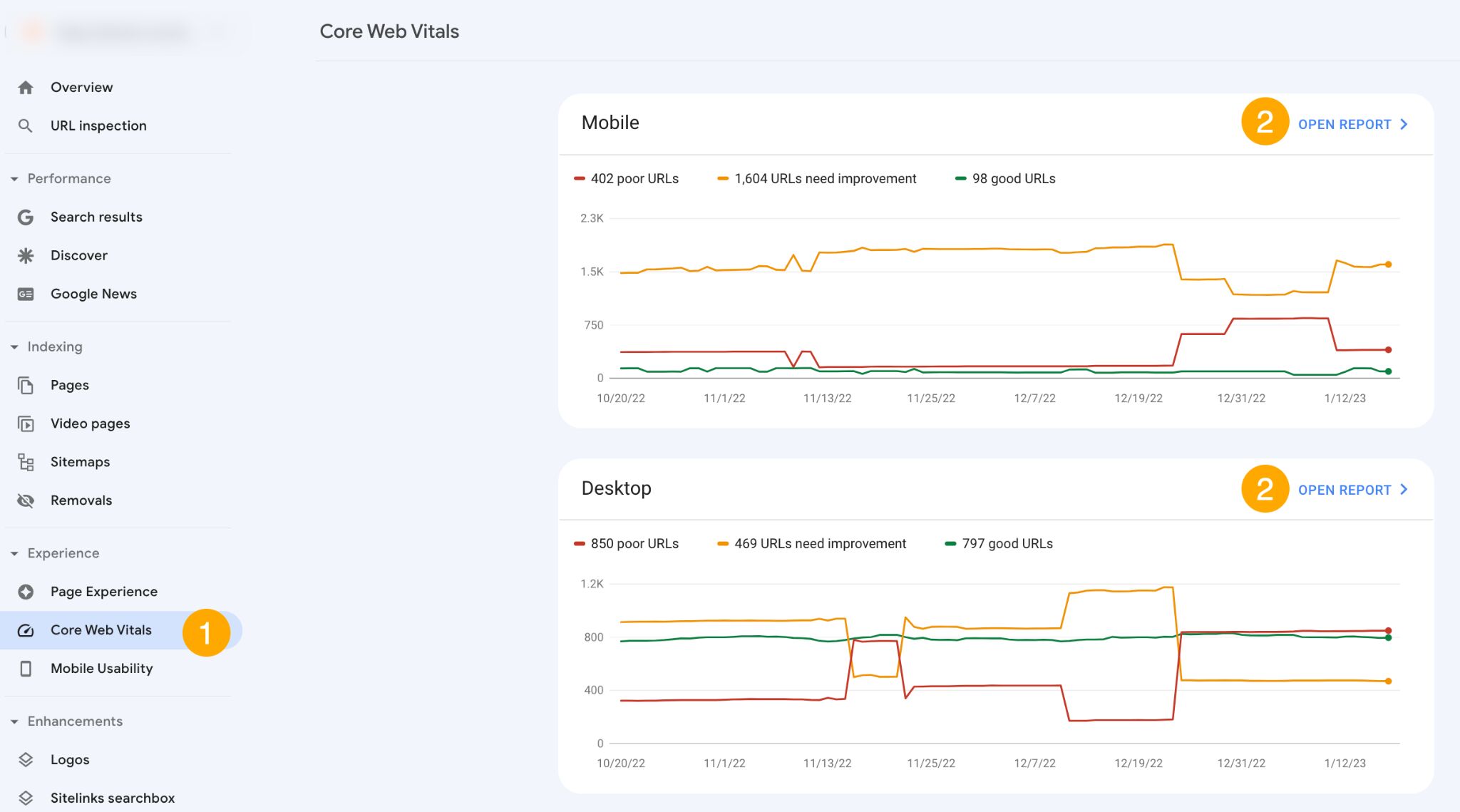

Google’s Core Web Vitals (CWV) are a set of metrics designed to gauge a website’s user experience in terms of loading speed, responsiveness, and visual stability. They are part of Google’s Page Experience signals, which impact search engine rankings.

That said, from an SEO perspective, the goal isn’t to be the fastest or the “most usable” site but to score at least “good” in these three categories:

- Largest Contentful Paint (LCP). This measures how long it takes for the largest content element visible on the screen (like an image, video, or large text block) to render, counted from when the page starts loading. It’s a key indicator of how quickly a visitor can see content on the page.

- Interaction to Next Paint (INP). It evaluates the page’s responsiveness to user actions by measuring how long it takes for the page to react to clicks, taps, and key presses. The INP value reflects the longest response time for these interactions, excluding extreme outliers. This metric replaced First Input Delay (FID) in March 2024.

- Cumulative Layout Shift (CLS). CLS adds up unexpected movements of page elements, reporting the largest burst of layout shifts during the page’s lifetime (not only while it loads). A higher score indicates more shifting, which can be frustrating for users trying to read or interact with the page. This metric helps identify and minimize these shifts to improve the user experience.
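For reference, Google’s published thresholds are: LCP is good at 2.5 seconds or less (poor above 4 seconds), INP is good at 200 ms or less (poor above 500 ms), and CLS is good at 0.1 or less (poor above 0.25), assessed at the 75th percentile of page loads. The sketch below encodes those boundaries; the nearest-rank `p75` helper is a simple approximation and not necessarily how Google aggregates field data.

```python
import math

# "Good" and "poor" boundaries for each Core Web Vital.
# A value at or below the first number is good; above the second is poor.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def p75(samples: list[float]) -> float:
    """75th percentile using the nearest-rank method (a simple approximation)."""
    ordered = sorted(samples)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]


def rate(metric: str, value: float) -> str:
    """Classify a metric value as good, needs improvement, or poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"
```

For example, `rate("LCP", p75([1.8, 2.1, 2.4, 3.9]))` returns `"good"`, because the 75th-percentile value is 2.4 seconds.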

How to fix

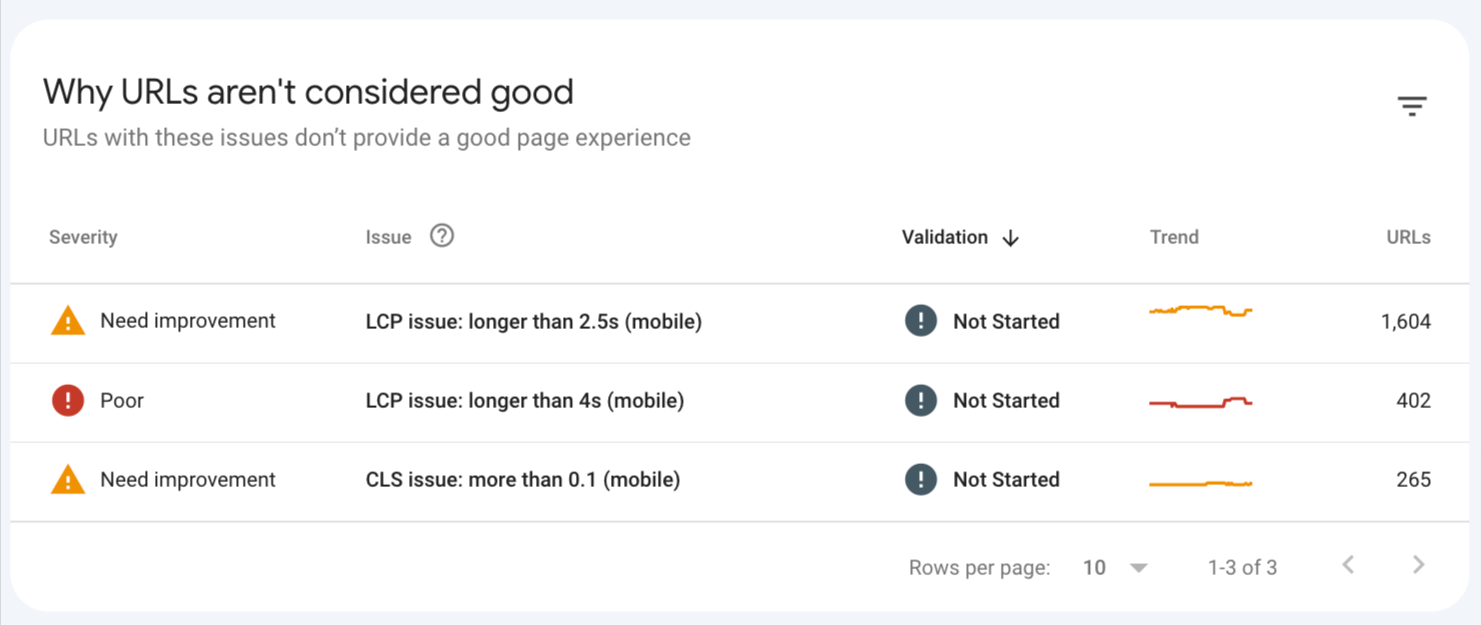

Probably the fastest way to check CWV for an entire site is to use the free Google Search Console:

- Click on Core Web Vitals in the Experience section of the reports.

- Click Open report in each section to see how your website scores.

- For pages that aren’t considered good, you’ll see a special section at the bottom of the report. Use it to see pages that need your attention.

Optimizing for CWV may take some time and some web dev skills. This may include things like moving to a faster (or closer) server, compressing images, optimizing CSS, etc. We’re explaining how to do this in the third part of this guide to CWV.

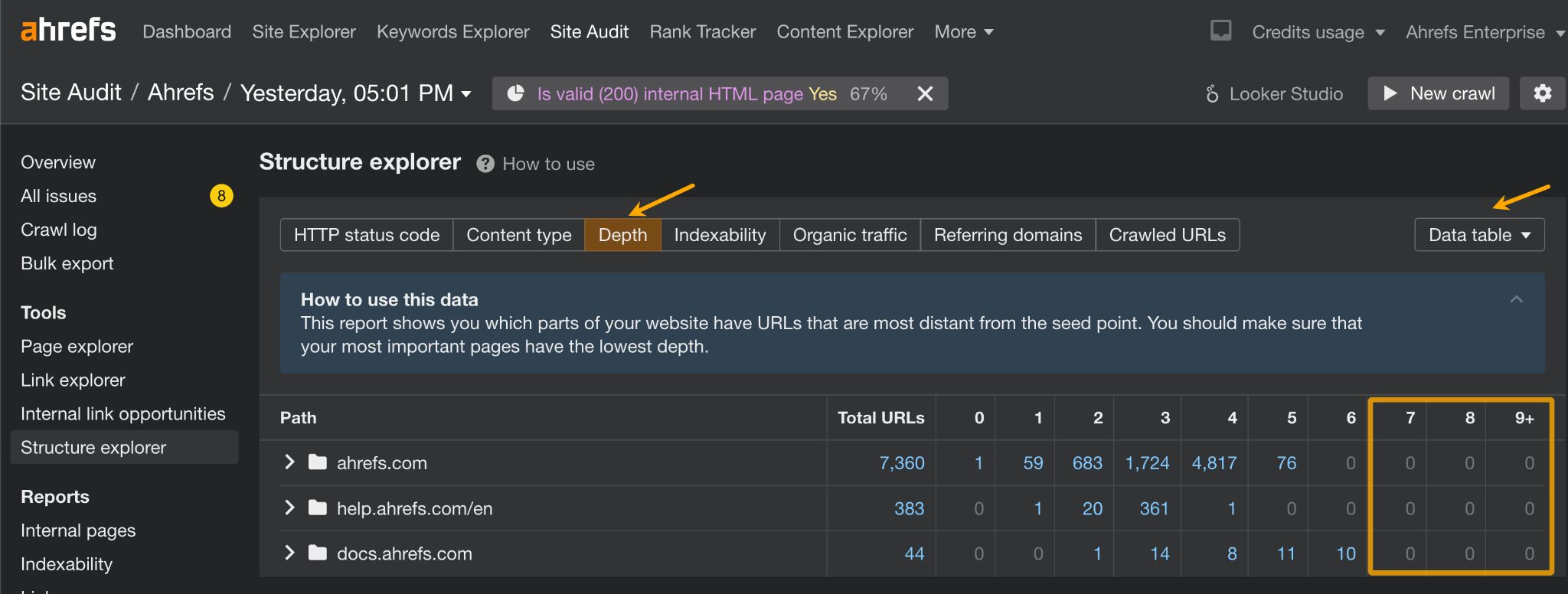

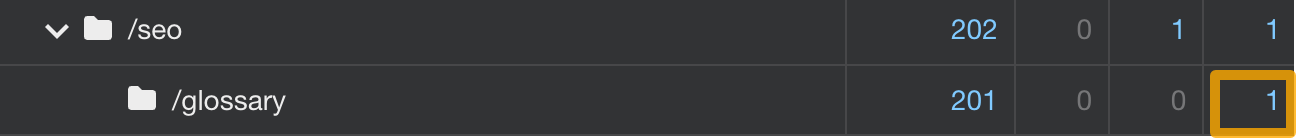

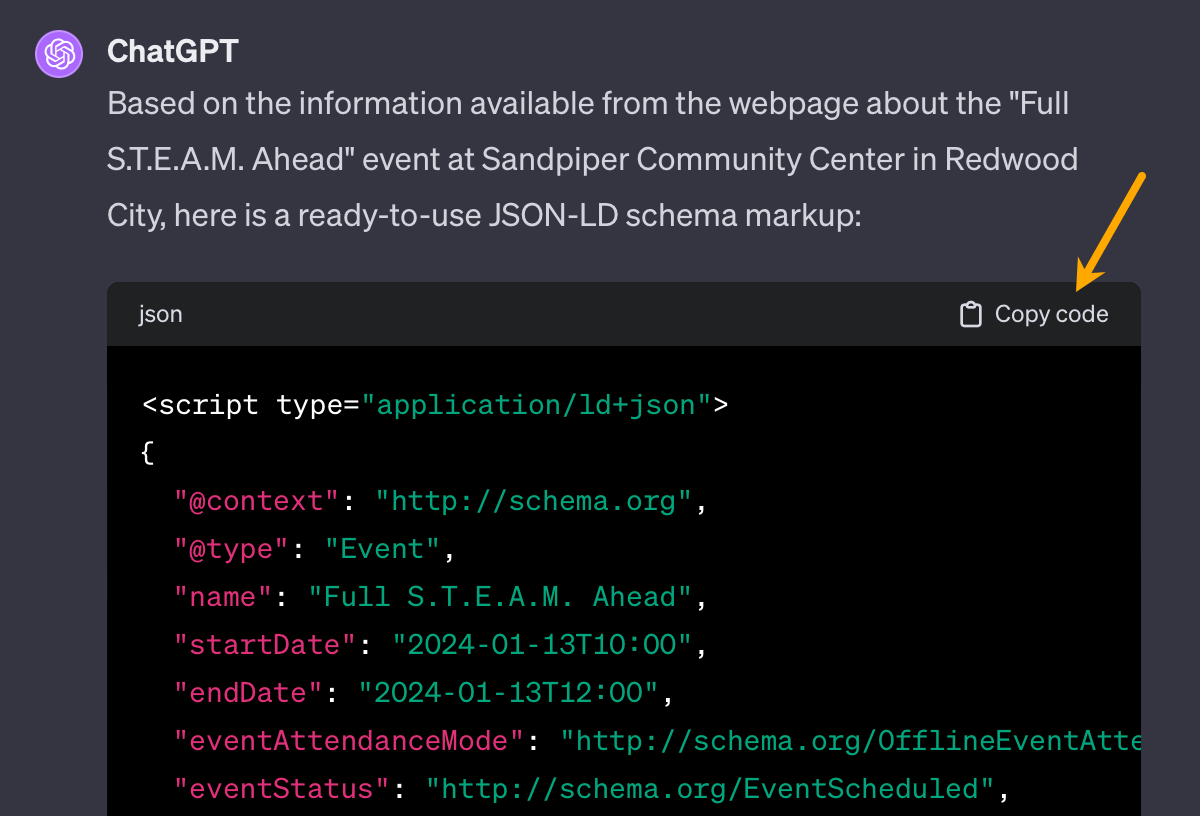

Unoptimized website structure, in the context of technical SEO, is mainly about important organic pages being buried too deep in the website structure.

Pages that are nested too deep (users need more than six clicks from the homepage to get to them) will receive less link equity from your homepage (likely the page with the most backlinks), which may affect their rankings. This is because link value diminishes with every link “hop.”
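Click depth is the length of the shortest path from the homepage through internal links, which a breadth-first search computes. A minimal sketch, assuming a hypothetical `links` mapping of each page to the pages it links to (as a crawler would collect it); pages more than six clicks deep are flagged to match the threshold above. Pages not reachable from the homepage at all (orphans) won’t appear in the result.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], homepage: str) -> dict[str, int]:
    """Breadth-first search: fewest clicks from the homepage to each reachable page."""
    depth = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def too_deep(links: dict[str, list[str]], homepage: str, max_clicks: int = 6) -> list[str]:
    """Pages reachable from the homepage but more than `max_clicks` clicks away."""
    return sorted(page for page, d in click_depths(links, homepage).items() if d > max_clicks)
```

Adding a single link from a shallow page (as described in the fix below) immediately shortens the path for everything beneath it.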

Website structure is important for other reasons too, such as the overall user experience, crawl efficiency, and helping Google understand the context of your pages. Here, we’ll only focus on the technical aspect, but you can read more about the topic in our full guide: Website Structure: How to Build Your SEO Foundation.

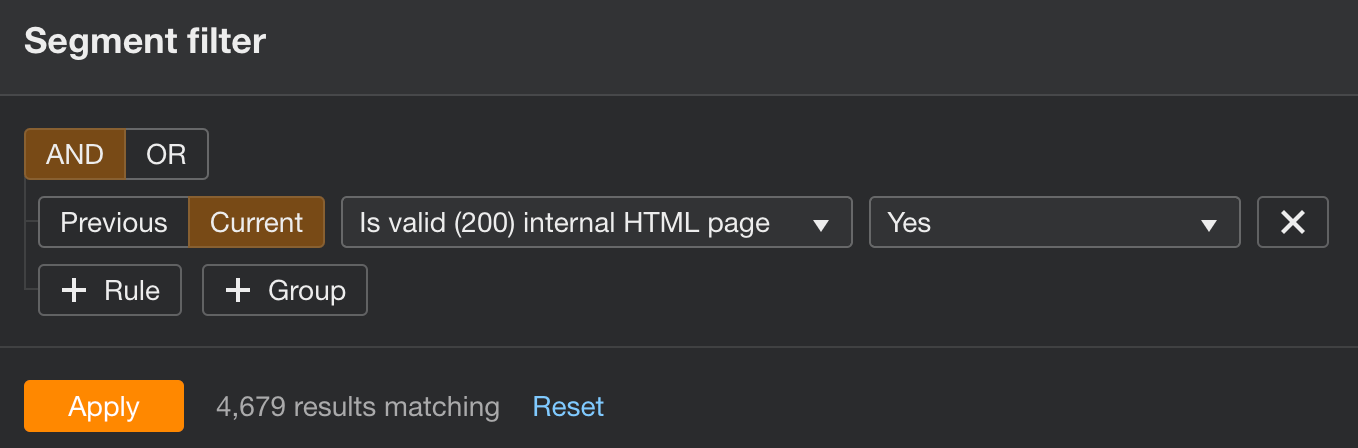

How to fix

- Open Site Audit and go to the Structure Explorer (in the menu on the left-hand side).

- Configure the Segment to only valid HTML pages and click Apply.

- Switch to the Depth tab, and set the data type to Data table.

- Use the graph to investigate pages that are more than six clicks away from the homepage.

The way to fix the issue is to link to deeper nested pages from pages closer to the homepage. More important pages could find their place in the site navigation, while less important ones can simply be linked from pages a few clicks closer to the homepage.

It’s a good idea to weigh user experience and the business role of your website when deciding what goes into sitewide navigation.

For example, we could probably give our SEO glossary a slightly better chance of getting ahead of organic competitors by including it in the main site navigation. Yet we decided not to, because it isn’t an important page for users who aren’t specifically looking for this type of information.

Instead, we moved the glossary up only one notch by including a link to it inside the beginner’s guide to SEO (which itself is just one click away from the homepage).

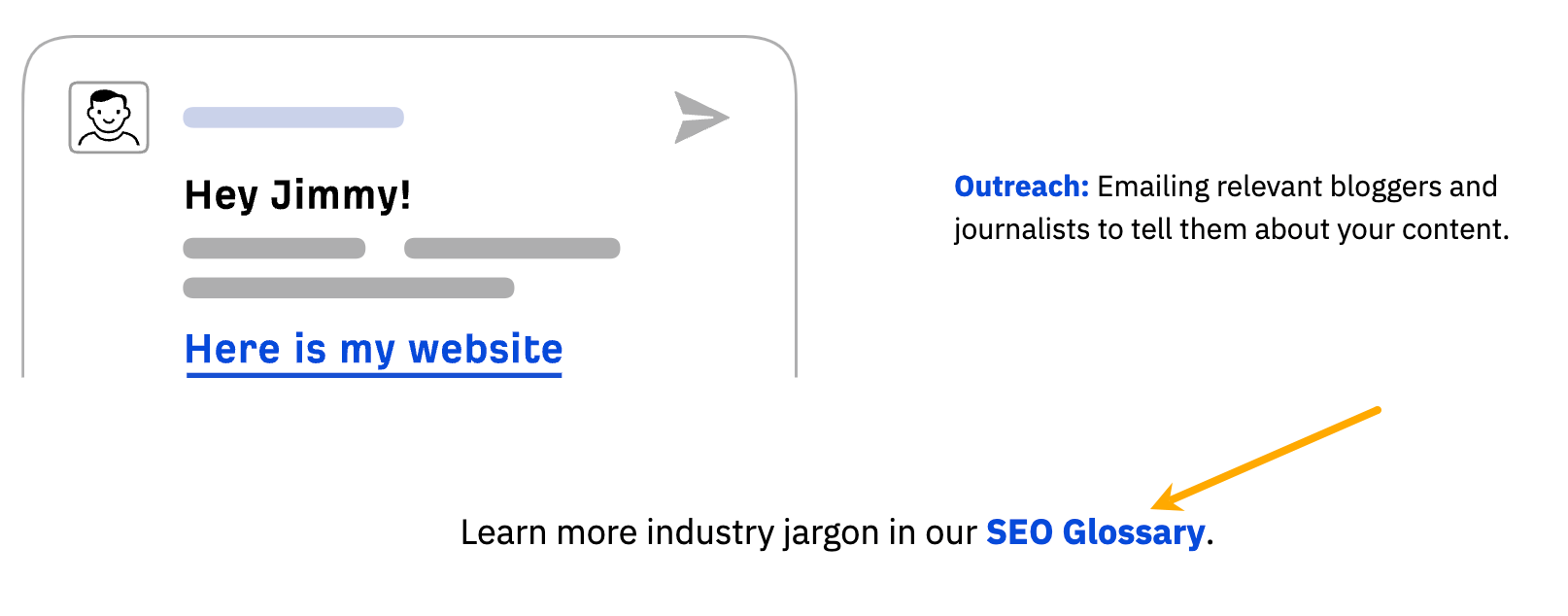

Schema markup is code that helps Google understand the information on a page, which can be used to show rich results (also known as rich snippets).

Schema markup is not a ranking factor, and it can’t make you rank higher in text results.

However, schema markup can make your pages eligible to rank in rich results. Google displays these for some search queries in spots that really stand out in the SERPs; some even show up on top of the standard text results.

This is especially important for pages with the following types of content:

- Recipes.

- Job ads.

- Videos.

- News articles.

- Information about events/landing pages for events.

- Details about movies.

How to fix

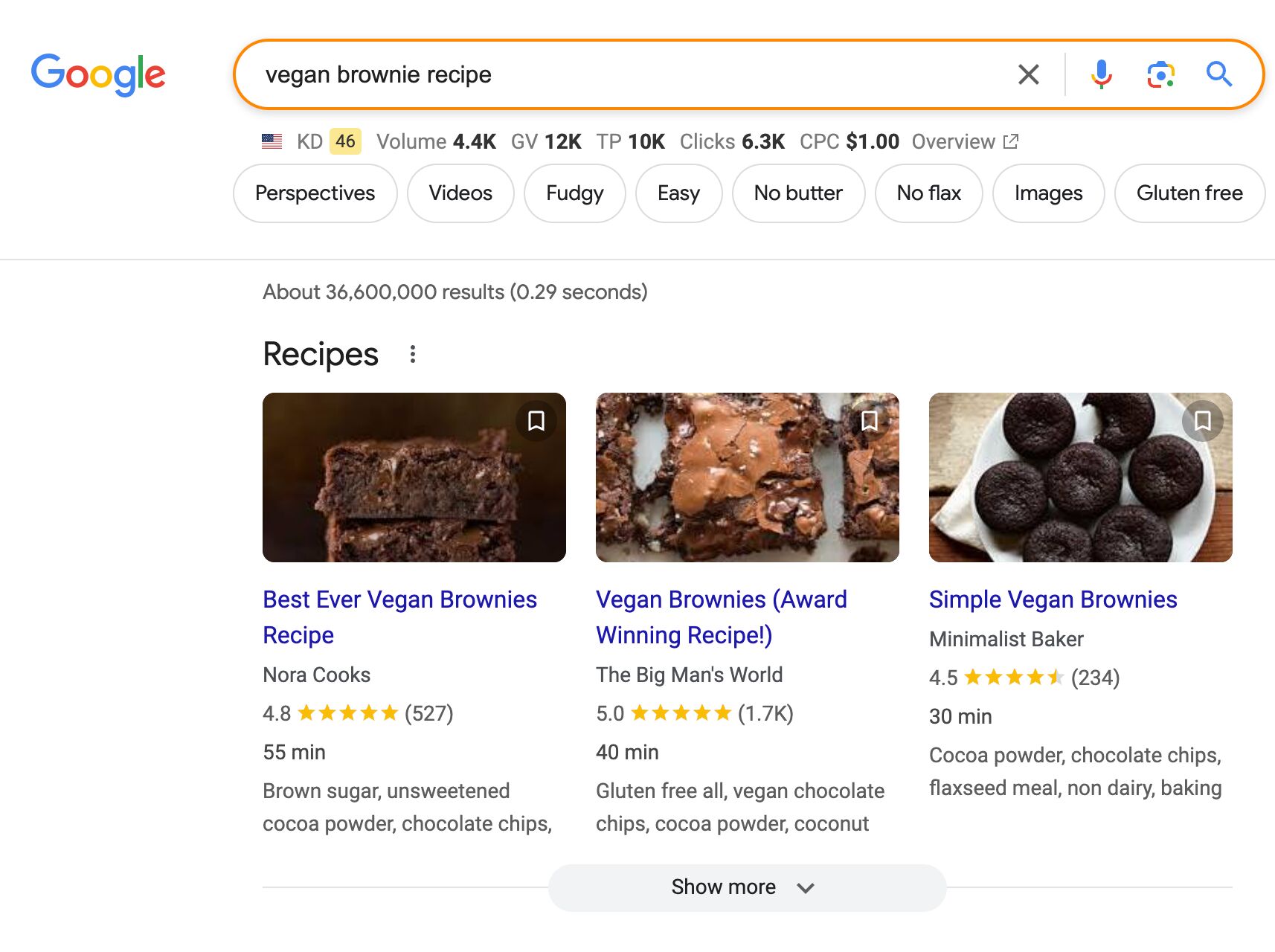

There are various ways to generate and validate schema for free. You can use a tool like Merkle or Schema, or semi-automate the process with ChatGPT.

ChatGPT is worth highlighting here because it can recognize the relevant schema type for most pages. Simply plug in the URL if your page is already live (or upload the copy if it isn’t) and ask the AI to generate the schema. You can use the following prompt:

Generate a ready-to-use schema in JSON-LD format for the following: [your URL or content].

Next, copy the code and run it through Google’s Rich Results Test to double-check that everything is in order.

Finally, apply the code to the page. If you’re using a popular CMS like WordPress or Wix, look for a schema markup plugin/app. Otherwise, you may need to add the code to each eligible page manually.
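If you’d rather build the markup yourself, or want to sanity-check what an AI tool returns, a minimal JSON-LD object is easy to assemble. The sketch below uses schema.org’s `Article` type with a few common properties; the helper name and example values are hypothetical, and the output should still go through the Rich Results Test, since Google’s requirements vary by rich result type.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str, url: str) -> str:
    """Build a minimal schema.org Article object wrapped in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2024-03-12"
        "mainEntityOfPage": url,
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"
```

The returned string is what you would paste into the page’s HTML (or into your CMS’s schema plugin field, if it accepts raw JSON-LD).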

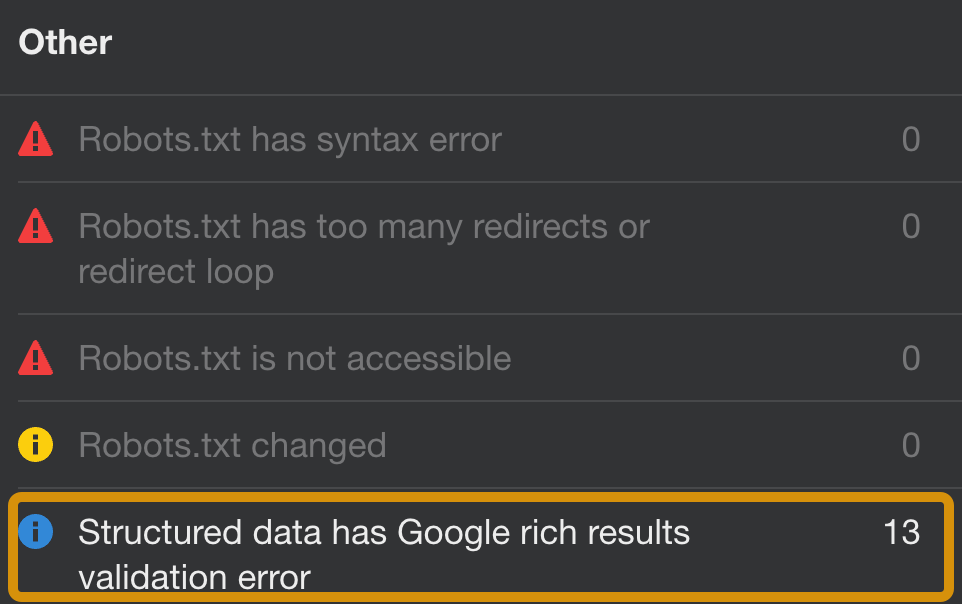

You can also use AWT to look for any schema issues across the entire site. You will find these issues under the Others section of the All issues report.

Optimizing pages with schema markup is an effective way to get more clicks from pages that already rank high—we’re explaining the details in this guide to schema.

Duplicate content happens when exact or near-duplicate content appears on the web in more than one place.

In cases of duplicate content, Google will choose one of the pages to show in the SERPs, but it won’t necessarily be the page you want to be indexed.

If we find exactly the same information on multiple pages on the web, and someone searches specifically for that piece of information, then we’ll try to find the best matching page ( … ) So if you have the same content on multiple pages then we won’t show all of these pages.

Duplicate content isn’t necessarily the result of deliberately or accidentally creating similar pages. There are other, less obvious causes, such as faceted navigation, tracking parameters in URLs, or serving the same page both with and without a trailing slash.

How to fix

First, check if your website is available under only one URL. If your site is accessible as:

- http://domain.com

- http://www.domain.com

- https://domain.com

- https://www.domain.com

… then Google will see all of those URLs as different websites.

The easiest way to check if users can browse only one version of your website: type in all four variations in the browser, one by one, hit enter, and see if they get redirected to the master version (ideally, the one with HTTPS).
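If you’d like to keep a record of this check, the four variants and the comparison can be sketched as below. The assumptions: `domain.com` is a placeholder, and `final_urls` holds where each variant actually ended up (noted from the browser or a crawler); nothing here makes network requests, and the function names are hypothetical.

```python
def host_variants(domain: str) -> list[str]:
    """The four protocol/host combinations a site can be reached under."""
    bare = domain.removeprefix("www.")
    return [f"{scheme}://{host}/" for scheme in ("http", "https") for host in (bare, f"www.{bare}")]


def variants_not_redirecting(final_urls: dict[str, str], master: str) -> list[str]:
    """
    final_urls maps each variant to the URL you actually ended up on.
    Returns the variants that did not land on `master` (trailing slash ignored).
    """
    return [
        variant
        for variant, final in final_urls.items()
        if final.rstrip("/") != master.rstrip("/")
    ]
```

An empty list means every variant already lands on the master version.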

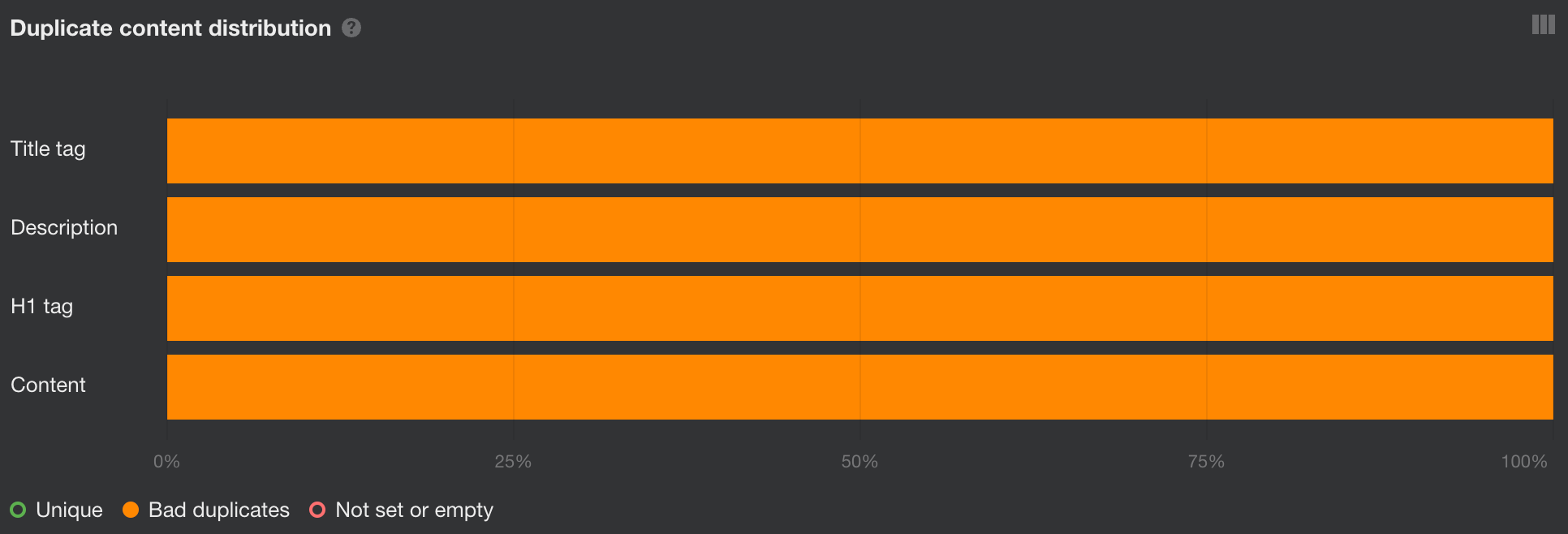

You can also go straight into Site Audit’s Duplicates report. If you see 100% bad duplicates, that is likely the reason.

In this case, choose one version that will serve as canonical (likely the one with HTTPS) and permanently redirect other versions to it.

Then run a New crawl in Site Audit to see if there are any other bad duplicates left.

There are a few ways you can handle bad duplicates, depending on the case. Learn how to solve them in our guide.

Final thoughts

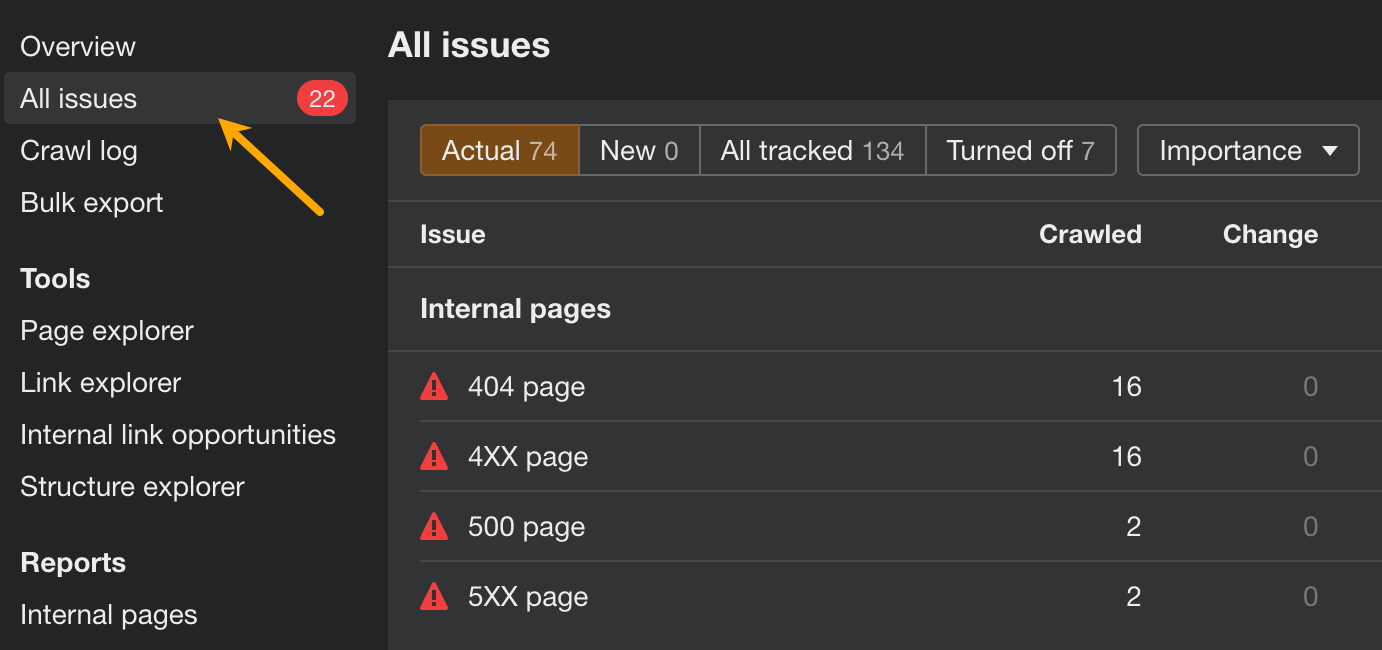

When you’re done fixing the more pressing issues, dig a little deeper to keep your site in perfect SEO health. Open Site Audit and go to the All issues report to see other issues regarding on-page SEO, image optimization, redirects, localization, and more. In each case, you will find instructions on how to deal with the issue.

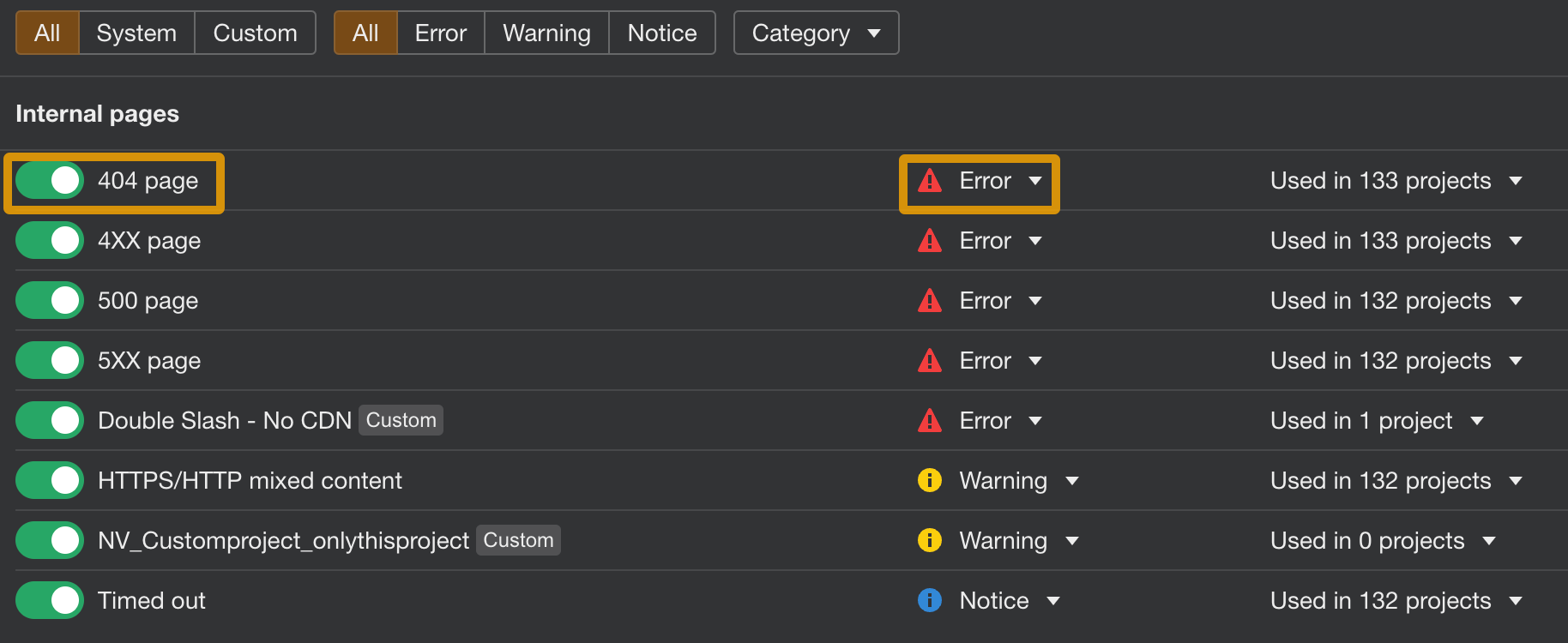

You can also customize this report by turning issues on/off or changing their priority.

Did I miss any important technical issues? Let me know on X or LinkedIn.