But here’s the truth: SEO is filled with repetitive, time-consuming tasks that Python can automate in minutes. Things like checking for broken links, scraping metadata, analyzing rankings, and auditing on-page SEO are all doable with a few lines of code. And thanks to tools like ChatGPT and Google Colab, it’s never been easier to get started.

In this guide, I’ll show you how to start learning.

Python lets you automate those repetitive tasks, extract insights from massive datasets (like tens of thousands of keywords or URLs), and build technical skills that help you tackle pretty much any SEO problem: debugging JavaScript issues, parsing complex sitemaps, or working with APIs.

Beyond that, learning Python helps you:

- Understand how websites and web data work (believe it or not, the internet is not tubes).

- Collaborate with developers more effectively (how else are you planning to generate thousands of location-specific pages for that programmatic SEO campaign?)

- Learn programming logic that translates to other languages and tools, like building Google Apps Scripts to automate reporting in Google Sheets, or writing Liquid templates for dynamic page creation in headless CMSs.

And in 2025, you’re not learning Python alone. LLMs can explain error messages. Google Colab lets you run notebooks without setup. It’s never been easier.

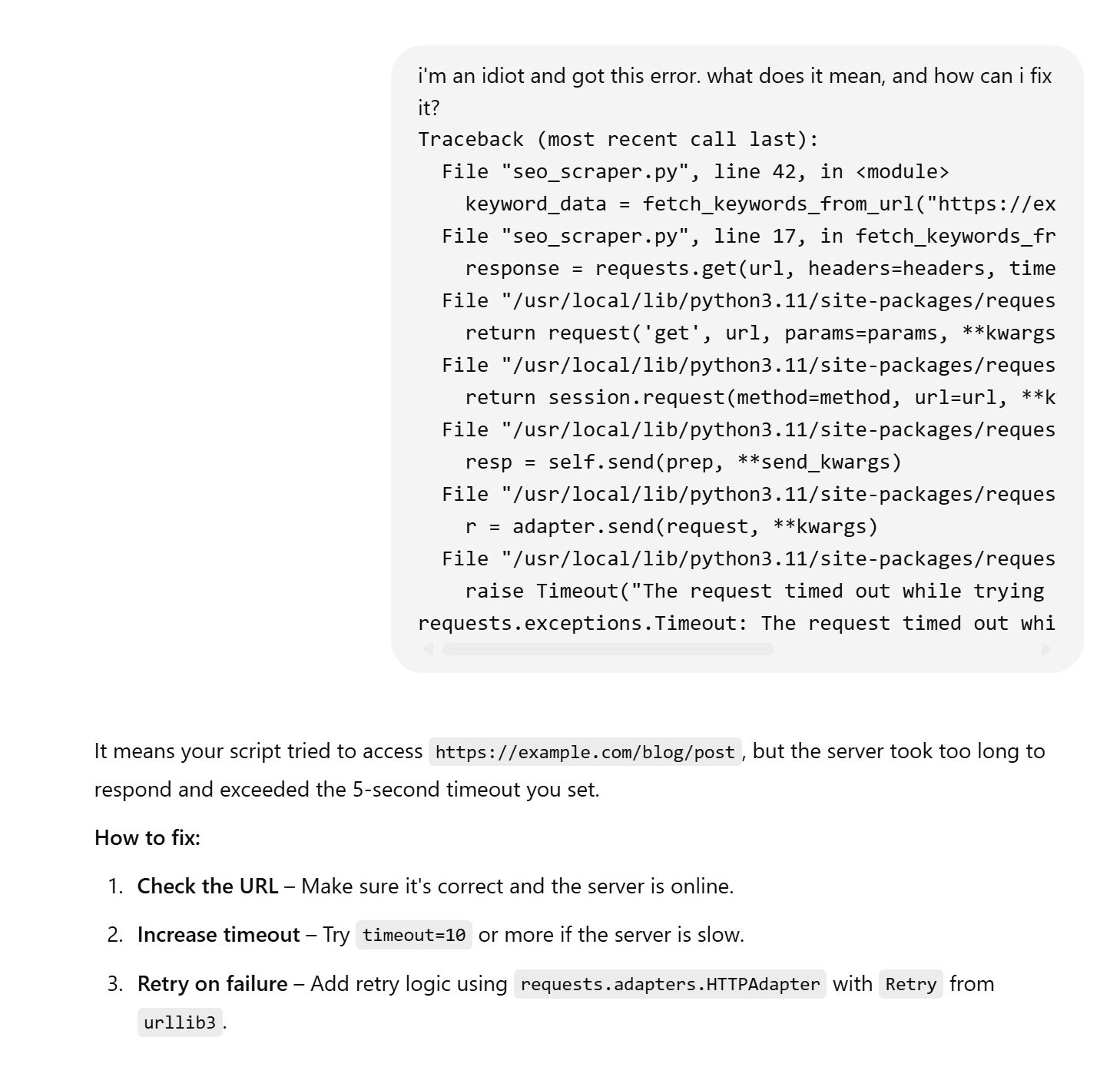

LLMs can tackle most error messages with ease—no matter how dumb they may be.

You don’t need to be an expert or install a complex local setup. You just need a browser, some curiosity, and a willingness to break things.

Start with a hands-on, beginner-friendly course. I worked through Replit’s 100 Days of Python and highly recommend it.

Here’s what you’ll need to understand:

1. Tools to write and run Python

Before you can write any Python code, you need a place to do it — that’s what we call an “environment.” Think of it like a workspace where you can type, test, and run your scripts.

Choosing the right environment is important because it affects how easily you can get started and whether you run into technical issues that slow down your learning.

Here are three great options depending on your preferences and experience level:

- Replit: A browser-based IDE (Integrated Development Environment), which means it gives you a place to write, run, and debug your Python code — all from your web browser. You don’t need to install anything — just sign up, open a new project, and start coding. It even includes AI features to help you write and debug Python scripts in real time. Visit Replit.

- Google Colab: A free tool from Google that lets you run Python notebooks in the cloud. It’s great for SEO tasks involving data analysis, scraping, or machine learning. You can also share notebooks like Google Docs, which is perfect for collaboration. Visit Google Colab.

- VS Code + Python interpreter: If you prefer to work locally or want more control over your setup, install Python itself, then Visual Studio Code and its Python extension. This gives you full flexibility, access to your file system, and support for advanced workflows like Git version control or virtual environments. Visit the VS Code website.

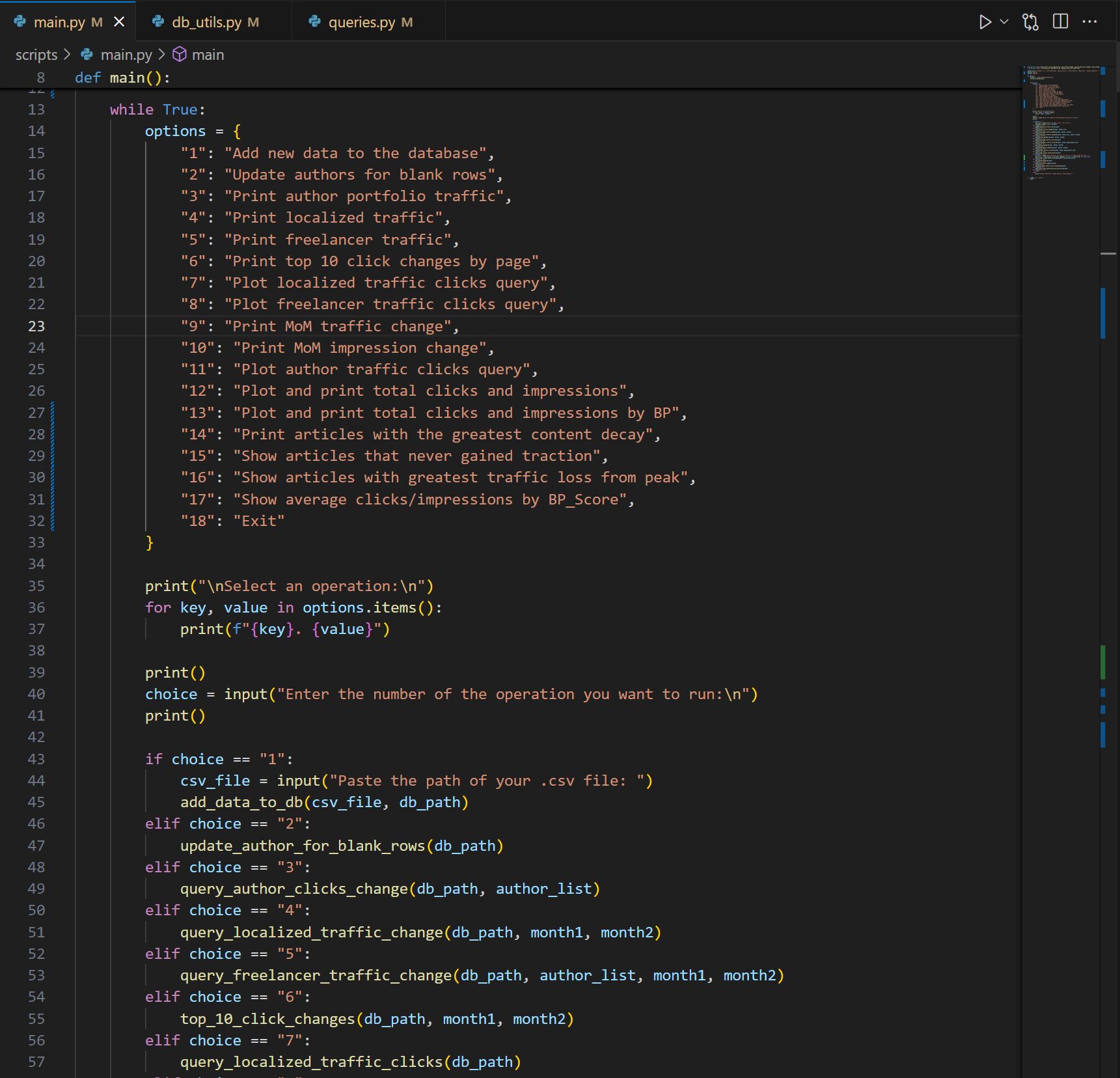

My blog reporting program, built with a lot of help from ChatGPT.

You don’t need to start with a local setup, but in the long run, getting comfortable with local development will give you more power and flexibility as your projects grow more complex.

If you’re unsure where to start, go with Replit or Colab. They eliminate setup friction so you can focus on learning and experimenting with SEO scripts right away.
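Whichever environment you choose, a quick sanity check confirms that Python runs and that the libraries used later in this guide are installed. This is a minimal sketch using only the standard library; the package list simply mirrors the libraries this guide mentions, so adjust it for your own projects.

```python
import importlib.util
import sys


def check_environment(packages):
    """Return a dict mapping each import name to True if the package is installed."""
    return {name: importlib.util.find_spec(name) is not None for name in packages}


if __name__ == "__main__":
    print(f"Python version: {sys.version.split()[0]}")
    # These are import names, not pip names: beautifulsoup4 is imported as bs4
    for name, installed in check_environment(["requests", "bs4", "pandas"]).items():
        print(f"{name}: {'installed' if installed else 'missing'}")
```

Paste it into a Colab cell, a Replit project, or a file in VS Code. Anything marked missing can be installed with pip (in Colab, prefix the command with !).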

2. Key concepts to learn early

You don’t need to master Python to start using it for SEO, but you should understand a few foundational concepts. These are the building blocks of nearly every Python script you’ll write.

- Variables, loops, and functions: Variables store data like a list of URLs. Loops let you repeat an action (like checking HTTP status codes for every page). Functions let you bundle actions into reusable blocks. These three ideas will power 90% of your automation. You can learn more about these concepts through beginner tutorials like Python for Beginners – Learn Python Programming or W3Schools Python Tutorial.

- Lists, dictionaries, and conditionals: Lists help you work with collections (like all your site’s pages). Dictionaries store data as key-value pairs (like each URL and its title). Conditionals (if, elif, else) let your script decide what to do based on what it finds. These are especially useful for branching logic or filtering results. You can explore these topics further with the W3Schools Python Data Structures guide and LearnPython.org’s control flow tutorial.

- Importing and using libraries: Python has thousands of libraries: pre-written packages that do heavy lifting for you. For example, requests lets you send HTTP requests, beautifulsoup4 parses HTML, and pandas handles spreadsheets and data analysis. You’ll use these in almost every SEO task. Check out The Python Requests Module by Real Python, Beautiful Soup: Web Scraping with Python for parsing HTML, and Python Pandas Tutorial from DataCamp for working with data in SEO audits.
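Here’s a small sketch that ties these ideas together: a list of URLs, a dictionary of status codes, a function, a loop, and an if/elif/else chain. The URLs and status codes are made-up sample values so it runs offline; in a real script, the codes would come from HTTP requests.

```python
# A list holds a collection of items: here, some sample URLs
urls = [
    "https://example.com/",
    "https://example.com/old-page",
    "https://example.com/blog",
]

# A dictionary stores key-value pairs: URL -> HTTP status code (sample values)
status_codes = {
    "https://example.com/": 200,
    "https://example.com/old-page": 404,
    "https://example.com/blog": 301,
}


def classify(status):
    """Use conditionals to turn a status code into a readable label."""
    if 200 <= status < 300:
        return "OK"
    elif 300 <= status < 400:
        return "Redirect"
    elif status >= 400:
        return "Error"
    else:
        return "Other"


# A loop repeats the same action for every URL in the list
for url in urls:
    status = status_codes[url]
    print(f"{url} -> {status} ({classify(status)})")
```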

These are my actual notes from working through Replit’s 100 Days of Python course.

These concepts may sound abstract now, but they come to life once you start using them. And the good news? Most SEO scripts reuse the same patterns again and again. Learn these fundamentals once and you can apply them everywhere.

3. Core SEO-related Python skills

These are the bread-and-butter skills you’ll use in nearly every SEO script. They’re not complex individually, but when combined, they let you audit sites, scrape data, build reports, and automate repetitive work.

- Making HTTP requests: This is how Python loads a webpage behind the scenes. Using the requests library, you can check a page’s status code (like 200 or 404), fetch HTML content, or simulate a crawl. Learn more from Real Python’s guide to the Requests module.

- Parsing HTML: After fetching a page, you’ll often want to extract specific elements, like the title tag, meta description, or all image alt attributes. That’s where beautifulsoup4 comes in. It helps you navigate and search HTML like a pro. This Real Python tutorial explains exactly how it works.

- Reading and writing CSVs: SEO data lives in spreadsheets: rankings, URLs, metadata, etc. Python can read and write CSVs using the built-in csv module or the more powerful pandas library. Learn how with this pandas tutorial from DataCamp.

- Using APIs: Many SEO tools and platforms (like Ahrefs or Google Search Console) offer APIs, interfaces that let you fetch data in structured formats like JSON. With the requests library and Python’s built-in json module, you can pull that data into your own reports or dashboards. Here’s a basic overview of APIs with Python.
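To see how the last two skills fit together, here’s a minimal sketch that parses a JSON API response and writes selected fields to a CSV. The JSON shape (a "pages" list with "url" and "traffic" fields) is hypothetical; every real API has its own structure, so check its docs. In a live script, the parsed data would come from requests.get(url).json() instead of a hard-coded string.

```python
import csv
import io
import json

# Hypothetical API response; a real one would come from requests.get(url).json()
response_text = """
{
  "pages": [
    {"url": "https://example.com/", "traffic": 1200},
    {"url": "https://example.com/blog", "traffic": 450}
  ]
}
"""


def pages_to_csv(payload, out):
    """Write each page's URL and traffic from a parsed JSON payload to a file-like object."""
    writer = csv.writer(out)
    writer.writerow(["URL", "Traffic"])
    for page in payload["pages"]:
        writer.writerow([page["url"], page["traffic"]])


data = json.loads(response_text)

# Write to an in-memory buffer here; use open("pages.csv", "w", newline="") for a real file
buffer = io.StringIO()
pages_to_csv(data, buffer)
print(buffer.getvalue())
```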

The Pandas library is unbelievably useful for data analysis, reporting, cleaning data, and a hundred other things.

Once you know these four skills, you can build tools that crawl, extract, clean, and analyze SEO data. Pretty cool.

Here are a few starter projects. They’re simple, practical, and each can be built with fewer than 20 lines of code.

1. Check if pages are using HTTPS

One of the simplest yet most useful checks you can automate with Python is verifying whether a set of URLs is using HTTPS. If you’re auditing a client’s site or reviewing competitor URLs, it helps to know which pages are still using insecure HTTP.

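This script reads a list of URLs from a CSV file, makes an HTTP request to each one, and prints the status code along with whether the final URL (after any redirects) uses HTTPS. A status code of 200 means the page is accessible. If the request fails (e.g., the site is down or the URL is malformed), it will tell you that too.

```python
import csv

import requests

# urls.csv should have one URL per row, in the first column
with open('urls.csv', 'r', newline='') as file:
    reader = csv.reader(file)
    for row in reader:
        if not row:
            continue  # skip blank rows
        url = row[0].strip()
        try:
            # requests follows redirects by default, so r.url is the final URL
            r = requests.get(url, timeout=10)
            uses_https = r.url.startswith('https://')
            print(f"{url}: {r.status_code}, HTTPS: {uses_https}")
        except requests.RequestException:
            print(f"{url}: Failed to connect")
```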
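If you only need to know which URLs in your list are written with http://, you don’t need to make any requests at all. This minimal sketch uses the standard library’s urllib.parse to sort URLs by scheme. It only looks at how each URL is written, so it won’t tell you whether a page is reachable or where it redirects. The sample URLs are placeholders.

```python
from urllib.parse import urlparse


def split_by_scheme(urls):
    """Return (https_urls, flagged_urls) based only on each URL's scheme."""
    https_urls, flagged_urls = [], []
    for url in urls:
        scheme = urlparse(url.strip()).scheme.lower()
        if scheme == "https":
            https_urls.append(url)
        else:
            # http:// URLs, plus anything without a scheme, get flagged for review
            flagged_urls.append(url)
    return https_urls, flagged_urls


secure, flagged = split_by_scheme([
    "https://example.com/",
    "http://example.com/contact",
])
print("Needs review:", flagged)
```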

2. Check for missing image alt attributes

Missing alt text is a common on-page issue, especially on older pages or large sites. Rather than checking every page manually, you can use Python to scan any page and flag images missing an alt attribute. This script fetches the page HTML, identifies all <img> tags, and prints out the src of any image missing descriptive alt text.

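```python
import requests
from bs4 import BeautifulSoup

url = 'https://example.com'

r = requests.get(url, timeout=10)
soup = BeautifulSoup(r.text, 'html.parser')

# Collect every <img> tag on the page
images = soup.find_all('img')

for img in images:
    # img.get('alt') is None when the attribute is missing and '' when it's empty,
    # so both are flagged. Empty alt is valid for decorative images; review those by hand.
    if not img.get('alt'):
        print(img.get('src'))
```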
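If you can’t install Beautiful Soup, or want to test the logic on a snippet of HTML before pointing it at a live page, Python’s built-in html.parser module can do the same job. This sketch also separates img tags with no alt attribute from those with an empty one, since alt="" is a valid way to mark decorative images. The sample HTML is made up.

```python
from html.parser import HTMLParser


class AltTextAuditor(HTMLParser):
    """Collect the src of <img> tags with a missing or empty alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []  # no alt attribute at all
        self.empty_alt = []    # alt="" (fine for decorative images, but worth a check)

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names for us
        if tag != "img":
            return
        attributes = dict(attrs)
        if "alt" not in attributes:
            self.missing_alt.append(attributes.get("src"))
        elif not (attributes["alt"] or "").strip():
            self.empty_alt.append(attributes.get("src"))


html = """
<img src="/logo.png" alt="Company logo">
<img src="/hero.jpg">
<img src="/divider.svg" alt="">
"""

auditor = AltTextAuditor()
auditor.feed(html)
print("Missing alt:", auditor.missing_alt)
print("Empty alt:", auditor.empty_alt)
```

To audit a live page, pass it the HTML you fetched with requests, e.g. auditor.feed(r.text).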

3. Scrape title and meta description tags

With this script, you can input a list of URLs, extract each page’s <title> and <meta name="description"> content, and save the results to a CSV file. This makes it easy to spot missing, duplicated, or poorly written metadata at scale and take action fast.

```python
import csv

import requests
from bs4 import BeautifulSoup

urls = ['https://example.com', 'https://example.com/about']

with open('meta_data.csv', 'w', newline='', encoding='utf-8') as f:
    writer = csv.writer(f)
    writer.writerow(['URL', 'Title', 'Meta Description'])

    for url in urls:
        r = requests.get(url, timeout=10)
        soup = BeautifulSoup(r.text, 'html.parser')

        # get_text() is safer than .string, which can return None
        # (for example, when <title> is empty or contains other tags)
        title = soup.title.get_text(strip=True) if soup.title else 'No title'

        desc_tag = soup.find('meta', attrs={'name': 'description'})
        # .get() avoids a KeyError if the tag has no content attribute
        desc = desc_tag.get('content', '').strip() if desc_tag else 'No description'

        writer.writerow([url, title, desc])
```
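Once meta_data.csv exists, a few more lines can flag the issues mentioned above. This sketch counts duplicate titles and descriptions with collections.Counter and flags long titles. The 60-character cutoff is an assumption (a common rule of thumb, not an official limit), and the sample rows are made up to match the columns the scraping script writes.

```python
import csv
from collections import Counter

TITLE_MAX_CHARS = 60  # assumed rule-of-thumb cutoff, not an official limit


def load_rows(path="meta_data.csv"):
    """Read the CSV written by the scraping script into a list of dicts."""
    with open(path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))


def audit_metadata(rows):
    """Return (url, issue) tuples for rows with 'URL', 'Title', 'Meta Description' keys."""
    title_counts = Counter(row["Title"] for row in rows)
    desc_counts = Counter(row["Meta Description"] for row in rows)
    issues = []
    for row in rows:
        url, title, desc = row["URL"], row["Title"], row["Meta Description"]
        if title == "No title":
            issues.append((url, "missing title"))
        elif title_counts[title] > 1:
            issues.append((url, "duplicate title"))
        if len(title) > TITLE_MAX_CHARS:
            issues.append((url, "long title"))
        if desc in ("No description", ""):
            issues.append((url, "missing description"))
        elif desc_counts[desc] > 1:
            issues.append((url, "duplicate description"))
    return issues


# Sample rows in the same shape as meta_data.csv
sample_rows = [
    {"URL": "https://example.com/", "Title": "Home", "Meta Description": "Welcome"},
    {"URL": "https://example.com/about", "Title": "Home", "Meta Description": "No description"},
]
for url, issue in audit_metadata(sample_rows):
    print(f"{url}: {issue}")
```

To audit your real export, run audit_metadata(load_rows()) instead of using the sample rows.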

4. Using Python with the Ahrefs API

If you’re an Ahrefs customer with API access, you can use Python to tap directly into our data, fetching backlinks, keywords, rankings, and more. This opens the door to large-scale SEO workflows: auditing thousands of pages, analyzing competitor link profiles, or automating content reporting.

For example, you could:

- Monitor new backlinks to your site daily and log them to a Google Sheet

- Automatically pull your top organic pages every month for content reporting

- Track keyword rankings across multiple sites and spot trends faster than using the UI alone

Here’s a simple example to fetch backlink data:

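```python
import requests

# Endpoint and query string as given in this example. Endpoints and parameters
# can differ between Ahrefs API versions, so confirm them in the API docs.
url = ("https://apiv2.ahrefs.com?from=backlinks&target=ahrefs.com"
       "&mode=domain&output=json&token=YOUR_API_TOKEN")

r = requests.get(url, timeout=30)
data = r.json()
print(data)
```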

You’ll need an Ahrefs API subscription and access token to run scripts like this one. Full documentation and endpoint details are available in the Ahrefs API docs.
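For the daily backlink-monitoring idea above, the core comparison is a set difference between two snapshots. This sketch assumes you’ve already pulled the referring page URLs out of each day’s API response into a plain list; the snapshots below are placeholders, and how you extract URLs depends on the endpoint and response format you use.

```python
def new_and_lost_links(yesterday, today):
    """Compare two snapshots of referring URLs and return (new, lost), each sorted."""
    before, after = set(yesterday), set(today)
    return sorted(after - before), sorted(before - after)


# Placeholder snapshots; in practice these would come from saved API responses
yesterday = ["https://blog.example.org/post-1", "https://news.example.net/story"]
today = ["https://blog.example.org/post-1", "https://forum.example.com/thread-42"]

new, lost = new_and_lost_links(yesterday, today)
print("New backlinks:", new)
print("Lost backlinks:", lost)
```

Using sets means duplicate URLs within a snapshot are ignored, and sorting keeps the output stable from day to day, which makes it easier to log to a sheet.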

Patrick Stox, aka Mr Technical SEO, is always tinkering with Python, and he’s made tons of tools and scripts freely available in Google Colab. Here are a few of my personal favorites:

- Redirect matching script: This script automates 1:1 redirect mapping by matching old and new URLs via full-text similarity. Upload your before-and-after URLs, run the notebook, and let it suggest redirects for you. It’s incredibly helpful during migrations. Run the script here.

- Page title similarity report: Google often rewrites page titles in search results. This tool compares your submitted titles (via Ahrefs data) with what Google actually displays, using a BERT model to measure semantic similarity. Ideal for large-scale title audits. Run the script here.

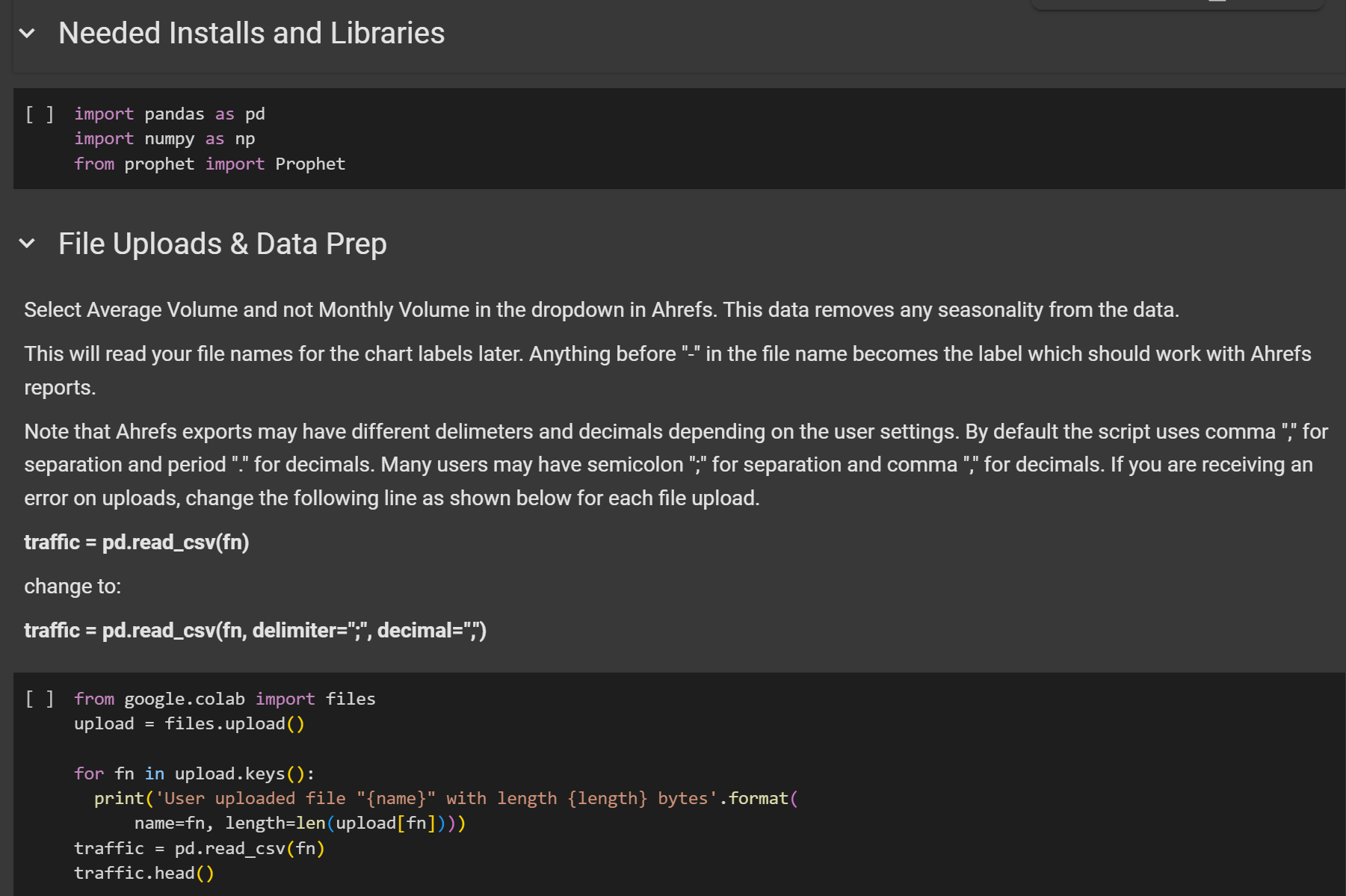

- Traffic forecasting script: Featured in our SEO Forecasting guide, this script uses historical traffic data to predict future performance. Great for setting expectations with clients or making the case for continued investment. Run the script here.

One of Patrick’s scripts in Colab.

Learn more about this forecasting script in Patrick’s guide to SEO forecasting.
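To get a feel for how similarity-based redirect matching works, here’s a much simpler stand-in for Patrick’s redirect matching script. Instead of full-text similarity on page content, it compares URL paths with the standard library’s difflib, so treat it as an illustration rather than a replacement. The URLs and the 0.5 similarity threshold are assumptions.

```python
from difflib import SequenceMatcher
from urllib.parse import urlparse


def path_of(url):
    """Lowercased URL path without a trailing slash, used as the text to compare."""
    return urlparse(url).path.lower().rstrip("/")


def match_redirects(old_urls, new_urls, threshold=0.5):
    """Map each old URL to its most similar new URL by path, or None if below threshold."""
    mapping = {}
    for old in old_urls:
        best_url, best_score = None, 0.0
        for new in new_urls:
            score = SequenceMatcher(None, path_of(old), path_of(new)).ratio()
            if score > best_score:
                best_url, best_score = new, score
        mapping[old] = best_url if best_score >= threshold else None
    return mapping


old = ["https://example.com/blog/python-seo-guide", "https://example.com/about-us"]
new = ["https://example.com/guides/python-for-seo", "https://example.com/company/about"]

for old_url, new_url in match_redirects(old, new).items():
    print(f"{old_url} -> {new_url or 'no confident match'}")
```

This compares every old URL against every new one, which is fine for a few thousand URLs but slows down quickly beyond that. Always review suggested redirects by hand before implementing them.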

Final thoughts

Python is one of the most impactful skills you can learn as an SEO. Even a few basic scripts can save hours of work and uncover insights you’d miss otherwise.

Start small. Run your first script. Fork one of Patrick’s tools. Or spend 30 minutes with Replit’s Python course. It won’t take long before you’re thinking: why didn’t I do this sooner?

Further reading:

- List of great SEO-specific scripts from tons of contributors

- Complete Python for SEO guide by JC Chouinard

- Real Python for hands-on Python tutorials

- 13 Technical Marketing Skills You Can Learn (Even If You’re Not Technical)